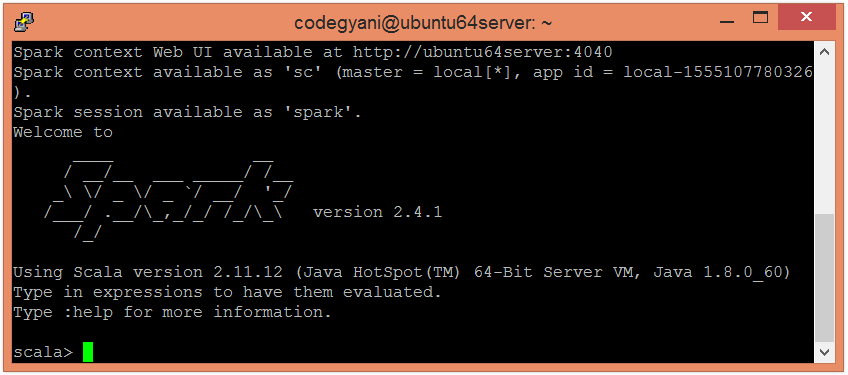

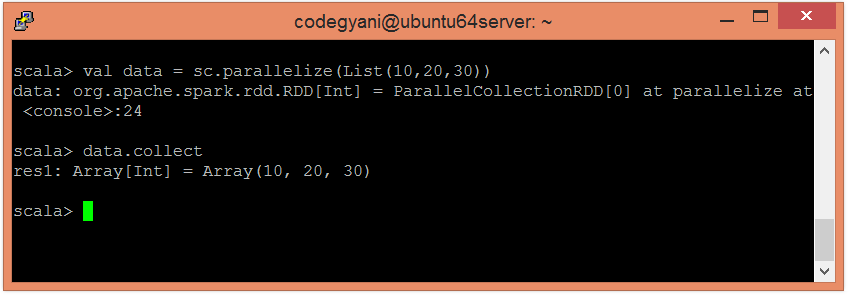

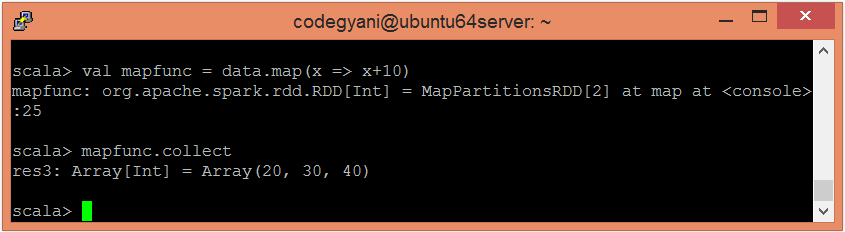

Spark Map function

In Spark, the map function passes each element of the source dataset through a function and forms a new distributed dataset from the results.

Example of Map function

In this example, we add a constant value 10 to each element.
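The idea of adding 10 to each element can be sketched in PySpark as follows. This is a minimal illustration, not the tutorial's original listing: the choice of Python, the helper names `add_ten` and `map_with_spark`, the local master setting, and the sample values are all assumptions made for the example.

```python
def add_ten(x):
    """Function that map applies to every element of the dataset."""
    return x + 10


def map_with_spark(values):
    """Hypothetical helper: run add_ten over `values` on a local Spark session."""
    # Imported here so add_ten can be tried without Spark installed.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder
        .master("local[*]")        # assumption: run locally on all cores
        .appName("MapExample")     # assumption: arbitrary application name
        .getOrCreate()
    )
    try:
        rdd = spark.sparkContext.parallelize(values)  # source distributed dataset
        mapped = rdd.map(add_ten)                     # transformation: builds a new dataset lazily
        return mapped.collect()                       # action: triggers the computation
    finally:
        spark.stop()
```

With PySpark installed, calling `map_with_spark([10, 20, 30])` should return `[20, 30, 40]`. The source dataset itself is left unchanged, because map produces a new dataset rather than modifying the existing one.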

Here, we got the desired output.
