Associative Memory Network

An associative memory network is a content-addressable memory structure that maps a set of input patterns to a set of output patterns. A content-addressable memory recalls data based on the degree of similarity between the input pattern and the patterns stored in memory. Let's understand this concept with an example:

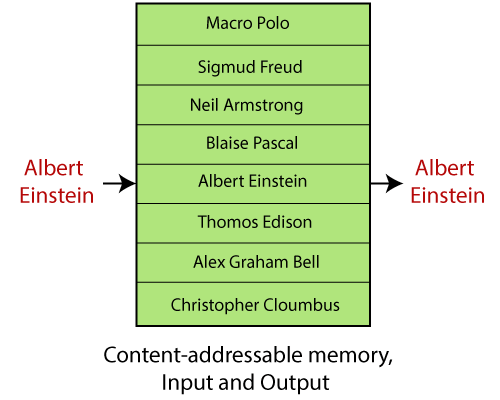

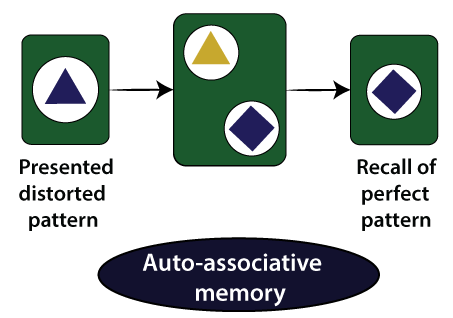

The figure illustrates a memory containing the names of various people. If the memory is content-addressable, a misspelled key such as "Albert Einstien" is sufficient to recover the correct name "Albert Einstein." This type of memory is therefore robust and fault-tolerant, because the memory model provides some form of error-correction capability.

Note: An associative memory is accessed by its content, as opposed to an explicit address as in a traditional computer memory system. The memory enables the recollection of information based on incomplete knowledge of its contents.

There are two types of associative memory: auto-associative memory and hetero-associative memory.

Auto-associative memory: An auto-associative memory recovers the previously stored pattern that most closely resembles the current pattern. It is also known as an auto-associative correlator.
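The name lookup described above can be imitated with ordinary string similarity. The following is only a minimal sketch: the list `stored_names`, the helper `recall_name`, and the misspelled key are hypothetical, and `difflib` similarity stands in for whatever matching a real associative memory would perform.

```python
import difflib

# Hypothetical memory contents; only "Albert Einstein" appears in the text.
stored_names = ["Albert Einstein", "Isaac Newton", "Marie Curie"]

def recall_name(key, memory=stored_names):
    """Return the stored name most similar to key, or None if nothing is close."""
    matches = difflib.get_close_matches(key, memory, n=1, cutoff=0.6)
    return matches[0] if matches else None

# A misspelled key is enough to retrieve the stored entry.
recalled = recall_name("Albert Einstien")
print(recalled)
```

The lookup is driven by the content of the key rather than by an index into the list, which is the property the note above describes.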

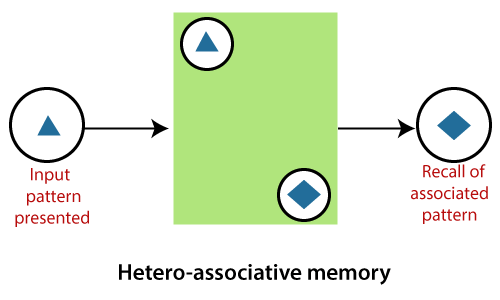

Let x[1], x[2], x[3], ..., x[M] be the M stored pattern vectors, and let the elements of each vector x[m] represent features obtained from the patterns. The auto-associative memory returns the pattern vector x[m] when presented with a noisy or incomplete version of x[m].

Hetero-associative memory: In a hetero-associative memory, the recovered pattern generally differs from the input pattern not only in type and format but also in content. It is also known as a hetero-associative correlator.

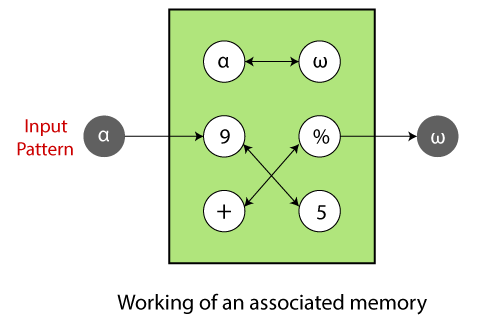

Consider M key-response pairs {a(1), x(1)}, {a(2), x(2)}, ..., {a(M), x(M)}. The hetero-associative memory returns the pattern vector x(m) when a noisy or incomplete version of a(m) is given.

Neural networks are usually used to implement these associative memory models; such an implementation is called a neural associative memory (NAM). The linear associator is the simplest artificial neural associative memory. These models use distinct neural network architectures to memorize data.

Working of Associative Memory: An associative memory is a depository of associated pattern pairs, stored in some form. If the depository is triggered with a pattern, the associated pattern appears at the output. The input could be an exact or partial representation of a stored pattern.
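The difference between auto-associative and hetero-associative recall can be sketched as a nearest-pattern lookup. This is an illustration under assumptions, not a neural implementation: the bipolar `keys` and `responses` arrays are made-up data, and Hamming distance is assumed as the similarity measure.

```python
import numpy as np

# Hypothetical stored data: M = 3 bipolar key patterns a(m) and responses x(m).
keys = np.array([
    [ 1,  1,  1, -1, -1, -1],
    [ 1, -1,  1, -1,  1, -1],
    [-1, -1,  1,  1,  1,  1],
])
responses = np.array([
    [ 1, -1],
    [-1,  1],
    [ 1,  1],
])

def nearest_index(stored, probe):
    """Index of the stored row with the smallest Hamming distance to probe."""
    distances = np.sum(stored != probe, axis=1)
    return int(np.argmin(distances))

def auto_recall(probe):
    """Auto-associative recall: return the stored key closest to the probe."""
    return keys[nearest_index(keys, probe)]

def hetero_recall(probe):
    """Hetero-associative recall: return the response paired with the closest key."""
    return responses[nearest_index(keys, probe)]

# Corrupt the second key by flipping its first component.
noisy = keys[1].copy()
noisy[0] = -noisy[0]

print(auto_recall(noisy))    # the clean second key
print(hetero_recall(noisy))  # the response paired with the second key
```

In the auto-associative case the output has the same form as the input; in the hetero-associative case the output is a different pattern that was merely paired with the matching key.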

If the memory is presented with an input pattern, say α, the associated pattern ω is recovered automatically.

These are the terms related to the associative memory network:

Encoding or memorization: Encoding or memorization refers to building an associative memory. It implies constructing an association weight matrix w such that when an input pattern is given, the stored pattern associated with that input pattern is recovered. For the kth pattern pair $(p_k, q_k)$, the correlation matrix $w_k$ has the entries

$$(w_{ij})_k = (p_i)_k \, (q_j)_k$$

where $(p_i)_k$ represents the ith component of pattern $p_k$, $(q_j)_k$ represents the jth component of pattern $q_k$, and $i = 1, 2, \ldots, m$, $j = 1, 2, \ldots, n$. The association weight matrix w is constructed by adding the individual correlation matrices $w_k$, i.e.,

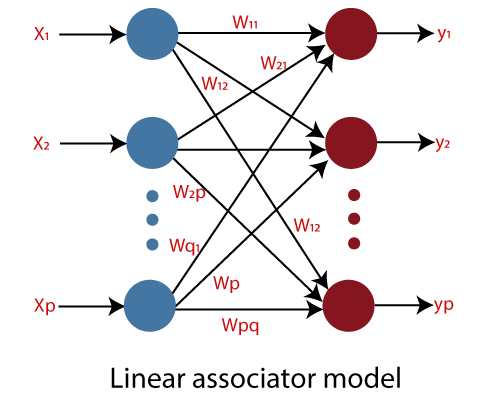

Here, α is the constant used in constructing w (a proportionality or normalizing constant).

Errors and noise: The input pattern may hold errors and noise, or may be an incomplete version of some previously encoded pattern. If a corrupted input pattern is presented, the network recovers the stored pattern that is closest to the actual input pattern. The presence of noise or errors results only in a gradual decrease, rather than a total degradation, in the performance of the network. Thus, associative memories are robust and fault-tolerant because many processing units perform highly parallel and distributed computations.

Performance Measures: The measures of associative memory performance with respect to correct recovery are memory capacity and content addressability. Memory capacity is the maximum number of associated pattern pairs that can be stored and correctly recovered. Content addressability refers to the ability of the network to recover the correct stored pattern. If the stored input patterns are mutually orthogonal, perfect recovery is possible. If they are not mutually orthogonal, imperfect recovery can occur because of crosstalk (overlap) among the patterns.

Associative memory models: The linear associator is one of the simplest and most widely used associative memory models. It is a collection of simple processing units that together have quite complex collective computational capability and behavior. Bidirectional Associative Memory (BAM) and the Hopfield model are other popular artificial neural network models used as associative memories. The Hopfield model is an auto-associative memory proposed by John Hopfield in 1982. Hopfield networks are constructed from bipolar units and a learning process, and the model computes its output recurrently in time until the system becomes stable.

Network architectures of Associative Memory Models: Neural associative memory models use various neural network architectures to memorize data. The network comprises either a single layer or two layers.
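
The recurrent behaviour of the Hopfield model mentioned above can be sketched as follows. The text does not give the update rule, so this sketch assumes the commonly used formulation: weights formed as a sum of outer products of the stored bipolar patterns, a zeroed diagonal, and unit-by-unit (asynchronous) sign updates repeated until the state stops changing. The patterns and the function names `train_hopfield` and `recall` are hypothetical.

```python
import numpy as np

def train_hopfield(patterns):
    """Sum of outer products of the stored bipolar patterns, with no self-connections."""
    patterns = np.asarray(patterns)
    W = patterns.T @ patterns
    np.fill_diagonal(W, 0)
    return W

def recall(W, probe, max_sweeps=20):
    """Update units one at a time until a full sweep leaves the state unchanged."""
    state = np.array(probe).copy()
    for _ in range(max_sweeps):
        previous = state.copy()
        for i in range(len(state)):
            activation = W[i] @ state
            if activation != 0:  # keep the current value on a tie
                state[i] = 1 if activation > 0 else -1
        if np.array_equal(state, previous):
            break  # the network has reached a stable state
    return state

# Two mutually orthogonal bipolar patterns (made-up data).
patterns = np.array([
    [1,  1, 1,  1, -1, -1, -1, -1],
    [1, -1, 1, -1,  1, -1,  1, -1],
])
W = train_hopfield(patterns)

# Corrupt the first pattern by flipping its first unit, then let the network settle.
noisy = patterns[0].copy()
noisy[0] = -noisy[0]
recovered = recall(W, noisy)
print(recovered)
```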
The linear associator model is a feed-forward network consisting of two layers of processing units: the first layer serves as the input layer and the other as the output layer. The Hopfield model consists of a single layer of processing elements in which each unit is connected to every other unit in the network. The bidirectional associative memory (BAM) model is similar to the linear associator, but its connections are bidirectional. The neural network architectures of these models and the structure of the corresponding association weight matrix w are described below.

Linear Associator model (two layers): The linear associator model is a feed-forward network in which the output is produced in a single feed-forward computation. The model consists of two layers of processing units, one working as the input layer and the other as the output layer. The inputs are directly connected to the outputs through a set of weights: weighted connections link each input to every output. Each output neuron computes the sum of the products of the weights and the inputs. The architecture of the linear associator is given below.

All p input units are connected to all q output units via the connection weight matrix $W = [w_{ij}]_{p \times q}$, where $w_{ij}$ describes the strength of the unidirectional connection from the ith input unit to the jth output unit. The connection weight matrix stores the z different associated pattern pairs $\{(X_k, Y_k);\ k = 1, 2, 3, \ldots, z\}$. Constructing an associative memory means building the connection weight matrix W such that if an input pattern is presented, the stored pattern associated with that input pattern is recovered.
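The construction of W and a single feed-forward recall can be sketched with NumPy. The pattern pairs below are made up, the function names are hypothetical, and the scaling constant `alpha = 1/p` is an assumption chosen so that orthogonal bipolar inputs are recovered exactly; the text itself does not fix these choices.

```python
import numpy as np

def build_weights(X, Y, alpha=1.0):
    """W = alpha * sum_k outer(X[k], Y[k]); W has shape (p, q)."""
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    return alpha * X.T @ Y

def recall(W, x):
    """Single feed-forward pass: output j is the weighted sum of all inputs."""
    return np.asarray(x, dtype=float) @ W

# z = 3 pairs with p = 4 inputs and q = 2 outputs; the inputs are mutually orthogonal.
X = np.array([[1, 1, 1, 1], [1, -1, 1, -1], [1, 1, -1, -1]])
Y = np.array([[1, 0], [0, 1], [1, 1]])
W = build_weights(X, Y, alpha=1 / X.shape[1])
print(recall(W, X[1]))  # equals Y[1]: perfect recovery

# Non-orthogonal inputs: the recalled output is contaminated by the other pair.
X_bad = np.array([[1, 1, 1, 1], [1, 1, 1, -1]])
Y_bad = np.array([[1, 0], [0, 1]])
W_bad = build_weights(X_bad, Y_bad, alpha=1 / X_bad.shape[1])
print(recall(W_bad, X_bad[0]))  # first component correct, second shows crosstalk
```

With orthogonal inputs, the contributions of the other stored pairs cancel during recall; with overlapping inputs, part of another pair's response leaks into the output, which is the imperfect recovery described under the performance measures.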
