Creating Kafka Producer in Java

In the last section, we learned the basic steps to create a Kafka project. Before creating a Kafka producer in Java, we need to define the essential project dependencies. Our project requires two dependencies: the Kafka client library (kafka-clients) and the SLF4J Simple logging library (slf4j-simple).

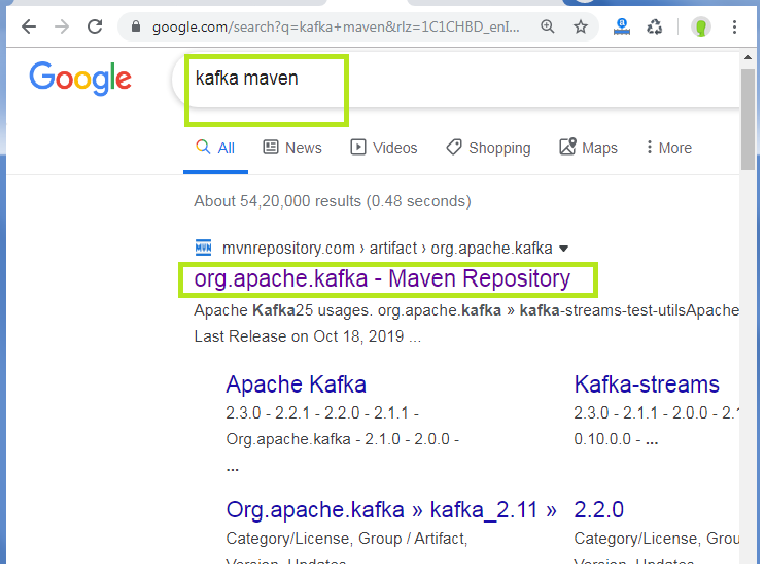

Follow these steps to set up the dependencies:

Step1: The build tool Maven uses a 'pom.xml' file. The 'pom.xml' is an XML file that holds the project's information, such as the GroupID, ArtifactID, and Version. The user defines all the necessary project dependencies in this file. Open the 'pom.xml' file.

Step2: First, we need to define the Kafka dependencies. Create a '<dependencies>...</dependencies>' block, within which we will define the required dependencies.

Step3: Now, open a web browser and search for 'Kafka Maven' as shown below:

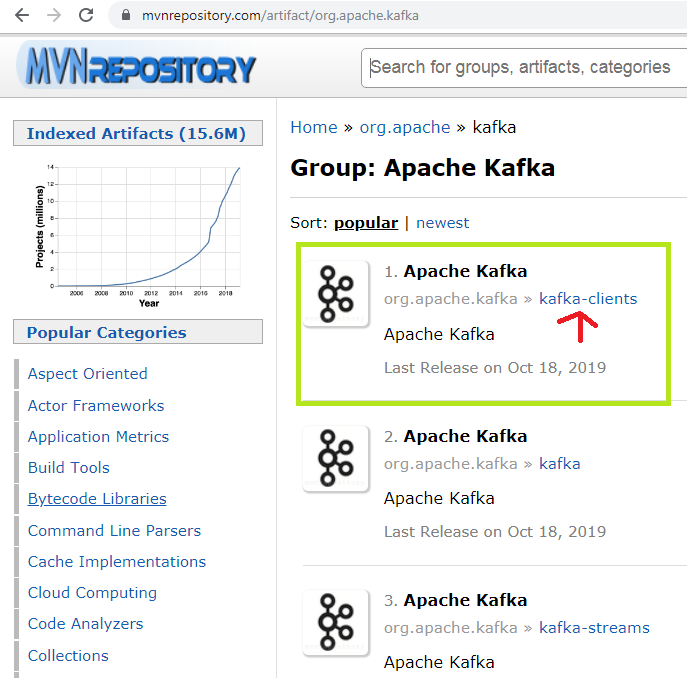

Click the highlighted link and select the 'Apache Kafka, Kafka-Clients' repository. A sample is shown in the snapshot below:

Step4: Select the repository version that matches the Kafka version downloaded on the system. For example, this tutorial uses 'Apache Kafka 2.3.0', so we require repository version 2.3.0 (the highlighted one).

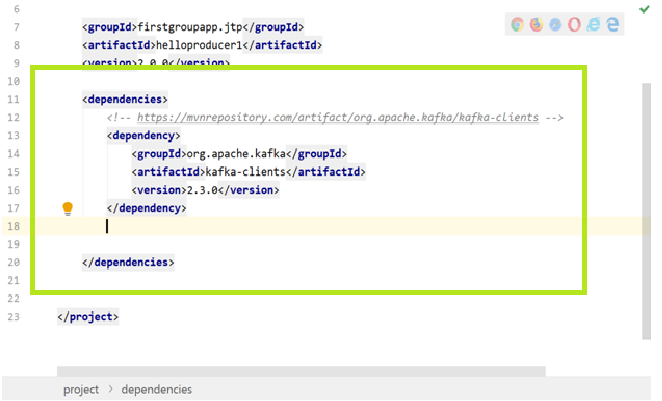

Step5: After clicking on the repository version, a new window will open. Copy the dependency code from there.

Since we are using Maven, copy the Maven code. If the user is using Gradle, copy the Gradle code instead.

Step6: Paste the copied code into the '<dependencies>...</dependencies>' block, as shown below:

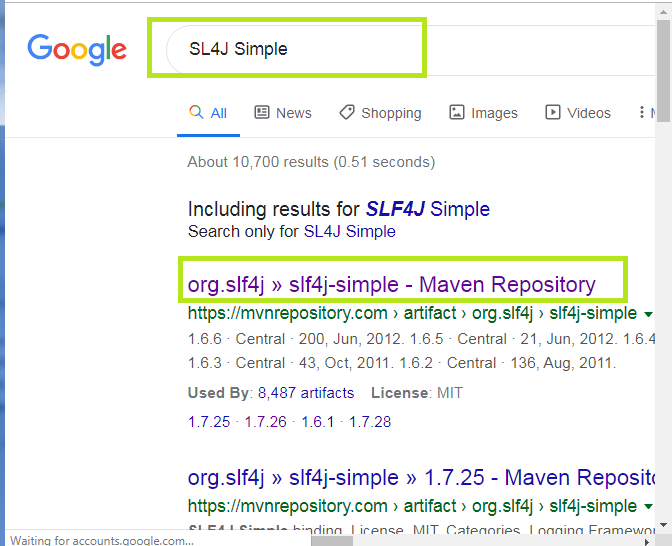

If the version number appears in red, the user has not enabled the 'Auto-Import' option. In that case, go to View > Tool Windows > Maven. A Maven Projects window will appear on the right side of the screen. Click the 'Refresh' button there; this re-imports the Maven project and downloads the missing dependency. When the color changes to black, the dependency has been downloaded and the user can proceed to the next step.

Step7: Now, open the web browser, search for 'SLF4J Simple', and open the highlighted link shown in the snapshot below:

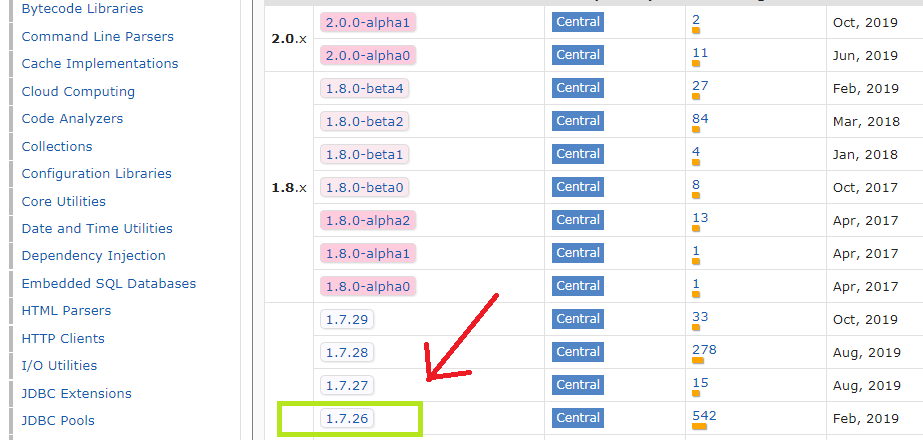

A bunch of repositories will appear. Click on the appropriate repository.

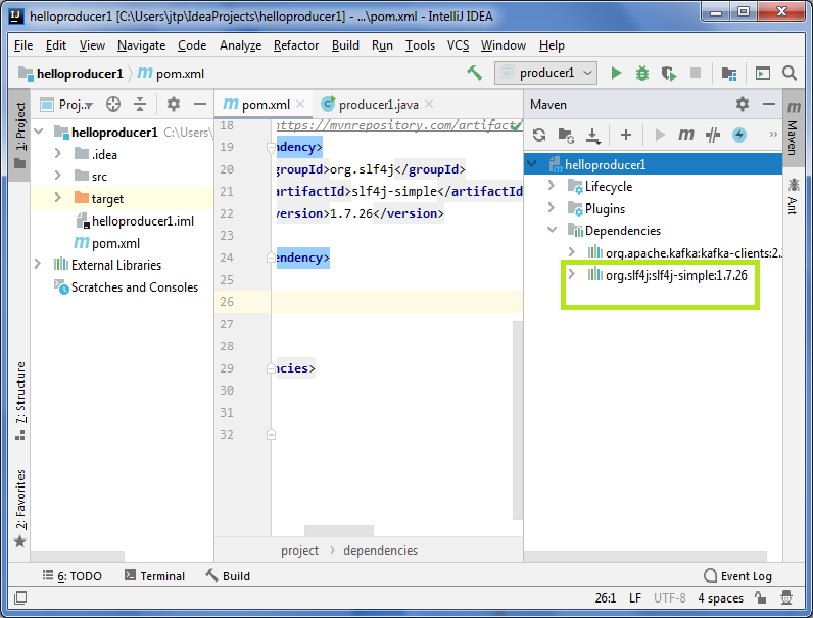

To identify the appropriate version, look at the Maven Projects window and check the slf4j version listed under 'Dependencies' (this is the slf4j-api version pulled in by kafka-clients).

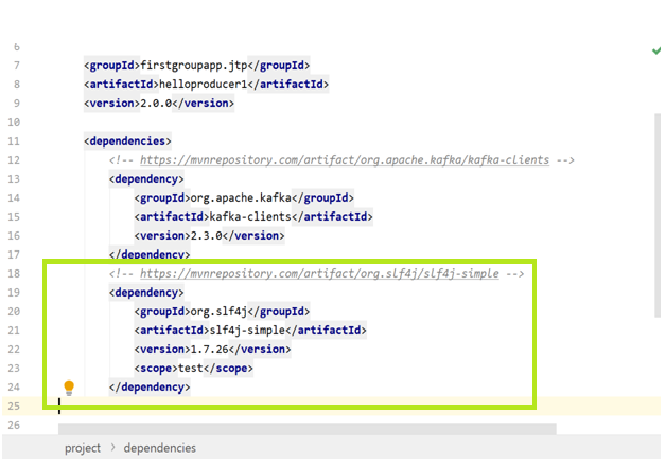

Click the matching version, copy the code, and paste it below the Kafka dependency in the 'pom.xml' file, as shown below:

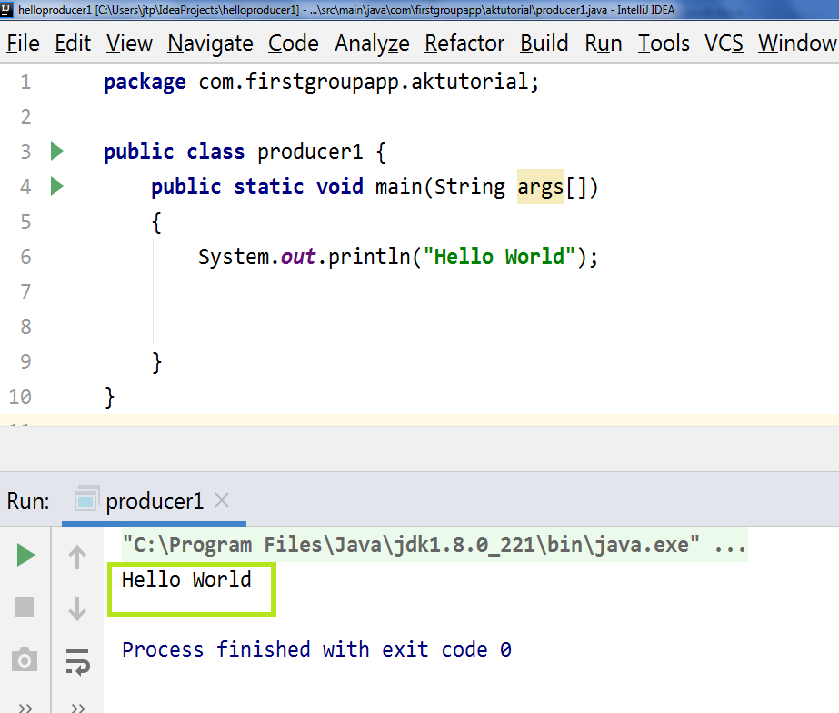

Note: Either comment out or remove the <scope>test</scope> line from the copied code. The test scope makes the dependency available only when compiling and running tests, but our producer code needs it at runtime, so the scope should not be limited.

Now all the required dependencies are set. Let's try a simple 'Hello World' example. First, create a Java package, say 'com.firstgroupapp.aktutorial', and a Java class beneath it. While creating the package, follow the Java package naming conventions. Finally, write the 'Hello World' program.
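The program itself is not reproduced in the text, so here is a minimal sketch of it. It assumes the class is named producer1 (to match the 'producer1.java' file mentioned next) and lives in the package created above. Java convention would normally capitalize the class name, but the file name implies this spelling.

```java
package com.firstgroupapp.aktutorial;

// Minimal program used only to confirm that the project compiles and runs.
public class producer1 {
    public static void main(String[] args) {
        System.out.println("Hello World");
    }
}
```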

After executing the 'producer1.java' file, the output 'Hello World' is displayed successfully. This confirms that IntelliJ IDEA is set up correctly.

Creating Java Producer

There are four steps to create a Java producer, as discussed earlier: creating the producer properties, creating the producer, creating a producer record, and sending the data.

Creating Producer Properties

Apache Kafka offers various properties that are used when creating a producer. To learn about each property, visit the official Apache Kafka site, 'https://kafka.apache.org', and go to Documentation > Configuration > Producer Configs, which lists all the producer properties offered by Apache Kafka. Here, we will discuss the required properties: bootstrap.servers, key.serializer, and value.serializer.

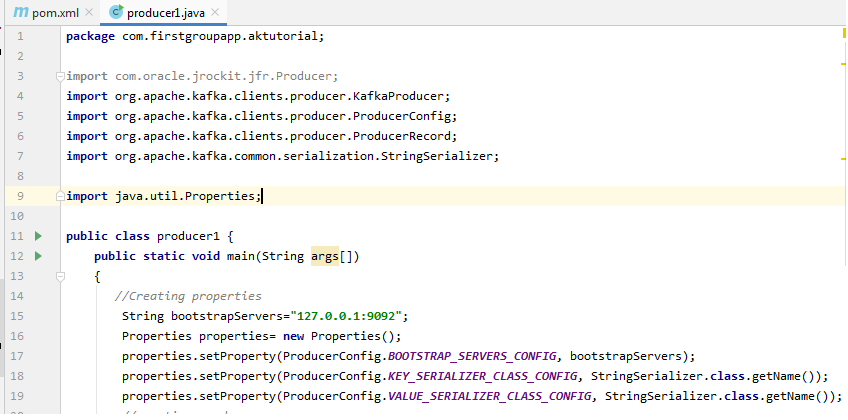

Now, let's see how the producer properties are implemented in IntelliJ IDEA.

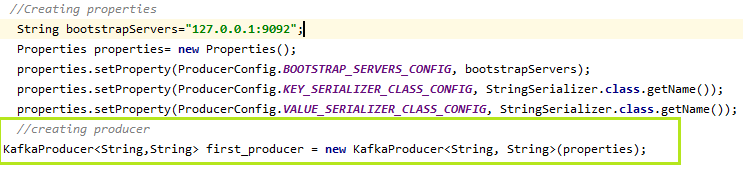
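Since the snapshot shows the properties only as an image, the sketch below writes them out. It is a minimal sketch, not the tutorial's exact code: the helper class ProducerPropertiesSketch and its method createProducerProperties are hypothetical names, and the broker address 127.0.0.1:9092 is assumed from the console-consumer command used later. The keys are written as plain strings so the block runs with only the JDK; with kafka-clients on the classpath, ProducerConfig.BOOTSTRAP_SERVERS_CONFIG and StringSerializer.class.getName() produce the same strings.

```java
package com.firstgroupapp.aktutorial;

import java.util.Properties;

// Hypothetical helper class; the tutorial builds these properties inline.
public class ProducerPropertiesSketch {

    // Returns the three properties this tutorial treats as required.
    public static Properties createProducerProperties() {
        Properties properties = new Properties();
        // Address of a broker used to bootstrap the connection to the cluster
        // (assumed local broker on the default port 9092).
        properties.setProperty("bootstrap.servers", "127.0.0.1:9092");
        // Both the key and the value of each record are serialized as strings.
        properties.setProperty("key.serializer",
                "org.apache.kafka.common.serialization.StringSerializer");
        properties.setProperty("value.serializer",
                "org.apache.kafka.common.serialization.StringSerializer");
        return properties;
    }

    public static void main(String[] args) {
        // Print each property so the configuration can be checked by eye.
        createProducerProperties().forEach(
                (key, value) -> System.out.println(key + " = " + value));
    }
}
```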

When we create the Properties object, IntelliJ IDEA adds the import 'java.util.Properties' to the code. This completes the first step, creating the producer properties.

Creating the Producer

To create a Kafka producer, we just need to create an object of KafkaProducer, passing the producer properties to its constructor. In the snapshots below, 'first_producer' is the name chosen for the producer object; the user can choose any name.

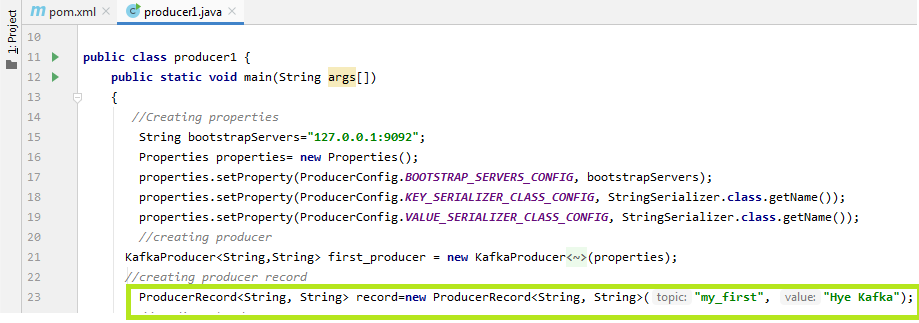

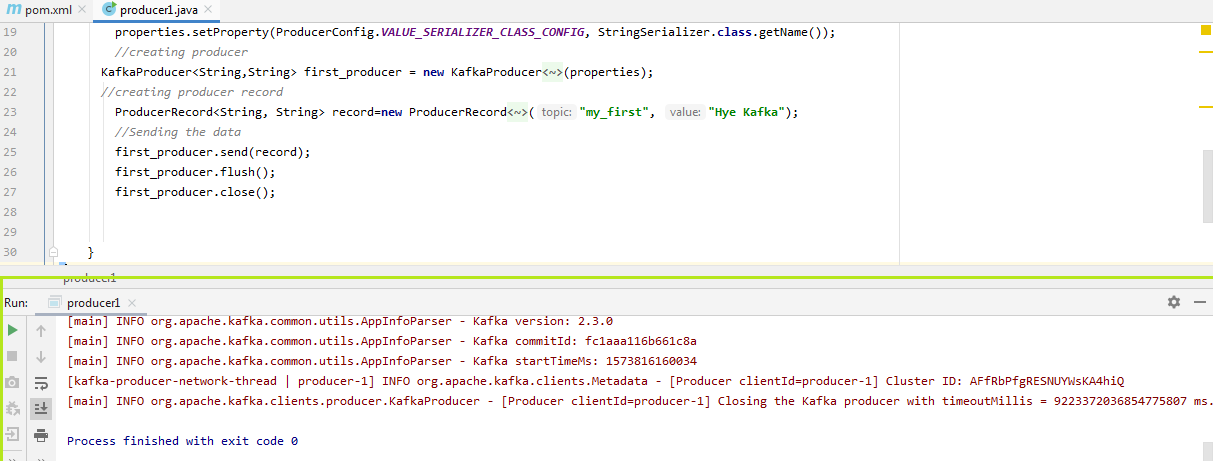

Creating the Producer Record

To send data to Kafka, the user needs to create a ProducerRecord, because every message a producer sends is wrapped in a ProducerRecord. The record specifies the topic name as well as the message to be delivered to Kafka. In the snapshot below, 'record' is the name chosen for the producer record, 'my_first' is the topic name, and 'Hye Kafka' is the message. The user can choose these values accordingly.

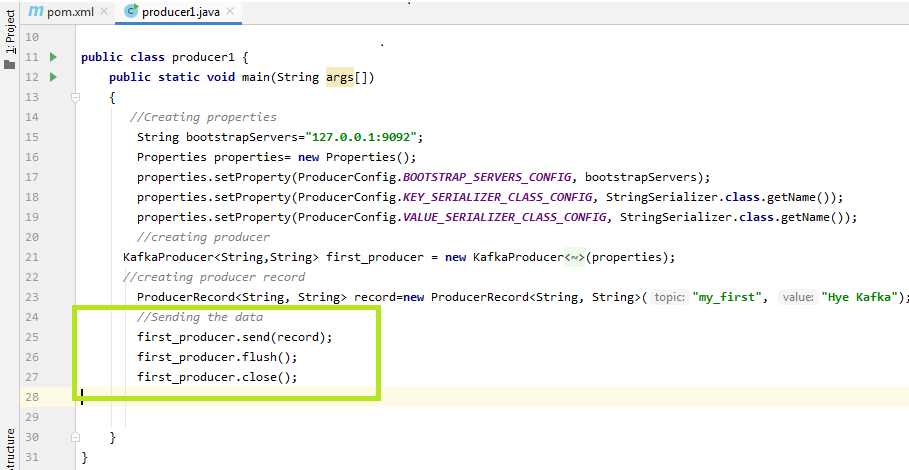

Sending the data

Now the user is ready to send the data to Kafka. The producer just needs to call its send() method with the ProducerRecord object, as shown in the snapshot below:

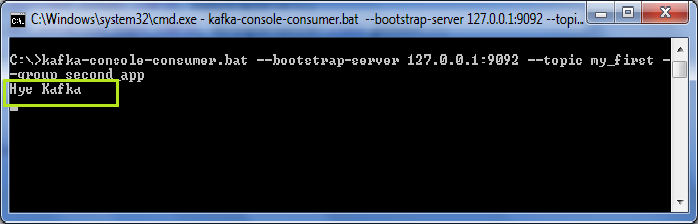

To see the output of the above code, open the 'kafka-console-consumer' on the CLI using the command: 'kafka-console-consumer --bootstrap-server 127.0.0.1:9092 --topic my_first --group first_app'. Producing data is asynchronous: send() only places the record in the producer's buffer and returns immediately. Therefore, two additional calls, flush() and close(), are required (as seen in the above snapshot). flush() blocks until all buffered records have been sent, and close() shuts the producer down after completing any pending requests. If the program exits without calling them, the buffered records may never reach Kafka, and the consumer will have nothing to read. The output of the code on the consumer console is shown below:

On the producer's terminal, users can see various log messages; the last line says that the Kafka producer is closed. Meanwhile, the message sent by the producer appears on the consumer console.
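The four steps above can be combined into one program. This is a minimal sketch under stated assumptions: kafka-clients 2.3.0 is on the classpath, a broker listens on 127.0.0.1:9092, and the class name ProducerDemo and the method buildProperties are hypothetical. The variable name first_producer is kept from the tutorial even though Java convention prefers camelCase.

```java
package com.firstgroupapp.aktutorial;

import java.util.Properties;

import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerConfig;
import org.apache.kafka.clients.producer.ProducerRecord;
import org.apache.kafka.common.serialization.StringSerializer;

// Hypothetical class name; assumes kafka-clients 2.3.0 and a local broker.
public class ProducerDemo {

    // Step 1: the producer properties (hypothetical helper method).
    public static Properties buildProperties() {
        Properties properties = new Properties();
        properties.setProperty(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "127.0.0.1:9092");
        properties.setProperty(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG,
                StringSerializer.class.getName());
        properties.setProperty(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,
                StringSerializer.class.getName());
        return properties;
    }

    public static void main(String[] args) {
        // Step 2: create the producer from the properties.
        KafkaProducer<String, String> first_producer = new KafkaProducer<>(buildProperties());

        // Step 3: a record for topic "my_first" with value "Hye Kafka" and no key.
        ProducerRecord<String, String> record = new ProducerRecord<>("my_first", "Hye Kafka");

        // Step 4: send() is asynchronous; it only buffers the record.
        first_producer.send(record);

        // Block until every buffered record has been sent,
        first_producer.flush();
        // then release the producer's network thread and other resources.
        first_producer.close();
    }
}
```

Run it while the console consumer from the previous step is open. Because KafkaProducer implements Closeable, a try-with-resources block could replace the explicit close(); the explicit calls are kept here to mirror the steps described above.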
