Docker Installation

Docker can be installed on macOS, Windows, Linux, and cloud platforms. Docker Engine runs natively on Linux distributions. This tutorial gives a step-by-step process for installing Docker Engine on Ubuntu Xenial 16.04 (LTS).

Introduction to Docker

Docker is an open platform for developing, shipping, and running applications. Docker lets us separate our applications from our infrastructure so that we can deliver software quickly. With Docker, we can manage our infrastructure in the same ways we manage our applications. By taking advantage of Docker's methodologies for shipping, testing, and deploying code quickly, we can significantly reduce the delay between writing code and running it in production.

Background of Docker

Containers are isolated from one another and bundle their own software, libraries, and configuration files. They can communicate with each other through well-defined channels. Containers use fewer resources than virtual machines because all containers share the services of a single operating system kernel.

Operation

Docker can package an application and its dependencies in a virtual container that can run on macOS, Windows, or Linux computers. This lets the application run in a variety of locations: on-premises, in a public cloud, or in a private cloud. When running on Linux, Docker uses the resource isolation features of the Linux kernel (such as kernel namespaces and cgroups) and a union-capable file system (such as OverlayFS) to allow containers to run within a single Linux instance, avoiding the overhead of starting and maintaining virtual machines. On macOS, Docker uses a Linux virtual machine to run the containers.

Because Docker containers are lightweight, a single server or virtual machine can run many containers simultaneously. A 2018 analysis found that a typical Docker use case involves running eight containers per host. Docker can also be installed on single-board computers such as the Raspberry Pi.

What is the Docker Platform?

Docker provides the ability to package and run an application in a loosely isolated environment called a container. This isolation and security allow us to run many containers simultaneously on a given host. Containers are lightweight and contain everything needed to run the application, so we do not need to rely on what is currently installed on the host. We can easily share containers while we work and be sure that everyone we share with gets the same container, which works the same way. Docker provides a platform and tooling to manage the lifecycle of our containers:

Licensing model of Docker

Dockerfiles can themselves be licensed under an open-source license. It is important to understand that the scope of such a license statement is only the Dockerfile, not the container image. Docker Engine is licensed under the Apache License 2.0. Docker Desktop distributes some components that are licensed under the GNU General Public License.

Components

As a service, the Docker software offering consists of three components, which are listed and discussed below:

Tools

History of Docker

Docker, Inc. was founded by Kamel Founadi, Solomon Hykes, and Sebastien Pahl during the Y Combinator Summer 2010 startup incubator group and launched in 2011. The startup was also one of the 12 startups in Founder's Den's first cohort. Hykes started the Docker project in France as an internal project within dotCloud, a platform-as-a-service company. Docker was introduced to the public at PyCon in Santa Clara in 2013 and was released as open source in March 2013. At the time, it used LXC as its default execution environment. One year later, with the release of version 0.9, Docker replaced LXC with its own component, libcontainer, written in the Go language. In 2017, Docker created the Moby project for open research and development.

Adoption

Working of Containers

Containers are made possible by process isolation and virtualization capabilities built into the Linux kernel. These capabilities include namespaces, which restrict a process's visibility of or access to other resources and areas of the system, and cgroups (control groups), which allocate resources among processes. Together they allow multiple application components to share the resources of a single host operating system instance, in much the same way that a hypervisor allows multiple VMs to share the CPU, memory, and other resources of a single hardware server. Container technology provides all the functionality and benefits of virtual machines, such as application isolation, cost-effective scalability, and disposability, plus important additional benefits:

Companies also report other advantages of using containers, such as faster responses to market changes and improved application quality.

Usage of Docker

Docker streamlines the development lifecycle by allowing developers to work in standardized environments using local containers that provide our applications and services. Containers are well suited to continuous integration and continuous delivery (CI/CD) workflows.

Docker's container-based platform allows for highly portable workloads. Docker containers can run on a developer's local laptop, on physical or virtual machines in a data center, on cloud providers, or in a mixture of these environments. Docker's portability and lightweight nature also make it easy to manage workloads dynamically, scaling services and applications up or tearing them down as business needs dictate.

Docker is fast and lightweight. It provides a viable, cost-effective alternative to hypervisor-based virtual machines, so we can use more of our server capacity to achieve our business goals. Docker is well suited to high-density environments and to small and medium deployments where we need to do more with fewer resources.

Prerequisites

Docker has two important installation requirements:

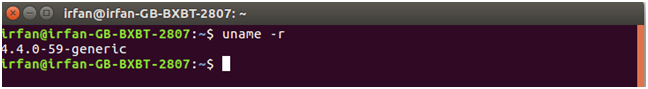

To check your current kernel version, open a terminal and run the `uname -r` command, which displays your kernel version:

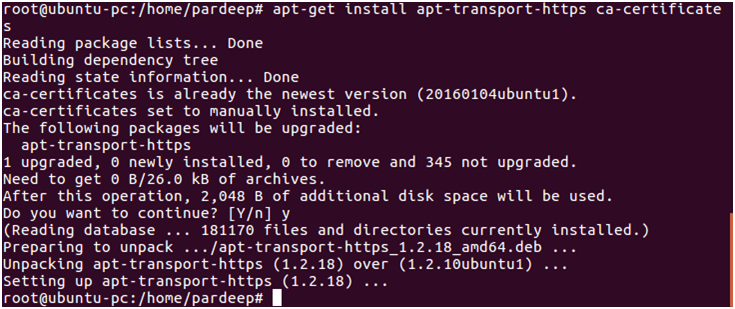

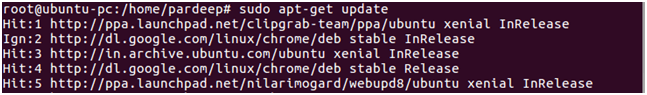
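The two requirements appear only in the image above. Assuming they are the ones Docker's legacy Ubuntu documentation listed (a 64-bit installation and a kernel of version 3.10 or higher), both can be checked from a terminal with a small sketch like the one below. The function names `version_at_least` and `check_docker_prereqs` are hypothetical, version comparison relies on GNU `sort -V`, and x86_64 is assumed to be the 64-bit target.

```bash
#!/usr/bin/env bash
# Sketch: check the two assumed prerequisites (64-bit x86_64, kernel >= 3.10).

# Succeed if dotted version $1 is >= version $2 (relies on GNU sort -V).
version_at_least() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

check_docker_prereqs() {
  local arch kernel
  arch="$(uname -m)"       # machine hardware name, e.g. x86_64
  kernel="$(uname -r)"     # kernel release, e.g. 4.4.0-21-generic
  kernel="${kernel%%-*}"   # keep only the numeric part, e.g. 4.4.0

  # Assumption: x86_64 (amd64) is the only 64-bit target considered here.
  if [ "$arch" != "x86_64" ]; then
    echo "Docker needs a 64-bit installation; found $arch" >&2
    return 1
  fi
  if ! version_at_least "$kernel" "3.10"; then
    echo "Docker needs kernel 3.10 or newer; found $kernel" >&2
    return 1
  fi
  echo "OK: $arch, kernel $kernel"
}
```

Running `check_docker_prereqs` prints an `OK:` line when both checks pass and returns a non-zero status otherwise, so it can guard the remaining steps, for example `check_docker_prereqs && echo "ready to install"`.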

Update apt sources

Follow these instructions to update your APT sources.

1. Open a terminal window.
2. Log in as a user with `sudo` or root privileges.
3. Update the package information and install the CA certificates, as shown in the screenshot below.

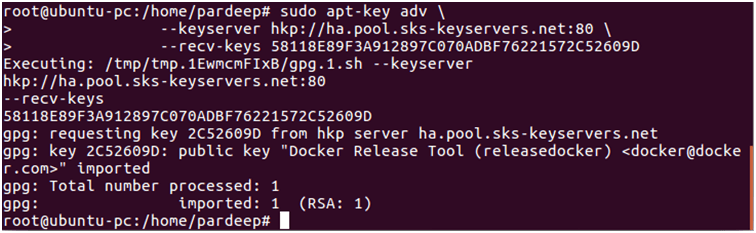

4. Add the new GPG key. The following command downloads the key, as shown in the screenshot below.

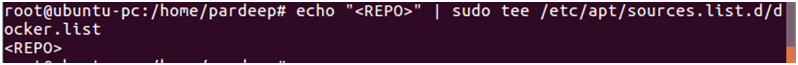

5. Run the following command, which replaces the contents of the Docker APT source file with the entry for your operating system, as shown in the screenshot below.

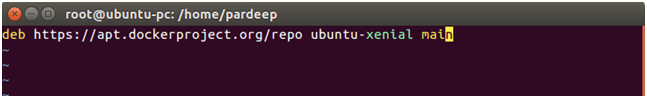

6. Open the file /etc/apt/sources.list.d/docker.list and paste the following line into it.

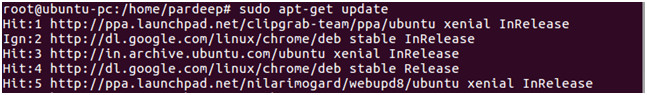

7. Update your APT package index again.

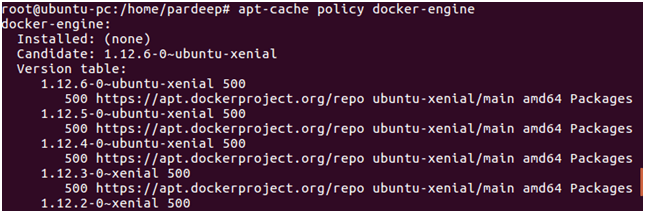

8. Verify that APT is pulling from the right repository, as shown in the screenshot below.

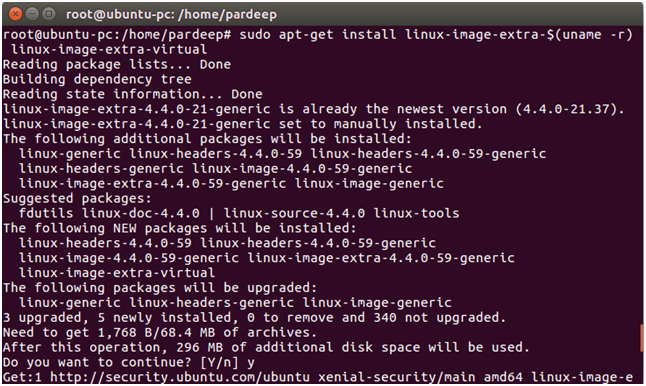

9. Install the recommended packages.
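The commands for steps 3 to 9 appear only as screenshots. The sketch below reconstructs them on the assumption that this tutorial follows Docker's legacy instructions for the `docker-engine` package; the key ID, keyserver, and repository URL are those assumed values rather than text read from the screenshots. The apt.dockerproject.org repository and the SKS keyserver pool have since been shut down, so treat this as a record of the historical procedure. The names `docker_apt_entry` and `setup_docker_repo` are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch of steps 3-9, assuming Docker's legacy docker-engine instructions.
# The key ID, keyserver, and repository URL are assumptions, not read from
# the screenshots, and both services are no longer online.
DOCKER_KEY_ID="58118E89F3A912897C070ADBF76221572C52609D"
DOCKER_KEYSERVER="hkp://p80.pool.sks-keyservers.net:80"
DOCKER_LIST="/etc/apt/sources.list.d/docker.list"

# Print the APT source entry for an Ubuntu codename (default: xenial).
docker_apt_entry() {
  echo "deb https://apt.dockerproject.org/repo ubuntu-${1:-xenial} main"
}

setup_docker_repo() {
  local codename="${1:-xenial}"

  # Step 3: refresh package lists; let APT use HTTPS and trust CA certificates.
  sudo apt-get update
  sudo apt-get install apt-transport-https ca-certificates

  # Step 4: fetch the repository signing key.
  sudo apt-key adv --keyserver "$DOCKER_KEYSERVER" --recv-keys "$DOCKER_KEY_ID"

  # Steps 5-6: overwrite docker.list with the single entry for this release.
  docker_apt_entry "$codename" | sudo tee "$DOCKER_LIST" > /dev/null

  # Step 7: update the index again so APT sees the new repository.
  sudo apt-get update

  # Step 8: the docker-engine candidate should come from apt.dockerproject.org.
  apt-cache policy docker-engine

  # Step 9: recommended extra kernel packages (let Docker use the aufs driver).
  sudo apt-get install "linux-image-extra-$(uname -r)" linux-image-extra-virtual
}
```

On Xenial the whole sequence would be `setup_docker_repo xenial`. Writing the entry through `sudo tee` is used because a plain `>` redirection would run without root privileges and fail to write under /etc.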

Install the latest Docker version

1. Update your APT package index, as shown in the screenshot below.

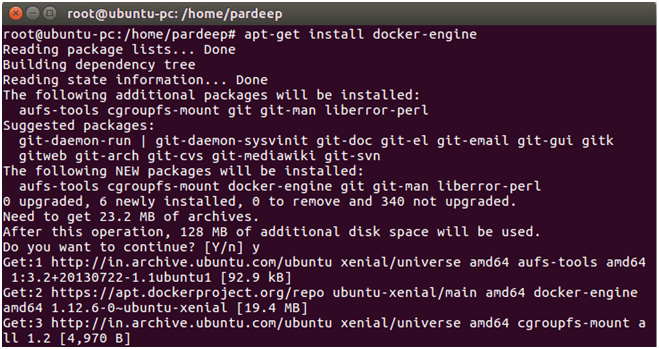

2. Install the docker-engine package, as shown in the screenshot below.

3. Start the Docker daemon, as shown in the screenshot below.

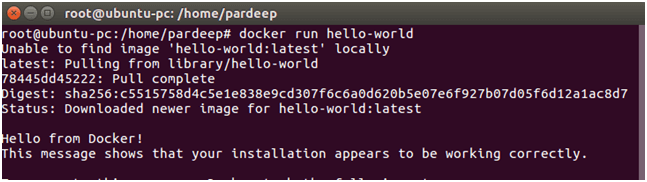

4. Verify that Docker is installed correctly by running the hello-world image, as shown in the screenshot below.

The command above downloads a test image and runs it in a container. When the container runs, it prints a message and exits.
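The four installation steps can be sketched as shell functions as well. This assumes the legacy `docker-engine` package from Docker's pre-2017 Ubuntu instructions and the `service` command for starting the daemon; the function names `install_docker_engine` and `verify_docker` are hypothetical. The check relies on the "Hello from Docker!" line that the hello-world image prints when it runs successfully.

```bash
#!/usr/bin/env bash
# Sketch of "Install the latest Docker version", assuming the legacy
# docker-engine package and the `service` wrapper for the daemon.
install_docker_engine() {
  sudo apt-get update                  # step 1: refresh the package index
  sudo apt-get install docker-engine   # step 2: install Docker Engine
  sudo service docker start            # step 3: start the Docker daemon
}

# Step 4: run hello-world and look for the greeting it prints on success.
# Single quotes keep interactive bash from treating "!" as history expansion.
verify_docker() {
  sudo docker run hello-world | grep -q 'Hello from Docker!'
}
```

A typical run is `install_docker_engine && verify_docker && echo "Docker is working"`. Checking the output text, rather than only the exit status, also confirms that the image was pulled and the container actually produced output.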
