Exploitation and Exploration in Machine Learning

Exploitation and exploration are key concepts in reinforcement learning that help an agent make better online decisions. Reinforcement learning is a machine learning method in which an intelligent agent (a computer program) learns to interact with an environment and to take actions that maximize reward in a given situation. It is currently used in many industries, including automotive, healthcare, medicine, and education.

In reinforcement learning, the agent does not initially know the states, the actions available in each state, the associated rewards, or the transitions to the next state; it learns them by exploring the environment. Because the agent's knowledge of states, actions, rewards, and resulting states is always partial, it faces the exploration-exploitation dilemma. In this topic, "Exploitation and Exploration in Machine Learning," we will discuss both terms in detail with suitable examples. Before that, let's first understand reinforcement learning in ML.

What is Reinforcement Learning?

Unlike supervised and unsupervised learning, reinforcement learning is a feedback-based approach in which an agent learns by performing actions and observing their outcomes. Depending on whether an action was good or bad, the agent receives positive or negative feedback: a reward for each positive outcome and a penalty for each negative one.

Key points in Reinforcement Learning

Hence, we can define reinforcement learning as: "Reinforcement learning is a type of machine learning technique in which an intelligent agent (a computer program) interacts with the environment, explores it by itself, and takes actions within it."

What are Exploration and Exploitation in Reinforcement Learning

Before describing exploration and exploitation in technical terms, let's first understand them in simple words. Whenever an agent must choose between continuing with what it already does and trying something new, it faces the exploration-exploitation dilemma, because its knowledge of states, actions, rewards, and resulting states is always partial.

Exploitation in Reinforcement Learning

Exploitation is a greedy approach in which the agent tries to collect more reward by acting on its estimated values rather than the actual (unknown) values. In other words, the agent makes the best decision it can based on current information.

Exploration in Reinforcement Learning

In exploration, by contrast, the agent focuses on improving its knowledge about each action rather than on collecting reward immediately, so that it can obtain long-term benefits. The agent gathers more information in order to make the best overall decision.

Examples of Exploitation and Exploration in Machine Learning

Let's understand exploitation and exploration with some real-world examples.

Coal mining: Suppose persons A and B are digging in a coal mine in the hope of finding a diamond. Person B finds a diamond before person A and walks off happily. Seeing this, person A gets a bit greedy and thinks he too might find a diamond at the spot where person B was digging.
This action by person A is called a greedy action, and the corresponding policy is known as a greedy policy. But person A did not know that a bigger diamond was buried where he had initially been digging, so the greedy policy fails in this situation. In this example, person A only has knowledge of the place where person B was digging and has no knowledge of what lies beyond that depth. In reality, the diamond could be buried where he was digging initially or somewhere else entirely. With only this partial knowledge about rewards, a reinforcement learning agent faces a dilemma: exploit its partial knowledge to receive some reward, or explore unknown actions that could yield much more. The two cannot be done at the same time, but the issue can be resolved by using the epsilon-greedy policy (explained below).

There are a few other examples of exploitation and exploration in machine learning:

Example 1: Consider ordering food online, where you have two options for selecting a restaurant. The first option is to choose your favorite restaurant, from which you have ordered before; this is exploitation, because you rely only on what you already know about that restaurant. The other option is to try a new restaurant to discover new varieties and tastes; this is exploration. The food may well be better at your favorite restaurant, but it is also possible that the new restaurant is more delicious.

Example 2: Suppose there is a game-playing platform where you can play chess against robots. To win, you have two choices: play the move that you believe is best, or play an experimental move.
The move you believe is best may indeed be the strongest, but a new move might turn out to be more strategic. Here, the first choice is exploitation, where you rely on your existing game strategy, and the second is exploration, where you extend your knowledge by trying a new move.

Epsilon Greedy Policy

The epsilon-greedy policy is a technique for maintaining a balance between exploitation and exploration. A very simple way to choose between the two is to select randomly: exploit most of the time and explore a little.

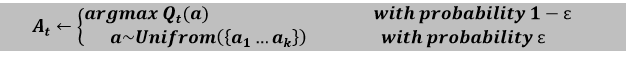

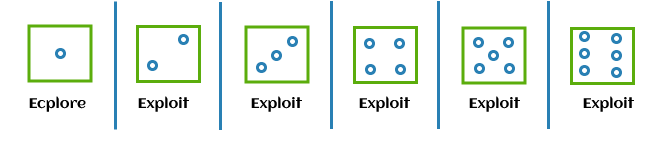

In the epsilon-greedy strategy, an exploration rate, epsilon (denoted ε), is initially set to 1. This exploration rate is the probability that the agent explores the environment rather than exploits it, so with ε = 1 the agent starts out by exploring. As the agent learns more about the environment, ε decays at a defined rate, so exploration becomes less and less likely and the agent becomes increasingly greedy, exploiting what it has learned.

To decide whether the agent explores or exploits at each step, we generate a random number between 0 and 1 and compare it to ε. If the random number is greater than ε, the next action is chosen by exploitation; otherwise, it is chosen by exploration. When exploiting, the agent takes the action with the highest Q-value for the current state.

Example: We can understand this with a dice roll. Say the agent explores if the die lands on 1 and exploits otherwise. This is an epsilon-greedy action with ε = 1/6, the probability of rolling a 1. It can be expressed as follows:

$$A_t = \begin{cases} \arg\max_a Q_t(a) & \text{with probability } 1 - \varepsilon \\ \text{a random action} & \text{with probability } \varepsilon \end{cases}$$

In the above formula, the action selected at attempt t is a greedy action (exploit) with probability 1 − ε or a random action (explore) with probability ε.
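The selection rule described above can be sketched in Python. This is a minimal illustration under stated assumptions, not a definitive implementation: the function names `epsilon_greedy_action` and `decay_epsilon`, the multiplicative decay schedule, and the values `decay_rate=0.99` and `min_epsilon=0.01` are hypothetical choices made for the example. The text only says that ε starts at 1 and decreases at a defined rate.

```python
import random


def epsilon_greedy_action(q_values, epsilon, rng=random):
    """Pick an action index from a list of estimated Q-values."""
    # Draw a number in [0, 1); exploit when it is greater than epsilon.
    if rng.random() > epsilon:
        # Exploit: the action with the highest estimated Q-value.
        return max(range(len(q_values)), key=lambda a: q_values[a])
    # Explore: any action, chosen uniformly at random.
    return rng.randrange(len(q_values))


def decay_epsilon(epsilon, decay_rate=0.99, min_epsilon=0.01):
    """Shrink epsilon multiplicatively, never below min_epsilon.

    The schedule and its default values are assumptions for illustration.
    """
    return max(min_epsilon, epsilon * decay_rate)


# Illustrative Q-value estimates for three actions in one state.
q_values = [0.2, 0.5, 0.1]
epsilon = 1.0  # start fully exploratory, as in the text
for step in range(5):
    action = epsilon_greedy_action(q_values, epsilon)
    epsilon = decay_epsilon(epsilon)
```

With ε = 1 the drawn number is never greater than ε, so the first choice is always exploratory. Passing a seeded generator such as `random.Random(0)` as `rng` makes a run reproducible.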

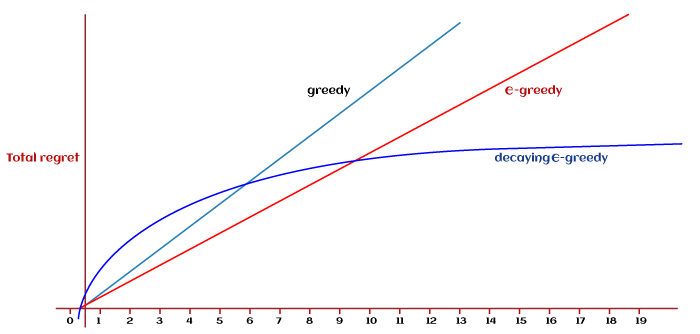

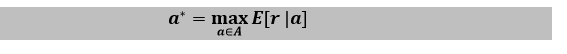

Notion of Regret

Whenever we do something and do not get the outcome we hoped for, we regret our decision. Recall the earlier example of choosing a restaurant: if we choose a new restaurant instead of our favorite and the food quality and overall experience are poor, we regret the decision and consider what we paid a complete loss. If we order from the same restaurant again, the regret grows along with the number of losses. Reinforcement learning methods, however, can reduce the amount of loss and the level of regret.

Regret in Reinforcement Learning

Before defining regret in reinforcement learning, we need the optimal action a*, which is the action that gives the highest expected reward. It is given as follows:

$$a^* = \arg\max_a \mathbb{E}[r \mid a]$$

Hence, the regret in reinforcement learning can be defined as the difference between T times the expected reward of the optimal action a* and the sum, from t = 1 to T, of the expected reward of each action a_t actually taken. It can be expressed as follows:

$$L_T = T\,\mathbb{E}[r \mid a^*] - \sum_{t=1}^{T} \mathbb{E}[r \mid a_t]$$

Conclusion

Balancing exploitation and exploration improves several aspects of reinforcement learning, such as performance, learning speed, and decision making, all of which matter when training an agent. A disadvantage is that both techniques must be tuned to these aspects and to the specific environment, which can mean more supervision of the agent. This topic covered some of the most commonly used exploration techniques in reinforcement learning. From the examples above, we can conclude that exploration methods should be preferred in order to reduce regret and make learning faster and more effective.
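As a concrete illustration of the regret definition above, the following sketch computes the regret of a fixed sequence of actions when the expected reward of every action is known. The function name `expected_regret` and the reward values for the three restaurants are hypothetical and chosen only for illustration. In practice the agent does not know these expected rewards, so regret is an analysis tool rather than something the agent computes.

```python
def expected_regret(mean_rewards, actions):
    """Regret L_T = T * E[r | a*] - sum over t of E[r | a_t].

    mean_rewards[a] is the (assumed known) expected reward of action a;
    actions is the sequence of action indices chosen at steps 1..T.
    """
    best = max(mean_rewards)  # E[r | a*], the optimal action's expected reward
    return len(actions) * best - sum(mean_rewards[a] for a in actions)


# Hypothetical expected rewards for three restaurants (illustrative values).
mean_rewards = [0.25, 0.5, 0.75]

always_best = [2] * 4   # always order from the best restaurant
always_first = [0] * 4  # always order from the first restaurant

print(expected_regret(mean_rewards, always_best))   # 0.0
print(expected_regret(mean_rewards, always_first))  # 2.0
```

An agent that always picks the optimal action accumulates no regret, while one that keeps exploiting a poor choice accumulates regret linearly in the number of steps.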
