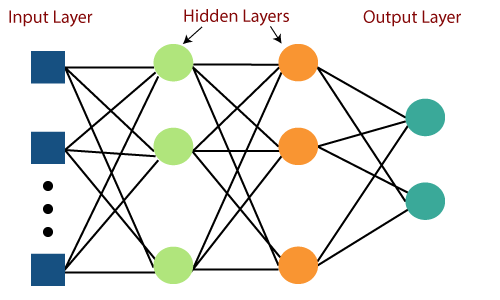

Multi-layer Perceptron in TensorFlow

A multi-layer perceptron (MLP) is one of the more elaborate architectures among classic artificial neural networks. It is essentially formed from multiple layers of perceptrons. TensorFlow is a very popular deep learning framework, and this notebook is a guide to building a neural network with this library. To understand what a multi-layer perceptron really is, it also helps to develop one from scratch using NumPy. A pictorial representation of multi-layer perceptron learning is shown below:

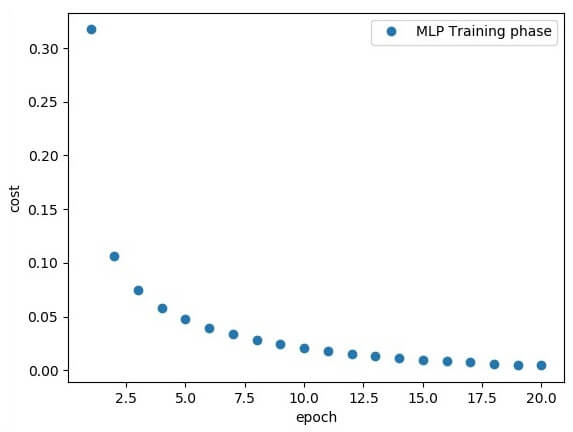
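To make the layered structure in the figure concrete, here is a minimal from-scratch sketch of an MLP forward pass in NumPy. The layer sizes (784 inputs for flattened 28x28 images, 64 hidden units, 10 output classes), the sigmoid and softmax activations, and the helper names `init_mlp` and `forward` are illustrative assumptions, not something the tutorial specifies.

```python
import numpy as np

def sigmoid(z):
    # Element-wise logistic activation for the hidden layers.
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    # Row-wise softmax; subtracting the row maximum keeps exp() numerically stable.
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def init_mlp(layer_sizes, seed=0):
    # One (weights, bias) pair per pair of consecutive layers.
    rng = np.random.default_rng(seed)
    params = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        W = rng.normal(0.0, 0.1, size=(n_in, n_out))
        b = np.zeros(n_out)
        params.append((W, b))
    return params

def forward(params, X):
    # Propagate a batch X (one row per example) through every layer.
    a = X
    for W, b in params[:-1]:
        a = sigmoid(a @ W + b)      # hidden layers
    W, b = params[-1]
    return softmax(a @ W + b)       # output layer: class probabilities

# Assumed sizes: 784 inputs, 64 hidden units, 10 classes.
params = init_mlp([784, 64, 10])
X = np.random.default_rng(1).random((5, 784))   # 5 fake "images"
probs = forward(params, X)
print(probs.shape)
```

Each row of `probs` is a probability distribution over the assumed 10 classes. The weights are random here, so the predictions carry no meaning until the network is trained.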

MLP networks are used for supervised learning. A multilayer perceptron (MLP) is a feedforward artificial neural network that generates a set of outputs from a set of inputs. An MLP is characterized by several layers of nodes connected as a directed graph between the input and output layers. The typical learning algorithm for training MLP networks is backpropagation. MLP is a deep learning method. Now we focus on implementing an MLP for an image classification problem.
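As a rough illustration of how backpropagation trains a feedforward network, the sketch below fits a one-hidden-layer MLP with plain gradient descent in NumPy. The toy dataset, the layer sizes, the sigmoid hidden activation, the cross-entropy loss, and the learning rate of 0.1 are all assumptions chosen for brevity rather than details taken from this tutorial.

```python
import numpy as np

def forward(params, X):
    # Forward pass: sigmoid hidden layer followed by a softmax output layer.
    W1, b1, W2, b2 = params
    h = 1.0 / (1.0 + np.exp(-(X @ W1 + b1)))
    logits = h @ W2 + b2
    logits = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(logits)
    probs = exp / exp.sum(axis=1, keepdims=True)
    return h, probs

def loss_fn(params, X, Y):
    # Mean cross-entropy between one-hot targets Y and predicted probabilities.
    _, probs = forward(params, X)
    return -np.mean(np.sum(Y * np.log(probs), axis=1))

def backprop(params, X, Y):
    # Backward pass: apply the chain rule from the output layer back to the input.
    W1, b1, W2, b2 = params
    h, probs = forward(params, X)
    n = X.shape[0]
    d_logits = (probs - Y) / n          # gradient of the loss w.r.t. the logits
    dW2 = h.T @ d_logits
    db2 = d_logits.sum(axis=0)
    d_h = d_logits @ W2.T               # error propagated to the hidden layer
    d_pre = d_h * h * (1.0 - h)         # multiply by the sigmoid derivative
    dW1 = X.T @ d_pre
    db1 = d_pre.sum(axis=0)
    return [dW1, db1, dW2, db2]

# Toy supervised problem (assumed): the label is 1 when the first feature exceeds 0.5.
rng = np.random.default_rng(0)
X = rng.random((32, 4))
labels = (X[:, 0] > 0.5).astype(int)
Y = np.eye(2)[labels]                   # one-hot targets

params = [rng.normal(0, 0.1, (4, 8)), np.zeros(8),
          rng.normal(0, 0.1, (8, 2)), np.zeros(2)]

losses = []
for step in range(200):
    losses.append(loss_fn(params, X, Y))
    grads = backprop(params, X, Y)
    # Gradient descent update with an assumed learning rate of 0.1.
    params = [p - 0.1 * g for p, g in zip(params, grads)]
losses.append(loss_fn(params, X, Y))
print(losses[0], losses[-1])
```

The first printed value is the loss before training and the second is the loss after 200 updates. A framework such as TensorFlow derives the gradients in `backprop` automatically, which is the main convenience it adds over this hand-written version.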

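Creating an interactive session

We have two basic options when using TensorFlow to run our code: build the graph first and then run it in a session, or create an interactive session and run operations on the fly.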

For this first part, we will use the interactive session, which is more suitable for an environment like a Jupyter notebook.

Creating placeholders

It is a best practice to create placeholders before variable assignments when using TensorFlow. Here we'll create placeholders for the inputs ("Xs") and outputs ("Ys"). Placeholder "X" represents the space allocated for the input, that is, the images.
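The interactive session and the two placeholders might be set up roughly as follows. Sessions and placeholders are TensorFlow 1.x graph-mode constructs, so this sketch assumes a TensorFlow 2.x install and reaches them through `tf.compat.v1`. The shapes (784 values per flattened image, 10 output classes) and the small `row_mean` operation used to exercise the placeholder are illustrative assumptions.

```python
import numpy as np
import tensorflow as tf

# Placeholders and sessions need graph mode, so eager execution is switched off.
tf.compat.v1.disable_eager_execution()

# An interactive session installs itself as the default session, which lets
# tensors be evaluated on the fly with .eval(), convenient in a notebook.
sess = tf.compat.v1.InteractiveSession()

# Placeholder "X": space for a batch of flattened images (assumed 784 values each).
# The leading None leaves the batch size open.
X = tf.compat.v1.placeholder(tf.float32, shape=[None, 784], name="X")

# Placeholder "Y": space for the one-hot target labels (assumed 10 classes).
Y = tf.compat.v1.placeholder(tf.float32, shape=[None, 10], name="Y")

# A tiny operation that reads from X, only to show how a placeholder is fed.
row_mean = tf.reduce_mean(X, axis=1)
result = row_mean.eval(feed_dict={X: np.ones((3, 784), dtype=np.float32)})
print(result.shape)

sess.close()
```

A placeholder holds no data of its own. Values are supplied through `feed_dict` each time an operation that depends on it is evaluated.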
