PySpark UDFThe Spark SQL provides the PySpark UDF (User Define Function) that is used to define a new Column-based function. It extends the vocabulary of Spark SQL's DSL for transforming Datasets. Register a function as a UDFWe can optionally set the return type of UDF. The default return type is StringType. Consider the following example: Call the UDF functionPySpark UDF's functionality is same as the pandas map() function and apply() function. These functions are used for panda's series and dataframe. In the below example, we will create a PySpark dataframe. The code will print the Schema of the Dataframe and the dataframe. Output root |-- integers: long (nullable = true) |-- floats: double (nullable = true) |-- integer_arrays: array (nullable = true) | |-- element: long (containsNull = true) +--------+------+--------------+ |integers|floats|integer_arrays| +--------+------+--------------+ | 1| -1.0| [1, 2]| | 2| 0.5| [3, 4, 5]| | 3| 2.7| [6, 7, 8, 9]| +--------+------+--------------+ Evaluation Order and null checkingPySpark SQL doesn't give the assurance that the order of evaluation of subexpressions remains the same. It is not necessary to evaluate Python input of an operator or function left-to-right or in any other fixed order. For example, logical AND and OR expressions do not have left-to-right "short-circuiting" semantics. Therefore, it is quite unsafe to depend on the order of evaluation of a Boolean expression. For example, the order of WHERE and HAVING clauses, since such expressions and clauses can be reordered during query optimization and planning. If a UDF depends on short-circuiting semantics (order of evaluation) in SQL for null checking, there's no surety that the null check will happen before invoking the UDF. Primitive type OutputsLet's consider a function square() that squares a number, and register this function as Spark UDF. Now we convert it into the UDF. While registering, we have to specify the data type using the pyspark.sql.types. The problem with the spark UDF is that it doesn't convert an integer to float, whereas, Python function works for both integer and float values. A PySpark UDF will return a column of NULLs if the input data type doesn't match the output data type. Let's consider the following program: Output: +--------+------+-----------+-------------+ |integers|floats|int_squared|float_squared| +--------+------+-----------+-------------+ | 1| -1.0| 1| null| | 2| 0.5| 4| null| | 3| 2.7| 9| null| +--------+------+-----------+-------------+ As we can see the above output, it returns null for the float inputs. Now have a look on another example. Register with UDF with float type outputOutput: +--------+------+-----------+-------------+ |integers|floats|int_squared|float_squared| +--------+------+-----------+-------------+ | 1| -1.0| null| 1.0| | 2| 0.5| null| 0.25| | 3| 2.7| null| 7.29| +--------+------+-----------+-------------+ Specifying float type output using the Python functionHere we force the output to be float also for the integer inputs. Output: +--------+------+-----------+-------------+ |integers|floats|int_squared|float_squared| +--------+------+-----------+-------------+ | 1| -1.0| 1.0| 1.0| | 2| 0.5| 4.0| 0.25| | 3| 2.7| 9.0| 7.29| +--------+------+-----------+-------------+ Composite Type OutputIf the output of Python functions is in the form of list, then the input value must be a list, which is specified with ArrayType() when registering the UDF. Consider the following code: Output: +--------------+------------------------+ |integer_arrays| Some Common UDF Problem

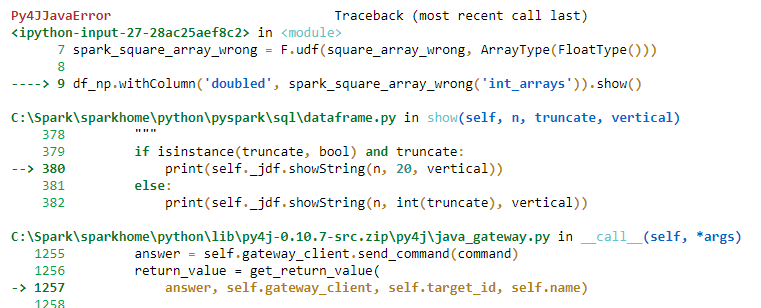

It is the most common exception while working with the UDF. It comes from a mismatched data type between Python and Spark. If the Python function uses a data type from a Python module like numpy.ndarray, then the UDF throws an exception. Output: +----------+ |int_arrays| +----------+ | [1, 2, 3]| | [4, 5, 6]| +----------+ In below example, we are creating a function which returns nd.ndarray. Their values are also Numpy objects Numpy.int32 instead of Python primitives. Output: array([1, 4, 9], dtype=int32) If we execute the below code, it will throw an exception Py4JavaError. Output:

The solution of this type of exception is to convert it back to a list whose values are Python primitives. Output: +----------+------------+ |int_arrays| squared| +----------+------------+ | [1, 2, 3]| [1, 4, 9]| | [4, 5, 6]|[16, 25, 36]| +----------+------------+ In the above code, we described the solution of the exception. Now do it your own and observe the difference between both programs.

PySpark has another demerit; it takes a lot of time to run compared to the Python counterpart. The small data-size in term of the file size is one of the reasons for the slowness. Spark sends the whole data frame to one and only one executor and leaves other executer waiting. The solution is to repartition the dataframe. For example: When we repartitioned the data, each executer processes one partition at a time, and thus reduces the execution time.

Next TopicPySpark RDD

|

For Videos Join Our Youtube Channel: Join Now

For Videos Join Our Youtube Channel: Join Now

Feedback

- Send your Feedback to [email protected]

Help Others, Please Share