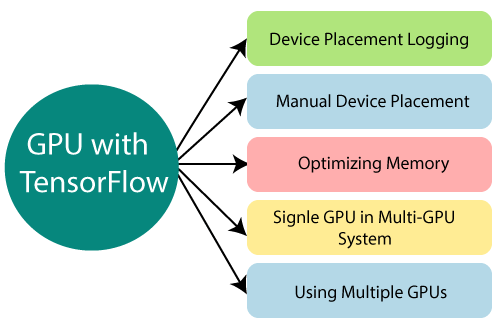

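TensorFlow Single and Multiple GPU

A typical system can have multiple devices for computation. TensorFlow supports both CPUs and GPUs, and each device is represented by a string. For example:

- `"/cpu:0"`: the CPU of the machine.
- `"/device:GPU:0"`: the first GPU of the machine, if it has one.
- `"/device:GPU:1"`: the second GPU of the machine.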
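The sketch below lists the devices TensorFlow can see and the string names it uses for them. It assumes TensorFlow 2.1 or later, where `tf.config.list_physical_devices` and `tf.config.list_logical_devices` are available. The logical device names are the strings accepted by `tf.device`. The shorter `"/cpu:0"` form shown above is also accepted.

```python
import tensorflow as tf

# Physical devices: the hardware TensorFlow detected on this machine.
physical_cpus = tf.config.list_physical_devices("CPU")
physical_gpus = tf.config.list_physical_devices("GPU")
for device in physical_cpus + physical_gpus:
    # e.g. "CPU /physical_device:CPU:0" or "GPU /physical_device:GPU:0"
    print(device.device_type, device.name)

# Logical devices: the names usable with tf.device(), e.g. "/device:CPU:0".
logical_names = [device.name for device in tf.config.list_logical_devices()]
print(logical_names)
```

On a machine without a GPU, `physical_gpus` is simply an empty list.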

Device Placement Logging

To find out which device handles each operation, we can create a session with the `log_device_placement` configuration option set to `True`. TensorFlow then logs the device assigned to every operation when the session runs.

Manual Device Placement

Sometimes we want to decide which device an operation runs on. We can do this by creating a context with `tf.device` that names the device, such as a CPU or a GPU, that should perform the computation. For example, constants `a` and `b` created inside a `with tf.device('/cpu:0'):` block are assigned to `cpu:0`. An operation defined outside that block, such as a matrix multiplication of `a` and `b`, has no explicit device. TensorFlow therefore chooses a GPU by default if one is available, and copies the multi-dimensional arrays (tensors) between devices as needed.

Optimizing TensorFlow GPU Memory

By default, TensorFlow maps nearly all of the memory of every GPU visible to the process. This reduces memory fragmentation and makes more efficient use of the relatively scarce GPU memory. When a process should take less memory, TensorFlow offers two configuration options, both set through `ConfigProto`:

- `allow_growth` allocates only as much GPU memory as the process needs at runtime. It starts with a small allocation and extends it as more memory is required.
- `per_process_gpu_memory_fraction` sets the fraction of each GPU's total memory that the process may allocate. For example, a value of `0.4` allocates 40% of the memory. This option is useful only when we already know the memory requirements of the computation and are sure they will not change during processing.

Single GPU in Multi-GPU System

In a multi-GPU system, TensorFlow selects the GPU with the lowest ID by default. To run an operation on a different GPU, we must specify that GPU explicitly with `tf.device`.

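The session options and manual placement described in this section can be sketched together as follows. This is a minimal example, assuming TensorFlow 2.x, that reaches the session-based API through `tf.compat.v1`.

- The constants are pinned to the CPU.
- The matrix multiplication is left unpinned, so it lands on the lowest-numbered GPU when one is present and on the CPU otherwise.
- `per_process_gpu_memory_fraction=0.4` follows the 40% example above.
- `allow_soft_placement` is an extra assumption, included so the sketch also runs on a CPU-only machine.

```python
import tensorflow as tf

# GPU memory options (TF1-compatible API). They have no effect without a GPU.
gpu_options = tf.compat.v1.GPUOptions(
    per_process_gpu_memory_fraction=0.4,  # cap each visible GPU at 40% of its memory
    # allow_growth=True,                  # alternative: start small, grow on demand
)

config = tf.compat.v1.ConfigProto(
    log_device_placement=True,  # log which device runs each operation
    allow_soft_placement=True,  # fall back to an existing device if a requested one is missing
    gpu_options=gpu_options,
)

graph = tf.Graph()
with graph.as_default():
    # Manual placement: both constants are assigned to the CPU.
    with tf.device("/cpu:0"):
        a = tf.constant([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], shape=[2, 3], name="a")
        b = tf.constant([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], shape=[3, 2], name="b")
    # No explicit device: TensorFlow picks one (a GPU if available, else the CPU)
    # and copies a and b across devices as needed.
    c = tf.matmul(a, b, name="c")

with tf.compat.v1.Session(graph=graph, config=config) as sess:
    result = sess.run(c)

print(result)  # the 2x2 product [[22, 28], [49, 64]]
```

In eager TensorFlow 2.x code, the rough equivalents are:

- `tf.debugging.set_log_device_placement(True)` for placement logging.
- `tf.config.set_soft_device_placement(True)` for soft placement.
- `tf.config.experimental.set_memory_growth(gpu, True)` for memory growth.
- `tf.config.set_logical_device_configuration` with a `memory_limit` for a fixed cap.

The memory settings must be applied before the GPUs are initialized.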
If the GPU specified by the user does not exist, TensorFlow raises an `InvalidArgumentError`. To have TensorFlow fall back to an existing, supported device in that case, we can set `allow_soft_placement` to `True` in the configuration options when creating the session.

Using Multiple GPUs in TensorFlow

We are already familiar with towers in TensorFlow. Each tower can be assigned to a GPU, which gives a multi-tower model for working with multiple GPUs. We can test such a multi-GPU model on a simple dataset such as CIFAR-10 to experiment with and understand how TensorFlow works with GPUs.
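The tower idea can be sketched as follows. The example assumes TensorFlow 2.x in eager mode.

- One tower is built per visible GPU, falling back to a single CPU tower when no GPU exists.
- For simplicity, each tower computes the same matrix product.
- The partial results are summed on the CPU with `tf.add_n`.

In a real model, each tower would instead receive a different slice of the input batch, and the towers' gradients would be averaged.

```python
import tensorflow as tf

# One tower per visible GPU; use a single CPU "tower" if there are no GPUs.
tower_devices = [d.name for d in tf.config.list_logical_devices("GPU")] or ["/device:CPU:0"]

partial_results = []
for device_name in tower_devices:
    with tf.device(device_name):
        # Each tower runs its own copy of the computation on its own device.
        a = tf.constant([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], shape=[2, 3])
        b = tf.constant([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], shape=[3, 2])
        partial_results.append(tf.matmul(a, b))

# Combine the towers' outputs on the CPU.
with tf.device("/cpu:0"):
    total = tf.add_n(partial_results)

print(tower_devices)
print(total.numpy())
```

Writing towers by hand makes the device assignment explicit. In TensorFlow 2.x, `tf.distribute.MirroredStrategy` automates this pattern of replicating a model across the available GPUs and combining their results.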
