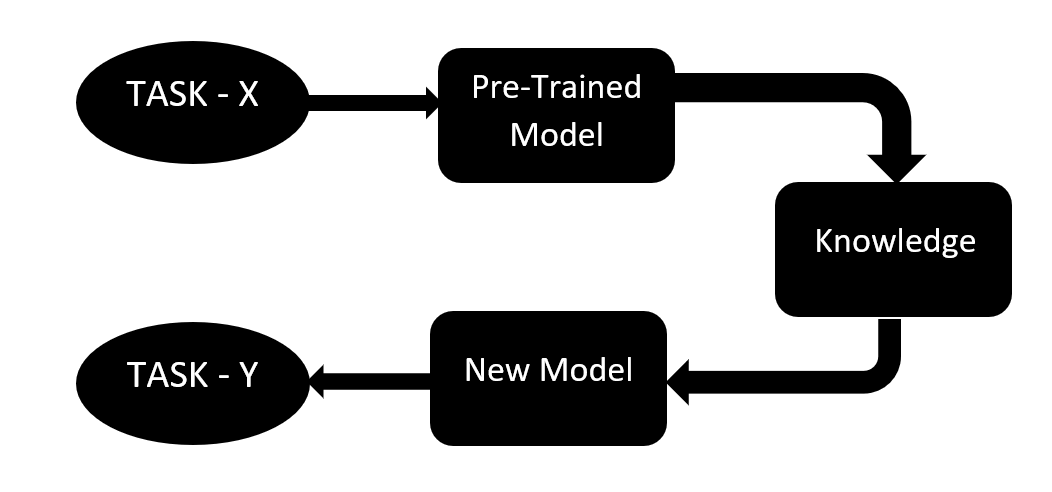

Introduction to Transfer Learning in ML

Humans are extremely skilled at transferring knowledge from one task to another. When we face a new problem or task, we recognize it and apply the relevant knowledge gained from earlier learning experiences, which lets us complete the task quickly and efficiently. A good example is someone who can ride a bicycle and is asked to ride a motorbike. Their cycling experience helps: they already know how to balance and steer, so the motorbike is easier for them than for a complete beginner. Such lessons are extremely useful in real life because they improve our skills and let us build on experience.

The same idea was brought into machine learning as transfer learning: knowledge gained on a source task is used to solve a problem in a target task. Although most machine learning algorithms are designed for a single task, there is ongoing interest in developing transfer learning algorithms.

Why Transfer Learning?

One curious feature that many deep neural networks trained on images share is that their early layers learn to detect edges, colours, intensity variations, and other low-level features. These features are not specific to any particular task or dataset: whether we are detecting lions or cars, the same low-level features must be detected, regardless of the exact image dataset or cost function. Features learned on one task, such as detecting lions, can therefore be reused on another, such as detecting humans. This is exactly what transfer learning is. Nowadays it is rare to train a whole convolutional neural network from scratch. Instead, it is common to take a model pre-trained on a large and varied set of images for a similar task, such as ImageNet (1.2 million images in 1,000 categories), and use its features to solve a new task.

Block Diagram:

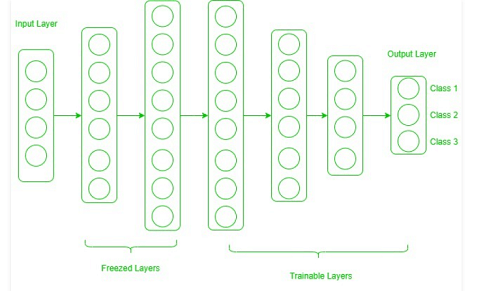

Freezing layers is characteristic of transfer learning. A layer that is excluded from training is called a "frozen layer"; it can be a convolutional layer or any other hidden layer. Training does not update the weights of frozen layers, while layers that are not frozen are trained as usual.

Transfer learning solves a problem using knowledge from a pre-trained model, and there are two ways to use that knowledge. The first is to freeze some layers of the pre-trained model and train the remaining layers on our new dataset. The second is to build a new model and take some features out of the layers of the pre-trained model for use in it. In both cases we keep some of the previously learned features and train the rest. This ensures that only the features the two tasks have in common are taken from the pre-trained model, while the rest of the model is trained to adapt to the new dataset.

Frozen and Trainable Layers:
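To make the distinction between frozen and trainable layers concrete, here is a minimal sketch in plain Python. It is not taken from any particular framework: the tiny two-layer network, the "pre-trained" weights in `w_frozen`, and the toy dataset are all assumptions made up for illustration. The point it shows is that gradient descent updates only the trainable head, while the frozen layer's weights stay exactly as they were.

```python
import copy

def forward(x, w_frozen, w_head):
    """Frozen ReLU feature layer followed by a trainable linear head."""
    hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in w_frozen]
    output = sum(w * h for w, h in zip(w_head, hidden))
    return hidden, output

def mean_squared_error(data, w_frozen, w_head):
    errors = [(forward(x, w_frozen, w_head)[1] - target) ** 2 for x, target in data]
    return sum(errors) / len(errors)

def train_head(data, w_frozen, w_head, lr=0.2, epochs=300):
    """Stochastic gradient descent that updates only the head weights."""
    w_head = list(w_head)
    for _ in range(epochs):
        for x, target in data:
            hidden, output = forward(x, w_frozen, w_head)
            error = output - target
            # Gradient of 0.5 * error**2 w.r.t. head weight j is error * hidden[j].
            w_head = [w - lr * error * h for w, h in zip(w_head, hidden)]
            # w_frozen receives no update: this is what makes the layer frozen.
    return w_head

# Assumed "pre-trained" feature weights (made up for this illustration).
w_frozen = [[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]]
frozen_before = copy.deepcopy(w_frozen)

# Toy target task as (input, target) pairs, also made up.
data = [
    ((1.0, 0.0), 2.5),
    ((0.0, 1.0), -1.0),
    ((1.0, 1.0), 1.0),
    ((0.5, 0.2), 0.95),
    ((0.2, 0.8), -0.4),
]

w_head_initial = [0.0, 0.0, 0.0]
loss_before = mean_squared_error(data, w_frozen, w_head_initial)
w_head_trained = train_head(data, w_frozen, w_head_initial)
loss_after = mean_squared_error(data, w_frozen, w_head_trained)

print("frozen layer unchanged:", w_frozen == frozen_before)
print("loss before:", loss_before, "loss after:", loss_after)
```

Deep learning frameworks expose the same idea as a per-layer or per-parameter switch, for example `layer.trainable = False` in Keras or `requires_grad = False` on a parameter in PyTorch, so in practice the update rule does not need to be written by hand.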

One might wonder how to decide which layers to freeze and which to train. The rule of thumb is simple: the more features we want to inherit from a pre-trained model, the more layers we freeze. For instance, suppose the pre-trained model detects some species of flowers and we need to detect new species. The new dataset will share many features with the data the model was trained on, so we freeze most of the layers and retrain only a few, making the most of the model's knowledge. Consider another example: if a model is already trained to detect people in images and we want to use that knowledge to detect cars, freezing many layers is not a good idea. Doing so would carry over not only low-level features but also high-level features such as noses, eyes, and mouths, which are useless for the new dataset (car detection). Instead, we reuse only the low-level features of the base network and train the rest of the network on the new dataset. In general, the choice depends on how the target task's dataset differs from the base network's in size and similarity.
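The reasoning above can be sketched as a small helper. This is only a hypothetical heuristic, not an established rule: the `task_similarity` score between 0 and 1, the proportional cut-off, and the layer names are all assumptions introduced for illustration. It freezes more of the early layers when the new task resembles the original one, and fewer when it does not.

```python
def layers_to_freeze(num_layers, task_similarity):
    """Hypothetical heuristic: freeze a share of the earliest layers
    proportional to how similar the new task is to the original one."""
    if num_layers < 2:
        raise ValueError("need at least two layers to split")
    if not 0.0 <= task_similarity <= 1.0:
        raise ValueError("task_similarity must be between 0 and 1")
    count = round(num_layers * task_similarity)
    # Always freeze at least the first layer (low-level features) and
    # always leave at least the last layer trainable so the model can adapt.
    return max(1, min(num_layers - 1, count))

def split_layers(layer_names, task_similarity):
    """Return (frozen, trainable) lists, freezing from the input side."""
    k = layers_to_freeze(len(layer_names), task_similarity)
    return layer_names[:k], layer_names[k:]

# Made-up layer names for an image model.
layers = ["conv1", "conv2", "conv3", "conv4", "fc1", "fc2"]

# Flowers -> new flower species: similar task, so most layers stay frozen.
flowers_frozen, flowers_trainable = split_layers(layers, 0.8)

# People -> cars: dissimilar task, so only the low-level layers stay frozen.
cars_frozen, cars_trainable = split_layers(layers, 0.3)

print("flowers:", flowers_frozen, "|", flowers_trainable)
print("cars:   ", cars_frozen, "|", cars_trainable)
```

In a real project the similarity score would have to come from judgment or experiment, and the number of frozen layers is usually tuned by validating a few alternatives rather than computed from a formula.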

Conclusion

Transfer learning can be a quick and effective way to solve a problem. It gives us a direction to start from, and in many cases the best results are obtained by using it.
