AlexNet in Artificial Intelligence

In the landscape of artificial intelligence and deep learning, the name "AlexNet" stands as a pivotal milestone that has shaped the trajectory of modern machine learning research. Developed by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton, AlexNet marked a turning point in the field, pushing the boundaries of image classification, paving the way for the resurgence of neural networks, and inspiring numerous subsequent advancements in convolutional neural networks (CNNs).

Birth of AlexNet

The emergence of AlexNet can be traced back to the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) in 2012. This annual competition aimed to evaluate algorithms for object detection and image classification on a large dataset containing millions of labeled images. The task was to recognize objects belonging to 1,000 different categories.

Before AlexNet, deep learning was not as widely accepted or used, owing to computational limitations and vanishing gradient problems. Convolutional neural networks had been around for a while, but they had not shown their full potential until AlexNet came into the picture.

Major Innovations of AlexNet:

- Architecture: AlexNet introduced a deep convolutional neural network architecture with an unprecedented depth of eight learned layers (five convolutional and three fully connected). Prior to this, most networks were relatively shallow because of the difficulty of training deep networks. This depth enabled the network to learn complex hierarchical features from raw image data.

- ReLU Activation: AlexNet popularized the use of the Rectified Linear Unit (ReLU) activation function. ReLU replaces traditional activation functions like sigmoid or tanh with a simpler function, f(x) = max(0, x), that accelerates training by mitigating the vanishing gradient problem. This helped in training deeper networks more efficiently.

- Data Augmentation: To combat overfitting and improve generalization, AlexNet employed data augmentation techniques such as cropping, flipping, and altering the color scheme of training images. This strategy increased the effective size of the training dataset and led to improved model performance.

- Local Response Normalization: Local response normalization (LRN) was another innovation introduced by AlexNet. This technique normalizes each neuron's response by the activity of neurons at the same spatial position in adjacent feature maps, which the authors reported improved the network's ability to generalize across variations in input data.

- Dropout: Although dropout was not introduced by AlexNet, the authors employed a form of it in their architecture. Dropout involves randomly deactivating some neurons during training, preventing the network from relying too heavily on specific neurons and improving its robustness.
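Three of the ideas listed above (ReLU, dropout, and flip-based augmentation) are simple enough to sketch in a few lines of plain Python. This is a minimal illustration rather than the authors' implementation: the function names are hypothetical, images are assumed to be stored as lists of pixel rows, and the dropout shown is the "inverted" variant that rescales surviving values during training, which is a common modern formulation and not necessarily the exact scheme used in the original network.

```python
import random


def relu(values):
    """Apply f(x) = max(0, x) element-wise to a list of numbers."""
    return [max(0.0, v) for v in values]


def dropout(values, rate=0.5, rng=None):
    """Zero each value with probability `rate` (training-time behaviour).

    Assumption: "inverted" dropout, which divides the surviving values by
    (1 - rate) so the expected activation stays the same.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    rng = rng or random.Random()
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]


def horizontal_flip(image):
    """Mirror an image stored as a list of pixel rows (left becomes right)."""
    return [row[::-1] for row in image]
```

For example, `relu([-2.0, 0.0, 3.5])` leaves only the positive value unchanged and maps the negative one to zero, while `horizontal_flip([[1, 2, 3], [4, 5, 6]])` reverses each row to produce a mirrored training sample.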

Impact on Deep Learning and AI

- Deep Learning Renaissance: Prior to AlexNet, neural networks were often overshadowed by other machine learning techniques. AlexNet's breakthrough results demonstrated the immense power of deep learning, prompting a surge of interest and investment in neural network research.

- Architectural Innovations: AlexNet introduced several architectural ideas that have become staples of CNN design. Concepts like deep architectures, ReLU activations, and dropout were incorporated into subsequent models, continually improving their performance.

- Transfer Learning: AlexNet's pretrained model, which had learned to recognize a wide range of features from the ImageNet dataset, paved the way for transfer learning. Researchers realized that these pre-learned features could be fine-tuned on smaller datasets for various specific tasks, significantly reducing the amount of training data needed.

- Computational Advances: The success of AlexNet highlighted the need for more powerful hardware, spurring advancements in graphics processing units (GPUs) and other hardware accelerators. This development was instrumental in enabling the training of larger and deeper neural networks.

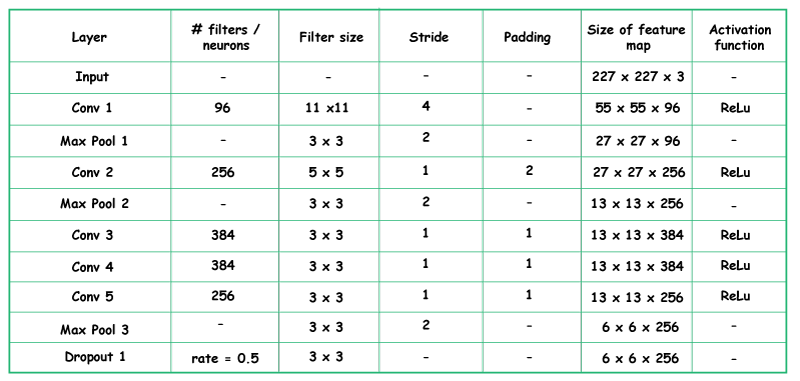

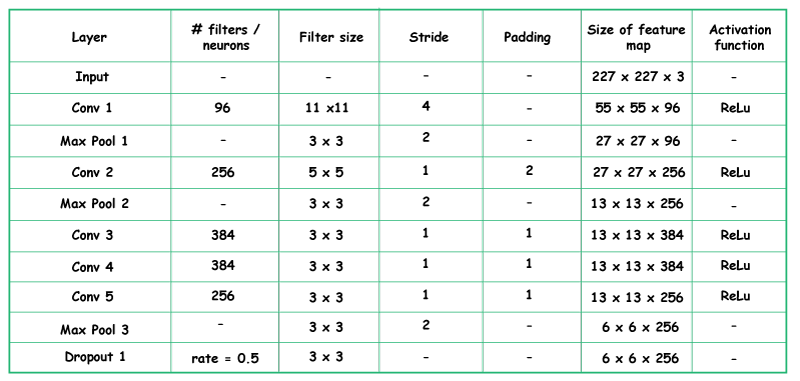

Architecture of AlexNet

One thing to note here: since AlexNet is a deep architecture, the authors introduced padding to prevent the size of the feature maps from shrinking drastically. The input to this model is an image of size 227 x 227 x 3.

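- Output size = ((input size - filter size) / stride) + 1, when no padding is applied

- Example: for the 227 x 227 input with an 11 x 11 filter and stride 4, output size = ((227 - 11) / 4) + 1 = 55

- With 96 filters, the output is 55 x 55 x 96

- Later in the network, a 3 x 3 window with stride 2 applied to a 27 x 27 feature map gives ((27 - 3) / 2) + 1 = 12 + 1 = 13

- With 256 channels, the output is 13 x 13 x 256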
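The output-size arithmetic above can be checked with a small helper. This is a sketch with a hypothetical function name; the `padding` parameter is an addition that generalizes the formula (it adds the padding on both sides of the input) and does not appear in the formula as written here.

```python
def conv_output_size(input_size, kernel_size, stride=1, padding=0):
    """Spatial output size of a convolution or pooling window along one axis.

    With padding=0 this is ((input - filter) / stride) + 1; the padding
    term is an assumed generalization for padded layers.
    """
    return (input_size + 2 * padding - kernel_size) // stride + 1


print(conv_output_size(227, 11, stride=4))  # 55
print(conv_output_size(27, 3, stride=2))    # 13
```

With a padding of 2, a 5 x 5 filter at stride 1 keeps a 27 x 27 feature map at 27 x 27, which illustrates how padding stops the feature maps from shrinking.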

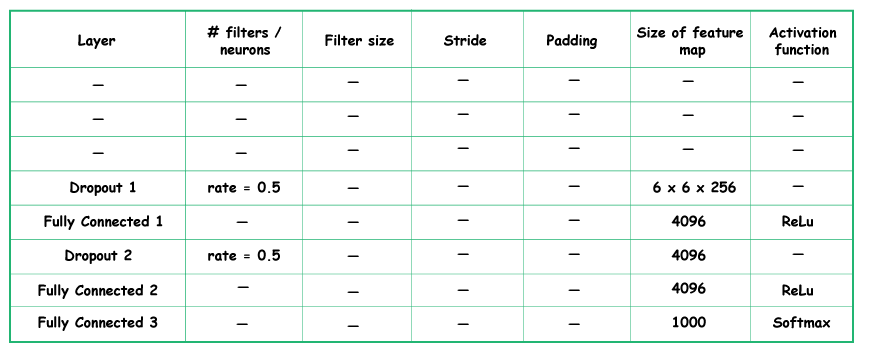

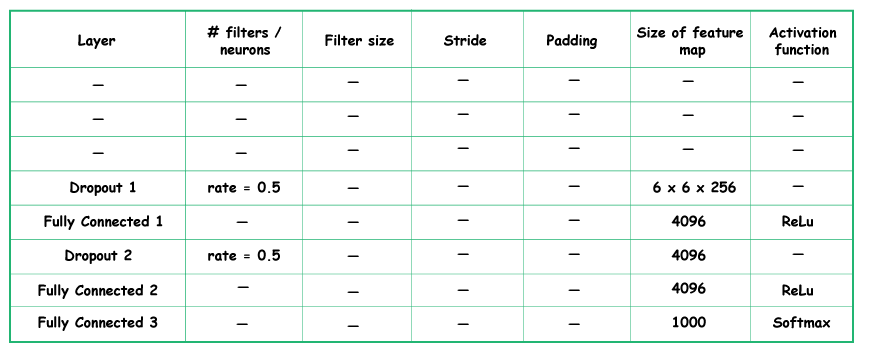

Fully Connected and Dropout Layers

- We have our first dropout layer. The dropout rate is set to 0.5.

- Then we have the first fully connected layer with a ReLU activation function. The size of the output is 4096. Next comes another dropout layer with the dropout rate fixed at 0.5.

- This is followed by a second fully connected layer with 4096 neurons and ReLU activation.

- Finally, we have the last fully connected layer, or output layer, with 1000 neurons, as there are 1000 classes in the dataset. The activation function used at this layer is softmax.

- The network has a total of 62.3 million learnable parameters.
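The 62.3 million figure can be reproduced by summing weights and biases layer by layer. The sketch below assumes the commonly described single-tower layout: five ungrouped convolutional layers with the kernel sizes and channel counts listed in the code, and a 6 x 6 x 256 feature map feeding the first fully connected layer. Those shapes and all names are assumptions for illustration, since only some of them are stated in this article. The original two-GPU network split some convolutions across GPUs, which gives a somewhat smaller count.

```python
# Assumed layer shapes (single-tower, ungrouped convolutions).
# Convolutions: (name, kernel_size, in_channels, out_channels)
CONV_LAYERS = [
    ("conv1", 11, 3, 96),
    ("conv2", 5, 96, 256),
    ("conv3", 3, 256, 384),
    ("conv4", 3, 384, 384),
    ("conv5", 3, 384, 256),
]

# Fully connected layers: (name, inputs, outputs)
FC_LAYERS = [
    ("fc6", 6 * 6 * 256, 4096),
    ("fc7", 4096, 4096),
    ("fc8", 4096, 1000),
]


def conv_params(kernel_size, in_channels, out_channels):
    """Weights plus one bias per output channel."""
    return kernel_size * kernel_size * in_channels * out_channels + out_channels


def fc_params(inputs, outputs):
    """Weights plus one bias per output neuron."""
    return inputs * outputs + outputs


def total_params():
    conv_total = sum(conv_params(k, c_in, c_out) for _, k, c_in, c_out in CONV_LAYERS)
    fc_total = sum(fc_params(n_in, n_out) for _, n_in, n_out in FC_LAYERS)
    return conv_total + fc_total


print(total_params())  # 62378344
```

Under these assumptions, the three fully connected layers account for roughly 58.6 million of the parameters, so most of the model's size sits in the layers described in this section and not in the convolutions.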

Applications of AlexNet

AlexNet, as one of the pioneering deep learning models, has had a profound impact on various fields, leading to its application in a range of domains beyond its initial success in image classification. Here are some notable applications of AlexNet:

- Image Classification: The primary application that propelled AlexNet to fame was image classification. It showcased unprecedented accuracy in classifying images into a wide range of categories. This capability has found applications in content moderation, image search engines, and automated tagging systems.

- Object Detection: AlexNet's architecture, together with subsequent improvements, has been adapted for object detection tasks. By using its learned features and hierarchical representation, it is possible not only to recognize objects but also to locate and classify them within an image.

- Medical Imaging: The medical field has embraced AlexNet for various tasks, including identifying diseases in medical images such as X-rays, MRIs, and CT scans. Its ability to detect patterns in complex medical data has assisted in early diagnosis and treatment planning.

- Autonomous Vehicles: AlexNet's convolutional layers and hierarchical feature extraction make it suitable for object detection in autonomous vehicles. It aids in identifying pedestrians, vehicles, traffic signs, and other objects, contributing to the perception capabilities of self-driving cars.

- Visual Surveillance: In security applications, AlexNet is employed for video surveillance and anomaly detection. It can recognize unusual patterns or activities within a video stream, alerting security personnel to potential threats.

- Fashion and Retail: The fashion industry uses AlexNet for tasks such as visual search, product recommendation, and style classification. It enables platforms to understand visual preferences and recommend items that match users' tastes.

- Agriculture: In agriculture, AlexNet's capabilities have been harnessed for crop disease detection and yield estimation. It can identify plant diseases from images of leaves, helping farmers take timely action to protect their crops.

- Emotion Recognition: By training on facial expression datasets, AlexNet can be used to analyze human emotions from facial images or videos. This has applications in areas like market research, human-computer interaction, and mental health monitoring.

- Art and Creativity: AlexNet's features have been used in creative applications such as style transfer, in which the artistic style of one image is applied to the content of another. This results in unique and creative visual outputs.

- Natural Language Processing (NLP): While AlexNet's direct application is in computer vision, its architecture has inspired similar designs in the NLP domain. Concepts from CNNs have been adapted to create models capable of processing and understanding sequential data like text.
