AI Ethics (AI Code of Ethics)

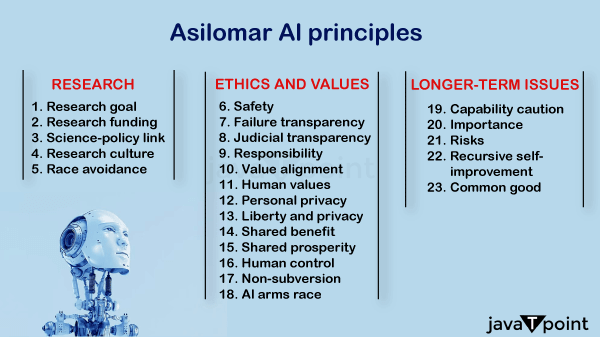

Artificial intelligence (AI) is a fast-evolving technology that has changed many parts of our lives, including healthcare, banking, entertainment, and transportation. However, with such enormous potential come serious ethical challenges. AI's growing presence in society requires a set of moral principles and standards to ensure that it is developed and used responsibly. This framework is often referred to as AI ethics or an AI code of ethics. One noteworthy effort in this respect is the Asilomar AI Principles, a collection of 23 guidelines developed through cooperation between AI researchers, developers, and academics from diverse fields. These principles emphasize AI development that is safe, secure, compassionate, and ecologically friendly, laying the groundwork for responsible AI development.

Beyond theoretical discussion, AI ethics has become an important item on the agenda of both organizations and governments. Leading technology companies such as IBM, Google, and Meta have dedicated teams that address the ethical problems raised by AI applications.

Importance of AI ethics:

AI ethics are critical for a number of reasons:

Stakeholders in AI Ethics: Collaborators in Responsible AI Development

Establishing ethical guidelines for the responsible use and growth of artificial intelligence (AI) involves a wide range of stakeholders, who must work together to address the complex intersection of social, economic, and political challenges that AI technology raises. Each stakeholder group plays a critical role in fostering fairness, reducing bias, and mitigating the risks associated with AI technologies.

Academics: Academic researchers and scholars produce the theoretical insights, studies, and proposals that serve as the foundation for AI ethics. Their work informs governments, enterprises, and non-profit organizations about the most recent advances and difficulties in AI technology.

Government: Government agencies and committees play a critical role in promoting AI ethics within a country. They can develop laws and regulations to govern artificial intelligence technology, ensuring its responsible use and addressing social concerns.

Intergovernmental Organizations: International organizations such as the United Nations and the World Bank are essential in raising global awareness of AI ethics. They create worldwide agreements and protocols to support safe AI use. For example, the adoption by UNESCO's member states of the first global agreement on the ethics of artificial intelligence in 2021 emphasizes the importance of preserving human rights and dignity in AI development and deployment.

Non-profit Organizations: Non-profit organizations such as Black in AI and Queer in AI work to promote diversity and representation in the field of artificial intelligence. They strive to ensure that varied viewpoints and demographics are taken into account when AI technology is developed. Organizations like the Future of Life Institute have contributed to AI ethics by developing recommendations such as the Asilomar AI Principles, which identify particular dangers, problems, and intended outcomes for AI technology.

Private Companies: Private-sector firms, including large technology companies such as Google and Meta as well as organizations in finance, consulting, healthcare, and other AI-enabled industries, must build ethics teams and codes of conduct. In doing so, they establish guidelines for responsible AI research and application within their particular businesses.

Ethical Challenges of AI:

The ethical concerns raised by artificial intelligence are broad and complex, spanning many facets of AI technology and its applications.

Benefits of ethical AI:

Examples of AI Code of Ethics:

Mastercard's AI code of ethics:

Using AI to Transform Images with Lensa AI

Lensa AI is an example of ethical concerns arising from the use of AI to turn ordinary photos into stylized, cartoon-like profile pictures. Critics cited a number of moral concerns:

ChatGPT's AI Framework

ChatGPT is an AI model that responds to user queries in text; however, its use raises ethical issues.

Resources of AI Ethics

A large number of organizations, legislators, and regulatory bodies are actively working to establish and advance ethical AI practices. These organizations have a significant influence on how artificial intelligence is used responsibly, how urgent ethical issues are resolved, and how the industry moves towards more ethical AI applications.

Nvidia NeMo Guardrails: NeMo Guardrails from Nvidia provides an adaptable interface for setting up specific behavioural rules for AI bots, especially chatbots. These rules help ensure that AI systems adhere to ethical, legal, or sector-specific requirements.

The Human-Centered Artificial Intelligence (HAI) Institute at Stanford University: Stanford HAI conducts ongoing research and offers recommendations on human-centered AI best practices. "Responsible AI for Safe and Equitable Health," one of its projects, tackles ethical and safety issues with AI applications in healthcare.

AI Now Institute: The AI Now Institute is an organization dedicated to researching responsible AI practices and analyzing the societal consequences of AI. Its research covers a wide range of topics, such as worker data rights, privacy, large-scale AI models, algorithmic accountability, and antitrust issues. Reports such as "AI Now 2023 Landscape: Confronting Tech Power" offer insightful analyses of the ethical issues that should guide the development of AI regulations.

Harvard University's Berkman Klein Center for Internet & Society: This center investigates fundamental issues related to AI governance and ethics. Its areas of interest include algorithmic accountability, AI governance frameworks, algorithms in criminal justice, and information quality. The center's funded research helps shape the ethical standards for artificial intelligence.

Joint Technical Committee on Artificial Intelligence (JTC 21) of CEN-CENELEC: The European Union is currently working to create responsible AI standards through JTC 21. These standards are meant to support ethical principles, guide the European market, and inform EU legislation. JTC 21 is also tasked with defining the technical specifications for the accuracy, robustness, and transparency of AI systems.

AI Risk Management Framework (RMF 1.0) developed by NIST: Government organizations and the commercial sector can use the guidelines of the National Institute of Standards and Technology (NIST) to manage emerging AI risks and encourage ethical AI practices. The framework offers comprehensive advice on putting policies and controls in place to manage AI systems in diverse organizational settings.

"The Presidio Recommendations on Responsible Generative AI" published by World Economic Forum: This white paper provides thirty practical recommendations for navigating the ethical complexities of generative AI. It addresses social progress with AI technology, open innovation, international cooperation, and responsible development.

Future of AI Ethics:

A more proactive approach is needed to ensure that the AI of the future is ethical. Trying to remove bias from AI systems is not enough, because bias can be ingrained in the data itself. Rather, the emphasis should be on establishing social norms and fairness in order to steer AI towards making ethical decisions. Under this proactive approach, principles are established rather than a checklist of "do's and don'ts."

Human intervention is vital to ensure responsible AI. The design of products and services should put people's needs first and should not discriminate against underrepresented groups. Because AI adoption may deepen societal divisions, it is imperative to address the economic disparities that result from it. It is also critical to prepare for the possibility that malicious actors will exploit AI. As AI technology develops quickly, creating safeguards to stop unethical AI behaviour is crucial. The possible future emergence of highly autonomous, unethical AI systems makes such safeguards all the more necessary to reduce risk and keep AI development ethical.
