Hyperparameters in Machine Learning

Hyperparameters in machine learning are parameters that the user explicitly defines to control the learning process. They are used to improve how the model learns, and their values are set before the model's learning process begins.

In this topic, we discuss one of the most important concepts in machine learning: hyperparameters. We cover examples of hyperparameters, hyperparameter tuning, the categories of hyperparameters, and how a hyperparameter differs from a parameter in machine learning. But first, let's understand what a hyperparameter is.

What are hyperparameters?

In machine learning and deep learning, a model is represented by its parameters. Hyperparameters, in contrast, are settings used by the learning algorithm, and building a good model involves selecting the hyperparameter values that give the best result. So, what are these hyperparameters? The answer is, "Hyperparameters are defined as the parameters that are explicitly defined by the user to control the learning process." The prefix "hyper" suggests that they are top-level parameters that control the learning process. The machine learning engineer selects and sets their values before the learning algorithm begins training the model. Hence, they are external to the model, and the learning algorithm does not learn or update them during training.

Some examples of Hyperparameters in Machine Learning

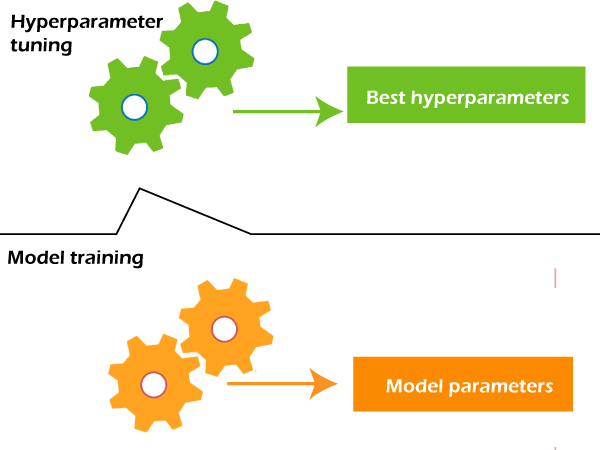
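A commonly cited example of a hyperparameter is k in the k-nearest neighbours (kNN) algorithm. The sketch below is a minimal pure-Python kNN classifier written only for illustration: the function name `knn_predict` and the toy data are hypothetical, not taken from any library. It shows that k is supplied by the user, is never changed by the algorithm, and can still change the prediction.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k):
    """Classify `query` by majority vote among its k nearest training points.

    `k` is a hyperparameter: the user picks it up front, and nothing in
    the algorithm learns or changes it.
    """
    # Sort training indices by Euclidean distance to the query point.
    order = sorted(
        range(len(train_points)),
        key=lambda i: math.dist(train_points[i], query),
    )
    # Majority vote among the labels of the k closest points.
    votes = Counter(train_labels[i] for i in order[:k])
    return votes.most_common(1)[0][0]


# Hypothetical toy data: a cluster of "a" points, one stray "b" point
# close to them, and a distant cluster of "b" points.
points = [(0.0, 0.0), (0.2, 0.1), (0.1, 0.3), (0.6, 0.6), (3.0, 3.0), (3.2, 2.8)]
labels = ["a", "a", "a", "b", "b", "b"]

print(knn_predict(points, labels, (0.5, 0.5), k=1))  # b (only the stray point votes)
print(knn_predict(points, labels, (0.5, 0.5), k=3))  # a (two "a" neighbours outvote it)
```

The same data and query give different answers for k = 1 and k = 3, which is why such values have to be chosen deliberately rather than left to chance.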

Difference between Parameter and Hyperparameter

Parameters and hyperparameters (also called model hyperparameters) are often confused. To clear up this confusion, let's understand the difference between them and how they are related to each other.

Model Parameters: Model parameters are configuration variables that are internal to the model, and the model learns them on its own from the data. Examples include the weights (coefficients) of the independent variables in a linear regression model or an SVM, the weights and biases of a neural network, and the cluster centroids in clustering. Some key points for model parameters are as follows:

Model Hyperparameters: Hyperparameters are parameters that the user explicitly defines to control the learning process. Some key points for model hyperparameters are as follows:
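The difference becomes concrete in a small training loop. The sketch below is plain Python, and its function name and data are illustrative assumptions rather than any library's API. It fits a one-variable linear regression by gradient descent. The weight `w` and bias `b` are model parameters that the loop learns from the data, while `learning_rate` and `epochs` are hyperparameters that the user fixes before training starts.

```python
def fit_linear_regression(xs, ys, learning_rate, epochs):
    """Fit y ~ w * x + b by full-batch gradient descent on mean squared error.

    learning_rate and epochs are hyperparameters: chosen by the user and
    never changed by the algorithm. w and b are model parameters: learned.
    """
    w, b = 0.0, 0.0  # parameters start at arbitrary values
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Only the parameters are updated; the hyperparameters stay fixed.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]

w, b = fit_linear_regression(xs, ys, learning_rate=0.05, epochs=2000)
print(f"learned w={w:.3f}, b={b:.3f}")  # close to w=2 and b=1
```

Changing `learning_rate` or `epochs` changes how training runs, but the values the training actually produces are `w` and `b`.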

Categories of Hyperparameters

Broadly, hyperparameters can be divided into two categories: hyperparameters for optimization and hyperparameters for specific models.

Hyperparameter for Optimization

The process of selecting the best hyperparameters to use is known as hyperparameter tuning, and the tuning process is also known as hyperparameter optimization. Optimization hyperparameters are the ones that control how the model is optimized (trained).

Some of the popular optimization hyperparameters are the learning rate and the batch size.
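Hyperparameter tuning can be sketched as a grid search: train one model for every combination of candidate values and keep the combination with the lowest error on held-out validation data. The sketch below assumes a toy one-variable linear regression trained with mini-batch stochastic gradient descent. The function names, the candidate values, and the data are illustrative choices, the number of epochs is held fixed for simplicity, and grid search is only one tuning strategy (random search is another common one).

```python
import itertools
import random


def train_sgd(xs, ys, learning_rate, batch_size, epochs=50, seed=0):
    """Fit y ~ w * x + b with mini-batch SGD and return (w, b)."""
    rng = random.Random(seed)  # fixed seed so every run is reproducible
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)
        # One parameter update per mini-batch of `batch_size` examples.
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            errors = [w * xs[i] + b - ys[i] for i in batch]
            grad_w = 2.0 / len(batch) * sum(e * xs[i] for e, i in zip(errors, batch))
            grad_b = 2.0 / len(batch) * sum(errors)
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b


def mse(w, b, xs, ys):
    """Mean squared error of the line w * x + b on (xs, ys)."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical data from y = 3x - 2, split into training and validation sets.
train_x = [i / 20 for i in range(20)]
train_y = [3 * x - 2 for x in train_x]
val_x = [0.025 + i / 10 for i in range(10)]
val_y = [3 * x - 2 for x in val_x]

# Grid search: evaluate every (learning_rate, batch_size) pair on validation data.
grid = {"learning_rate": [0.001, 0.01, 0.1], "batch_size": [1, 4, 20]}
results = []
for lr, bs in itertools.product(grid["learning_rate"], grid["batch_size"]):
    w, b = train_sgd(train_x, train_y, lr, bs)
    results.append((mse(w, b, val_x, val_y), lr, bs))

best_loss, best_lr, best_bs = min(results)
print(f"best learning_rate={best_lr}, batch_size={best_bs}, validation MSE={best_loss:.3g}")
```

Because the number of epochs is fixed here, a smaller batch size also means more parameter updates, which is one reason these two hyperparameters are usually tuned together.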

Note: The learning rate is a crucial hyperparameter for optimizing the model, so if you can tune only a single hyperparameter, it is suggested that you tune the learning rate.
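The sensitivity of training to the learning rate shows up even on the simplest problem. The toy function below is an illustrative assumption, not taken from the text. It runs gradient descent on f(x) = x², whose derivative is 2x, so each step multiplies x by (1 - 2 * learning_rate). A rate that is too small converges slowly, a moderate rate converges quickly, and a rate above 1 makes the iterates grow instead of shrink.

```python
def gradient_descent_on_square(learning_rate, steps=20, start=5.0):
    """Minimise f(x) = x**2 (minimum at x = 0) with plain gradient descent."""
    x = start
    for _ in range(steps):
        x -= learning_rate * 2 * x  # gradient step, since f'(x) = 2x
    return x


# Compare how far from the minimum each learning rate ends up after 20 steps.
for lr in (0.01, 0.1, 0.5, 1.1):
    print(f"learning_rate={lr}: x after 20 steps = {gradient_descent_on_square(lr):.4g}")
```

Only the learning rate differs between the four runs, yet the outcomes range from barely moving to diverging, which illustrates why it is usually the first hyperparameter worth tuning.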

Hyperparameter for Specific Models

Hyperparameters that are involved in the structure of the model are known as hyperparameters for specific models. Examples include the number of hidden layers and the number of hidden units in a neural network.

It is important to specify the number of hidden units for a neural network. A common rule of thumb is that it should lie between the size of the input layer and the size of the output layer. A more specific heuristic sets the number of hidden units to 2/3 of the size of the input layer plus the size of the output layer. Complex functions may need more hidden units, but the number should not be so large that the model overfits.
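The 2/3 guideline for hidden units is easy to compute, and it also shows how structural hyperparameters fix the size of the model. The sketch below uses hypothetical function names. It assumes the result is rounded to the nearest integer (the text does not say how to handle fractions) and assumes a fully connected network with one bias per unit. It computes the suggested hidden-unit count and the number of parameters that a network of that shape has to learn.

```python
def hidden_units_rule_of_thumb(n_inputs, n_outputs):
    """2/3 of the input size plus the output size, rounded (rounding is an assumption)."""
    return round(2 * n_inputs / 3 + n_outputs)


def count_parameters(layer_sizes):
    """Number of weights and biases in a fully connected network.

    layer_sizes lists the width of every layer: [inputs, hidden..., outputs].
    A dense layer from a units to b units has a*b weights and b biases.
    """
    return sum(a * b + b for a, b in zip(layer_sizes, layer_sizes[1:]))


n_in, n_out = 12, 3
hidden = hidden_units_rule_of_thumb(n_in, n_out)
print(hidden)  # 11

# The structural hyperparameters (number of hidden layers and their widths)
# determine how many parameters training will have to learn.
print(count_parameters([n_in, hidden, n_out]))          # 179 (one hidden layer)
print(count_parameters([n_in, hidden, hidden, n_out]))  # 311 (two hidden layers)
```

Adding a second hidden layer of the same width raises the parameter count from 179 to 311, which is the kind of growth in capacity that can lead to overfitting if it is not needed.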

Conclusion

Hyperparameters are the parameters that are explicitly defined to control the learning process before a machine learning algorithm is applied to a dataset. They are used to specify the learning capacity and complexity of the model. Some hyperparameters are used for the optimization of the model, such as the batch size and learning rate, and some are specific to the model, such as the number of hidden layers.
