Big Data Technologies

Before big data technologies were introduced, data was managed using general-purpose programming languages and basic structured query languages. However, these tools were not efficient enough to keep up, because the volume of information and data in every organization and domain has grown continuously. That is why it became important to introduce efficient and stable technologies that can handle such huge volumes of data and meet the data production and control requirements of clients and large organizations. Big data technologies, a buzzword we hear a lot these days, address these needs.

In this article, we discuss the leading technologies that have helped Big Data reach greater heights. Before we look at the individual technologies, let us first briefly understand what Big Data Technology is.

What is Big Data Technology?

Big data technology is defined as a software utility designed primarily to analyze, process, and extract information from very large data sets with extremely complex structures, which traditional data processing software finds very difficult to handle.

Among the current major trends in technology, big data technologies are closely associated with other rapidly growing fields such as deep learning, machine learning, artificial intelligence (AI), and the Internet of Things (IoT). In combination with these, big data technologies focus on analyzing and handling large amounts of both real-time data and batch data.

Types of Big Data Technology

Before we start with the list of big data technologies, let us first discuss the broad classification of this technology. Big Data technology is primarily classified into the following two types:

Operational Big Data Technologies

This type of big data technology mainly deals with the basic day-to-day data that people generate and process. Typically, operational big data includes daily data such as online transactions, social media activity, and the data of any particular organization or firm, which is usually needed for analysis by software based on big data technologies. This data can also be regarded as the raw data used as input for Analytical Big Data Technologies.

Some specific examples of Operational Big Data Technologies are listed below:

- Online ticket booking systems, e.g., for buses, trains, flights, and movies.

- Online trading or shopping from e-commerce websites like Amazon, Flipkart, Walmart, etc.

- Online data on social media sites, such as Facebook, Instagram, WhatsApp, etc.

- The employees' data or executives' particulars in multinational companies.

Analytical Big Data Technologies

Analytical Big Data is commonly regarded as an advanced form of Big Data Technologies. This type is somewhat more complex than operational big data. Analytical big data is mainly used where performance criteria matter, and important real-time business decisions are made based on reports created by analyzing operational data. In other words, the actual investigation of big data that drives business decisions falls under this type of big data technology.

Some common examples of Analytical Big Data Technologies are listed below:

- Stock market data

- Weather forecasting data and time series analysis

- Medical health records through which doctors can monitor the health status of an individual

- Space mission databases, where every piece of information about a mission is very important

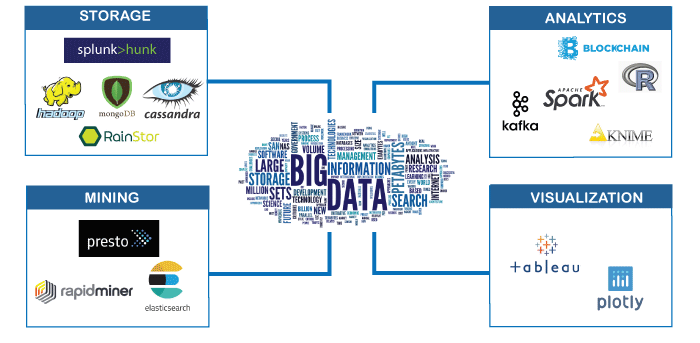

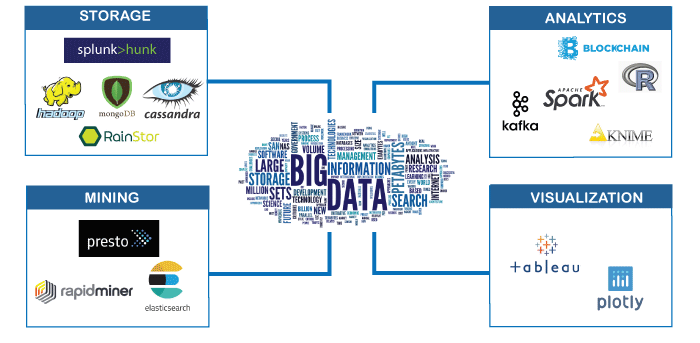

Top Big Data Technologies

We can categorize the leading big data technologies into the following four sections:

- Data Storage

- Data Mining

- Data Analytics

- Data Visualization

Data Storage

Let us first discuss leading Big Data Technologies that come under Data Storage:

- Hadoop: When it comes to handling big data, Hadoop is one of the leading technologies that come into play. It is built around the MapReduce architecture and is mainly used to process data in batches. The Hadoop framework was introduced to store and process data in a distributed environment, running in parallel on commodity hardware with a simple programming model.

Apart from this, Hadoop is well suited for storing and analyzing data from many machines quickly and at low cost. That is why Hadoop is known as one of the core components of big data technologies. It is developed by the Apache Software Foundation, which released Hadoop 1.0 in Dec 2011. Hadoop is written in the Java programming language.
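To make the MapReduce idea more concrete, here is a minimal sketch in plain Python. It is not Hadoop's API: the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical and everything runs in a single process, whereas Hadoop distributes each phase across many machines. The sketch only illustrates the three logical steps of a word count job.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    """Map step: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle step: group all emitted values by their key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce step: collapse the values for one key into a single count."""
    return key, sum(values)


def word_count(lines):
    """Run the three phases in sequence over an iterable of text lines."""
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


counts = word_count(["big data tools", "big data storage"])
print(counts)  # {'big': 2, 'data': 2, 'tools': 1, 'storage': 1}
```

Because each line is mapped independently and each key is reduced independently, both phases can in principle run in parallel, which is the property a distributed framework exploits.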

- MongoDB: MongoDB is another important component of big data technologies in terms of storage. Because it is a NoSQL database, the relational properties of an RDBMS do not apply to MongoDB. Unlike traditional RDBMS databases, which store rows in tables with a fixed schema and are queried with structured query languages, MongoDB stores data as documents with a flexible schema.

The structure of the data storage in MongoDB is also different from traditional RDBMS databases. This enables MongoDB to hold massive amounts of data. It is based on a simple cross-platform document-oriented design. The database in MongoDB uses JSON-like documents with optional schemas. This makes it a good fit for operational data storage, as can be seen in many financial organizations. As a result, MongoDB is replacing traditional mainframes in some cases and offering the flexibility to handle a wide range of high-volume data types in distributed architectures.

MongoDB was first released in Feb 2009 by MongoDB Inc. (then known as 10gen). It is written in a combination of C++, Python, JavaScript, and Go.
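The document model can be illustrated with a small sketch that uses only plain Python dictionaries. It does not use a MongoDB driver; the `customers` data and the `find` helper are hypothetical and only mimic the idea of matching documents by field values. Note that the two documents live in the same collection even though they do not share the same set of fields.

```python
import json

# A "collection" is modeled here as a plain list of dict documents.
# The second document has an extra, nested "accounts" field that the
# first one lacks; no table schema has to be altered to allow this.
customers = [
    {"_id": 1, "name": "Asha", "city": "Pune"},
    {
        "_id": 2,
        "name": "Ben",
        "city": "Leeds",
        "accounts": [{"type": "savings", "balance": 1200}],
    },
]


def find(collection, query):
    """Return the documents whose fields equal every key/value pair in query."""
    return [
        doc
        for doc in collection
        if all(doc.get(field) == value for field, value in query.items())
    ]


matches = find(customers, {"city": "Leeds"})
print(json.dumps(matches, indent=2))
```

In a relational design, the nested `accounts` list would typically require a second table and a join; in a document store it is simply part of the document.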

- RainStor: RainStor is a popular database management system designed to manage and analyze organizations' Big Data requirements. It uses deduplication techniques to reduce the storage needed to retain vast amounts of data for reference.

RainStor was developed in 2004 by the RainStor software company. It can be queried using SQL. Companies such as Barclays and Credit Suisse use RainStor for their big data needs.

- Hunk: Hunk is mainly helpful when data needs to be accessed in remote Hadoop clusters using virtual indexes. This lets us use the Splunk Search Processing Language (SPL) to analyze data. Also, Hunk allows us to report on and visualize vast amounts of data from Hadoop and NoSQL data sources.

Hunk was introduced in 2013 by Splunk Inc. It is based on the Java programming language.

- Cassandra: Cassandra is one of the leading big data technologies among the top NoSQL databases. It is an open-source, distributed, wide-column store. It is freely available and designed for high availability with no single point of failure. This helps in handling data efficiently across large clusters of commodity servers. Cassandra's essential features include fault tolerance, scalability, MapReduce support, a distributed architecture, eventual consistency, tunable consistency, its own query language (CQL), and multi-datacenter replication.

Cassandra was originally developed at Facebook for its inbox search feature and was open-sourced in 2008; it is now maintained by the Apache Software Foundation. It is written in the Java programming language.
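Tunable consistency can be reasoned about with a little arithmetic. With a replication factor N, a write acknowledged by W replicas and a read that consults R replicas must share at least one replica whenever R + W > N, so the read is able to see the latest write. The sketch below only encodes that rule; the function names are hypothetical and are not part of any Cassandra driver.

```python
def quorum(replication_factor):
    """Smallest number of replicas that forms a majority."""
    return replication_factor // 2 + 1


def reads_see_latest_write(replication_factor, write_acks, read_acks):
    """True when every read set must overlap every write set (R + W > N)."""
    return write_acks + read_acks > replication_factor


n = 3
print(quorum(n))                          # 2
print(reads_see_latest_write(n, 2, 2))    # True: quorum writes + quorum reads
print(reads_see_latest_write(n, 1, 1))    # False: fast, but only eventually consistent
```

Choosing smaller values of W and R lowers latency at the cost of possibly reading stale data, which is the trade-off that "tunable" refers to.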

Data Mining

Let us now discuss leading Big Data Technologies that come under Data Mining:

- Presto: Presto is an open-source, distributed SQL query engine developed to run interactive analytical queries against very large data sources. The size of data sources can vary from gigabytes to petabytes. Presto helps in querying the data in Cassandra, Hive, relational databases, and proprietary data storage systems.

Presto is a Java-based query engine that was developed at Facebook and open-sourced in 2013. Companies like Repro, Netflix, Airbnb, Facebook, and Checkr use this big data technology.

- RapidMiner: RapidMiner is data science software that offers a robust and powerful graphical user interface to create, deliver, manage, and maintain predictive analytics. Using RapidMiner, we can create advanced workflows, with scripting support in a variety of programming languages.

RapidMiner is a Java-based centralized solution developed in 2001 by Ralf Klinkenberg, Ingo Mierswa, and Simon Fischer at the Technical University of Dortmund's AI unit. It was initially named YALE (Yet Another Learning Environment). Companies that make good use of RapidMiner include Boston Consulting Group, InFocus, Domino's, Slalom, and Vivint Smart Home.

- ElasticSearch: When it comes to finding information, Elasticsearch is known as an essential tool. It is the core component of the ELK stack, alongside Logstash and Kibana. In simple words, Elasticsearch is a search engine based on the Lucene library and works similarly to Solr. It provides a distributed, multi-tenant-capable full-text search engine that stores schema-free JSON documents and exposes an HTTP web interface.

Elasticsearch is primarily written in the Java programming language and was developed in 2010 by Shay Banon. It has been maintained by Elastic NV since 2012. Elasticsearch is used by many top companies, such as LinkedIn, Netflix, Facebook, Google, Accenture, StackOverflow, etc.
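Full-text search engines built on Lucene rely on an inverted index, which maps each term to the documents that contain it. The following is a toy sketch of that data structure in plain Python, assuming whitespace tokenization and lowercase matching only; the helper names `build_index` and `search` are hypothetical, and real engines add analysis, relevance scoring, and distribution across shards on top of this idea.

```python
from collections import defaultdict


def build_index(docs):
    """Map every lowercase token to the set of document ids containing it."""
    index = defaultdict(set)
    for doc_id, doc in docs.items():
        for token in doc["text"].lower().split():
            index[token].add(doc_id)
    return index


def search(index, query):
    """Return the ids of documents that contain every term of the query."""
    terms = query.lower().split()
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


# Schema-free JSON-like documents, keyed by a made-up document id.
docs = {
    1: {"text": "Kafka streams real-time data"},
    2: {"text": "Spark processes batch data"},
    3: {"text": "Spark streams data in windows"},
}
index = build_index(docs)
print(sorted(search(index, "spark data")))  # [2, 3]
print(sorted(search(index, "streams")))     # [1, 3]
```

A lookup touches only the posting sets of the query terms rather than scanning every document, which is why this structure scales to large collections.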

Data Analytics

Now, let us discuss leading Big Data Technologies that come under Data Analytics:

- Apache Kafka: Apache Kafka is a popular distributed streaming platform. It is primarily known for three core capabilities: publishing and subscribing to streams of records, storing those streams durably, and processing them as they arrive. It acts as an asynchronous messaging broker that can ingest and process real-time streaming data, and in this respect it is similar to an enterprise messaging system or message queue.

Besides, Kafka retains data for a configurable retention period, and data is transmitted through a producer-consumer mechanism. Kafka and its ecosystem have received many enhancements to date, such as Schema Registry, KTables, and KSQL. It is written in Java and Scala; it was originally developed at LinkedIn and open-sourced in 2011 before moving to the Apache Software Foundation. Some top companies using the Apache Kafka platform include Twitter, Spotify, Netflix, Yahoo, and LinkedIn.
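The producer-consumer mechanism and the retention idea can be sketched as an append-only log in which every record has an offset and each consumer remembers the offset it should read next. The class below is a simplified, in-memory illustration and not the Kafka client API: the name `TopicLog` is hypothetical, and retention is modeled as a maximum record count, whereas Kafka itself applies time-based or size-based retention.

```python
class TopicLog:
    """A tiny in-memory stand-in for one partition of a topic."""

    def __init__(self, retention):
        self.retention = retention  # maximum number of records kept
        self.records = []
        self.base_offset = 0        # offset of self.records[0]

    def produce(self, value):
        """Append a record and return the offset assigned to it."""
        offset = self.base_offset + len(self.records)
        self.records.append(value)
        overflow = len(self.records) - self.retention
        if overflow > 0:
            # Retention: discard the oldest records, offsets keep increasing.
            del self.records[:overflow]
            self.base_offset += overflow
        return offset

    def consume(self, offset, max_records=10):
        """Return (records from offset onward, next offset to read)."""
        start = max(offset, self.base_offset) - self.base_offset
        batch = self.records[start:start + max_records]
        return batch, self.base_offset + start + len(batch)


log = TopicLog(retention=3)
offsets = [log.produce(value) for value in "abcde"]
print(offsets)           # [0, 1, 2, 3, 4]
print(log.consume(0))    # (['c', 'd', 'e'], 5): 'a' and 'b' have expired
print(log.consume(4))    # (['e'], 5)
```

Because reading does not remove records, several consumers can read the same log independently, each with its own offset, which is the main difference from a classic message queue.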

- Splunk: Splunk is known as one of the popular software platforms for capturing, correlating, and indexing real-time streaming data in searchable repositories. Splunk can also produce graphs, alerts, summarized reports, data visualizations, and dashboards, etc., using related data. It is mainly beneficial for generating business insights and web analytics. Besides, Splunk is also used for security purposes, compliance, application management and control.

Splunk is developed by Splunk Inc., which was founded in 2003. It is written in a combination of AJAX, Python, C++, and XML. Companies such as Trustwave, QRadar, and 1Labs are making good use of Splunk for their analytical and security needs.

- KNIME: KNIME is used to draw visual data flows, execute specific steps and analyze the obtained models, results, and interactive views. It also allows us to execute all the analysis steps altogether. It consists of an extension mechanism that can add more plugins, giving additional features and functionalities.

KNIME is based on Eclipse and written in the Java programming language. Its development began at the University of Konstanz in 2004, and it is maintained today by the company KNIME AG. Companies that make use of KNIME include Harnham, Tyler, and Palo Alto.

- Spark: Apache Spark is one of the core technologies in the list of big data technologies and is widely used by top companies. Spark is known for its in-memory computing capabilities, which help increase the overall speed of data processing. It also provides a generalized execution model to support more applications. Besides, it includes high-level APIs (e.g., for Java, Scala, and Python) to ease the development process.

Also, Spark allows users to process real-time streaming data using batching and windowing operations. Higher-level abstractions such as Datasets and DataFrames are built on top of RDDs (Resilient Distributed Datasets), the core abstraction of Spark Core. Components like Spark MLlib, GraphX, and SparkR help with machine learning, graph processing, and data science workloads. Spark is written mainly in Scala and offers APIs for Java, Scala, Python, and R. It originated at UC Berkeley's AMPLab in 2009 and was later donated to the Apache Software Foundation. Companies like Amazon, ORACLE, CISCO, VerizonWireless, and Hortonworks are using this big data technology.
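The combination of batching and windowing can be sketched without Spark itself. In the toy example below, a stream arrives as a sequence of micro-batches, and a sliding window aggregates the most recent few batches. The function name `windowed_sums` and its parameters are hypothetical; window length and slide interval are expressed as a number of batches, and only complete windows are emitted, which is a simplification.

```python
from collections import deque


def windowed_sums(batches, window_length, slide_interval):
    """Sum the last `window_length` batches, emitting every `slide_interval` batches."""
    window = deque(maxlen=window_length)  # oldest batch total drops out automatically
    results = []
    for count, batch in enumerate(batches, start=1):
        window.append(sum(batch))
        window_is_full = count >= window_length
        if window_is_full and (count - window_length) % slide_interval == 0:
            results.append(sum(window))
    return results


# Five micro-batches of event values, e.g. order amounts per batch interval.
batches = [[1, 2], [3], [4, 5], [6], [7, 8]]
print(windowed_sums(batches, window_length=3, slide_interval=1))  # [15, 18, 30]
print(windowed_sums(batches, window_length=3, slide_interval=2))  # [15, 30]
```

Each result covers three consecutive batches; a larger slide interval simply emits results less often over the same stream.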

- R-Language: R is a programming language mainly used for statistical computing and graphics. It is a free software environment used by leading data miners, practitioners, and statisticians. The language is primarily beneficial in the development of statistical software and in data analytics.

R 1.0.0 was released in Feb 2000, and the language is supported by the R Foundation. It is written mainly in C, Fortran, and R itself. Companies like Barclays, American Express, and Bank of America use R for their data analytics needs.

- Blockchain: Blockchain is a technology that can be used in several applications related to different industries, such as finance, supply chain, manufacturing, etc. It is primarily used in processing operations like payments and escrow, where it helps reduce the risk of fraud. Besides, it can enhance the overall processing speed of transactions, increase financial privacy, and internationalize markets. Additionally, it is used to fulfill the needs of a shared ledger, smart contracts, privacy, and consensus in a business network environment.

Blockchain technology was first described in 1991 by two researchers, Stuart Haber and W. Scott Stornetta. However, blockchain had its first real-world application in Jan 2009, when Bitcoin was launched. It is a specific type of database, and implementations are written in languages such as Python, C++, and JavaScript. ORACLE, Facebook, and MetLife are a few of the top companies using Blockchain technology.
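The reason a blockchain helps reduce fraud is that each block stores the hash of the previous block, so changing an old record breaks every later link. Here is a minimal sketch of such a hash-linked ledger using Python's standard `hashlib`. It assumes SHA-256 and a simple JSON encoding of each block, uses hypothetical helper names, and leaves out consensus, signatures, and networking entirely.

```python
import hashlib
import json

GENESIS_PREV_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, data, prev_hash):
    """SHA-256 over a canonical JSON encoding of the block contents."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def add_block(chain, data):
    """Append a block that points at the hash of the current last block."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_PREV_HASH
    index = len(chain)
    chain.append(
        {
            "index": index,
            "data": data,
            "prev_hash": prev_hash,
            "hash": block_hash(index, data, prev_hash),
        }
    )


def is_valid(chain):
    """Check every link and recompute every hash."""
    prev_hash = GENESIS_PREV_HASH
    for block in chain:
        if block["prev_hash"] != prev_hash:
            return False
        expected = block_hash(block["index"], block["data"], block["prev_hash"])
        if block["hash"] != expected:
            return False
        prev_hash = block["hash"]
    return True


ledger = []
add_block(ledger, "alice pays bob 5")
add_block(ledger, "bob pays carol 2")
print(is_valid(ledger))  # True

tampered = [dict(block) for block in ledger]
tampered[0]["data"] = "alice pays bob 500"
print(is_valid(tampered))  # False: the stored hash no longer matches the data
```

Even if an attacker also recomputed the hash of the altered block, the next block's `prev_hash` would stop matching, so the whole tail of the chain would have to be rewritten.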

Data Visualization

Let us discuss leading Big Data Technologies that come under Data Visualization:

- Tableau: Tableau is one of the fastest and most powerful data visualization tools used in the business intelligence industry. It helps in analyzing data very quickly. Tableau helps in creating visualizations and insights in the form of dashboards and worksheets.

Tableau is developed and maintained by Tableau Software, which was founded in 2003 and went public in May 2013. It is written using multiple languages, such as Python, C, C++, and Java. Comparable business intelligence tools include Cognos, Qlik, and ORACLE Hyperion.

- Plotly: As the name suggests, Plotly is best suited for plotting or creating graphs and related components quickly and efficiently. It provides rich libraries and APIs for MATLAB, Python, Julia, Arduino, R, and Node.js, as well as a REST API. This makes it possible to build interactive, styled graphs in tools such as Jupyter Notebook and PyCharm.

Plotly was introduced in 2012 by the company Plotly. It is based on JavaScript. Paladins and Bitbank are some of the companies that are making good use of Plotly.

Emerging Big Data Technologies

Apart from the above mentioned big data technologies, there are several other emerging big data technologies. The following are some essential technologies among them:

- TensorFlow: TensorFlow combines multiple comprehensive libraries, flexible ecosystem tools, and community resources that help researchers implement the state of the art in Machine Learning. Besides, it allows developers to build and deploy machine learning-powered applications in specific environments.

TensorFlow was open-sourced in 2015 by the Google Brain team, and version 2.0 followed in 2019. It is mainly based on C++, CUDA, and Python. Companies like Google, eBay, Intel, and Airbnb are using this technology for their business requirements.

- Beam: Apache Beam consists of a portable API layer that helps build and maintain sophisticated parallel-data processing pipelines. Apart from this, it also allows the execution of built pipelines across a diversity of execution engines or runners.

Apache Beam was introduced in June 2016 by the Apache Software Foundation. It is written in Python and Java. Some leading companies like Amazon, ORACLE, Cisco, and VerizonWireless are using this technology.

- Docker: Docker is a tool developed to make it easier to create, deploy, and run applications by using containers. Containers help developers package an application together with all the components it requires, such as libraries and dependencies, and ship everything as a single package.

Docker was introduced in March 2013 by Docker Inc. It is based on the Go language. Companies like Business Insider, Quora, Paypal, and Splunk are using this technology.

- Airflow: Airflow is a workflow automation and scheduling system. It is mainly used to control and maintain data pipelines. Its workflows are designed as DAGs (Directed Acyclic Graphs) that consist of different tasks. Developers can also define workflows in code, which makes testing, maintenance, and versioning easier.

Airflow was created at Airbnb in 2014 and became a top-level Apache Software Foundation project in 2019. It is based on the Python language. Companies like Checkr and Airbnb are using this leading technology.
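The DAG idea itself can be sketched with Python's standard library, without installing Airflow. The example below assumes Python 3.9 or later for `graphlib`; the task names and the `run_pipeline` helper are hypothetical and do not reflect Airflow's own API. Each task lists the tasks it depends on, and a topological sort yields an order in which every task runs only after its dependencies.

```python
from graphlib import CycleError, TopologicalSorter

# Each key is a task; its value is the set of tasks that must finish first.
dependencies = {
    "extract": set(),
    "validate": {"extract"},
    "transform": {"extract"},
    "load": {"validate", "transform"},
}


def run_pipeline(dependencies, tasks):
    """Run every task after its dependencies and return the execution order."""
    executed = []
    for name in TopologicalSorter(dependencies).static_order():
        tasks[name]()
        executed.append(name)
    return executed


# Placeholder task bodies; a real pipeline would read, check, and write data here.
tasks = {name: (lambda name=name: print(f"running {name}")) for name in dependencies}
order = run_pipeline(dependencies, tasks)

# A cycle is not a valid workflow, and the sorter rejects it.
try:
    list(TopologicalSorter({"a": {"b"}, "b": {"a"}}).static_order())
except CycleError:
    print("cycle detected")
```

Here `validate` and `transform` do not depend on each other, so a scheduler is free to run them in either order or in parallel, while `load` always waits for both.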

- Kubernetes: Kubernetes is defined as a vendor-agnostic cluster and container management tool made open-source in 2014 by Google. It provides a platform for automation, deployment, scaling, and application container operations in the host clusters.

Kubernetes 1.0 was released in July 2015, when the project was donated to the Cloud Native Computing Foundation. It is written in the Go language. Companies like American Express, Pear Deck, PeopleSource, and Northwestern Mutual are making good use of this technology.

These are some of the emerging technologies. However, the list is not limited to them, because the big data ecosystem is constantly evolving. That is why new technologies keep arriving at a very fast pace, driven by the demands and requirements of the IT industry.
