Recursion in C++Recursion is a magical concept in the world of programming. It is a technique that allows a function to perform a fascinating feat - calling itself. Picture a function as if it were a magical creature looking into a mirror. When it sees its reflection, something magical happens. It creates another mirror, and then another, and it keeps going on like a chain reaction. Each mirror shows the same thing - the creature and its reflection. It's a bit like when you stand between two mirrors, and you see your image repeating again and again, but in this case, the function keeps making more mirrors of itself, like a never-ending magical mirror show. This self-replicating quality is what makes recursion both enigmatic and profoundly significant in the realm of programming. Defining Recursion:When a function is called within the same function, it is known as recursion in C++. The function which calls the same function is known as a recursive function. At its core, recursion is a mesmerizing concept that empowers a function to solve problems by breaking them down into smaller instances of the same problem. It is like breaking a puzzle down into smaller bits until you get to the simplest element that can be solved immediately. Consider the Fibonacci sequence, where each number is the sum of the two numbers before it. The nth Fibonacci number can be written as a function of the (n-1)th and (n-2)nd Fibonacci numbers. This recursive definition is elegant and reflects the mathematical nature of the problem. The Significance of Recursion:Recursion's significance in programming is nothing short of magical: Simplicity between Complexities: Recursion simplifies complex problems by dividing them into smaller, more understandable subproblems. It allows you to tackle intricate challenges with ease. Elegance and Clarity: Recursive solutions often result in elegant, readable code that closely resembles the problem's structure. Versatility Unleashed: Recursive algorithms are versatile; once you grasp the recursive approach, you can apply it to an array of problems, from searching and sorting to tree traversal and beyond. Efficiency and Optimization: In certain scenarios, recursion can lead to efficient solutions. For instance, divide-and-conquer algorithms divide a problem into smaller parts, often resulting in faster execution times. Direct vs. Indirect Recursion:The journey into the world of recursion introduces two intriguing paths: Direct Recursion: In this method, a function calls itself directly within its own body. It's a straightforward form of recursion, where a function unapologetically invokes itself. Example: Indirect Recursion: Indirect recursion is occurs when two or more functions or routines take turns calling each other in a loop. They keep passing control back and forth, like a game of tag. Eventually, one of these functions decides to break the loop by calling itself directly, which stops the back-and-forth and ends the process. It's a bit like a group of friends playing a game where they take turns tagging each other, and at some point, one friend decides to tag themselves to stop the game. Example: Direct and indirect recursion are two distinct facets of the recursive journey, each with its own allure. Direct recursion is straightforward, while indirect recursion weaves a complex web of function calls. Let us see a simple example of recursion. Base Case and Recursive Case:The Recursion Pillars: Base Case and Recursive Case Recursion is an interesting journey through a room of mirrors, where you meet layers of reflections. The base and recursive cases act as guiding features along this journey. They are the fundamental building pieces that keep infinite loops from occurring and provide structure to the recursive process. The Base Case: A Dead End Assume you're investigating a house of mirrors and come across a door labeled "Exit". In recursion, this door represents the base case. It is the point at which the recursive function decides to pause and begin unwinding the layers of calls it has made. The base case is like a safety net, ensuring that the recursion doesn't spiral into an infinite loop. Without it, the function would keep calling itself indefinitely, leading to a stack overflow error or an infinite loop. Therefore, the base case is essential in any recursive algorithm. For example, consider the calculation of the factorial of a number: In this example, when n reaches 0, the base case triggers, and the recursion stops. Without this base case, the function would continue decrementing n indefinitely. The Recursive Case: Breaking down the problem, you will notice as you travel around the house of mirrors that each room is a slightly altered reflection of the one before it. As you progress around the house of mirrors, you notice that each chamber is a reflection of the one before it but slightly altered. The recursive case comes into play here. In the example above, the recursive case in the factorial is defined as return n * factorial(n - 1). The difficulty of determining the factorial of n is reduced to determining the factorial of n - 1. This process continues until n reaches the base case of 0. The recursive case illustrates the essence of recursion: it involves breaking down a problem into smaller examples of the same problem until it can be solved directly. Recursion's brilliance and strength as a programming tool are due to the idea of self-reference. How Recursion Works:Flow of Control in Recursion:In a recursive function, the flow of control begins with an initial function call. As the function executes, it may encounter a recursive call to itself. This new call creates a separate instance of the function, which begins execution. This process continues until a base case is reached, at which point the calls start to return, each passing its result to the previous call. The flow of control moves back up the call stack until the original call completes and the final result is obtained. Creation and Management of Stack Frames:Each function call in a recursive chain creates a new stack frame in memory. This frame stores local variables, parameters, and the return address as the recursive calls pile up, and a stack-like structure forms, known as the call stack. When a call returns, its stack frame is removed, freeing up memory. The call stack ensures that the program can keep track of where to resume execution after each recursive call and prevents memory overflow. It's a crucial mechanism for managing the recursion process efficiently. Algorithm: StepsCertainly, here are the steps for using recursion in a function, presented concisely:

A mathematical InterpretationLet us provide a mathematical interpretation of how recursion works: Base Case (n₀): It defines a condition, often represented as n₀, where the recursion stops. It is the point at which the function returns a known result without further iteration. It can be stated mathematically as follows: F(n₀) = Result Recursive Case (n > n₀): It expresses the problem in terms of a smaller instance of itself. It involves a mathematical link between F(n) and F(n - 1), where n > n0. This relationship can be written as: F(n) = Function(F(n - 1)) Function Application: It implements the function that converts F(n - 1) to F(n). This function can be represented mathematically as: F(n) = g(F(n - 1)) Iteration: As the recursion proceeds, you pass over lesser values of n, applying the function g recursively until you reach the base case n0. Result Computation: In the base case n₀, the recursion stops, and the final result is computed directly using the given condition. Recursive vs. Iterative Solutions:Here's a comparison between recursion and iteration in table form:

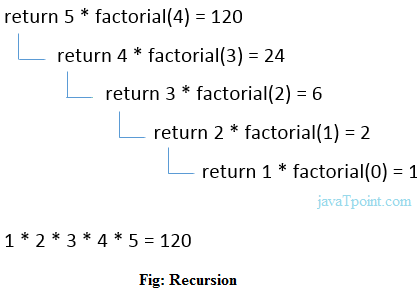

Memory Allocation in Recursive Functions:Recursive functions use memory in an unusual way, relying mostly on the call stack, which adheres to the Last-In-First-Out (LIFO) structure similar to the stack data structure. Stack Allocation: A new stack frame is constructed and pushed onto the call stack with each recursive call. This stack frame stores local variables, function parameters, and the return address. Local variables and function parameters are stack frame specific and not shared with other function calls. These variables' values are retained until the function call is done. Base Case Termination: When the function reaches the base case, it yields a value. This return value is passed back up the call stack, and the related stack frame is freed from memory. Base Condition in Recursion:In recursion, the base condition or base case is a critical element. It serves as the termination condition that stops the recursion and provides a solution to the simplest version of the problem. The base case is expressed in terms of smaller instances of the same problem, and it helps build up the solution for larger instances. For example, consider the factorial function: In this example, the base case is n <= 1, and it returns 1. For larger values of n, the problem is expressed in terms of n and factorial(n - 1). This recursive definition continues until the base case is reached, allowing the solution to be built step by step. C++ Recursion ExampleLet us see an example to print factorial numbers using recursion in C++ language. C++ code to implement factorial Output Enter any number: 5 Factorial of a number We can understand the above program of recursive method call by the figure given below:

C++ code to implement Fibonacci series Output Enter a Number: 5 0 1 1 2 3 5 Advantages and disadvantages of Recursion in C++There are several advantages and disadvantage of recursion in C++. Some main advantages and disadvantages of recursion are as follows: Advantages of Recursion in C++:

Disadvantages of Recursion in C++:

Difference between direct and indirect recursionDirect Recursion in C++:Direct recursion occurs when a function calls itself directly. Here are some key points about direct recursion:

Indirect Recursion in C++:Indirect recursion occurs when two or more functions call each other in a cycle. Here are some important aspects to remember regarding indirect recursion:

Real-world issues and recursion applicationsTree Recursion: Tree recursion occurs when a function makes multiple recursive calls in its recursive case. It creates a branching pattern similar to a tree structure. Each branch represents a separate call sequence. Managing base cases and understanding the branching behavior is important to ensure termination and correct results. Dynamic Programming and Memoization: Dynamic programming is a technique that uses recursion and memoization (caching) to avoid redundant calculations. By storing the results of function calls in a data structure and looking them up when needed, you can significantly improve the efficiency of recursive algorithms. Recursive Data Structures: Recursion is not limited to functions; it can also apply to data structures. For instance, a linked list or a binary tree can be defined recursively. In this context, a linked list node contains a reference to the next node, which is another linked list node. Recursion with Arrays and Strings: Recursion can be applied to arrays and strings as well. For example, you can use recursion to reverse an array, find the maximum element, or perform string manipulation tasks like palindrome checking and pattern matching. Mutually Recursive Data Structures: In some cases, you might encounter data structures that are mutually recursive, where two or more types reference each other in their definitions. Handling such structures requires careful design to ensure proper initialization and prevent infinite loops. Infinite Recursion: Infinite recursion occurs when a recursive function doesn't have a proper base case, or the base case is never reached due to incorrect logic. It leads to an infinite loop of function calls, causing the program to crash due to a stack overflow. Tail Call Optimization (TCO): Some programming languages and compilers support tail call optimization. This optimization reduces the stack space used for tail-recursive functions, making them as efficient as iterative solutions. However, C++ does not guarantee TCO, so it's important to be mindful of stack usage in recursive functions. Handling Deep Recursion: When dealing with deep recursion (a large number of nested calls), consider strategies to reduce memory usage. Using a stack, you can use techniques like memoization, iterative solutions, or converting recursive solutions to iterative ones. Divide and Conquer: The divide-and-conquer technique involves breaking down a problem into smaller sub-problems, solving them recursively, and then combining their solutions to solve the original problem. Algorithms like merge sort and quicksort use this approach. Backtracking: Backtracking is a technique that involves trying out various possibilities to solve a problem and reverting when a solution is not feasible. Problems like the "Eight Queens" puzzle and the "Sudoku" solver often utilize backtracking through recursion. Recursion in Object-Oriented Programming: In object-oriented programming, recursion can be used to traverse complex object structures like trees and graphs. Recursive methods can navigate through object relationships and perform operations at each level. Nested Recursion:Nested recursion involves a function that calls itself with the result of a previous recursive call. It can lead to complex behavior as each recursive call generates multiple new calls. Proper handling of base cases is crucial in nested recursion to prevent infinite loops and ensure correct results.

Next TopicC++ Storage Classes

|

For Videos Join Our Youtube Channel: Join Now

For Videos Join Our Youtube Channel: Join Now

Feedback

- Send your Feedback to [email protected]

Help Others, Please Share