Difference between Ammeter & Voltmeter

Ammeter

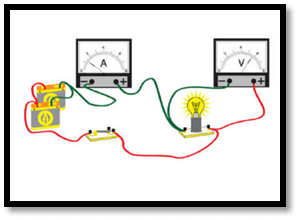

A device used to measure the current flowing through a circuit is called an ammeter, short for "ampere meter". Electric current is measured in amperes (A). For direct measurement, the ammeter is connected in series with the part of the circuit whose current is to be measured. An ammeter usually has a very low resistance so that it does not cause a significant voltage drop in the circuit being measured. Instruments that measure currents in the milliampere or microampere range are called milliammeters or microammeters. In circuit diagrams, an ammeter is usually represented by the letter "A".

The earliest ammeters were laboratory instruments that relied on the Earth's magnetic field to operate. By the late 19th century, improved instruments had been developed that could be mounted in any orientation and allowed accurate measurements in electric power systems.

For relatively small currents (up to a few amperes), the entire circuit current can pass through the ammeter. For larger direct currents, a shunt resistor typically carries most of the circuit current, and only a small, precisely known fraction flows through the meter movement. In alternating-current circuits, a current transformer can conveniently supply a small current, such as 1 or 5 amperes, to drive the instrument, even when the primary current being measured is much larger (up to thousands of amperes). When a shunt or current transformer is used, the indicating meter can be placed wherever convenient, without running heavy circuit conductors to the point of observation. In AC circuits, the current transformer also isolates the meter from the high voltage of the primary circuit. A shunt provides no such isolation for a direct-current ammeter.
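The shunt arrangement described above works as a current divider: the shunt and the meter movement are connected in parallel, so they share the same voltage, and the shunt must be sized so that only the movement's full-scale current flows through the movement when the full range current flows in the circuit. The following is a minimal Python sketch of that calculation, assuming a hypothetical movement with a 1 mA full-scale current and a 50 Ω internal resistance; these values and the helper names `shunt_resistance`, `divide_current` and `parallel` are illustrative assumptions, not taken from the text.

```python
def shunt_resistance(full_scale_current, movement_current, movement_resistance):
    """Shunt resistance that extends a meter movement to a larger current range.

    The shunt and the movement are in parallel, so both have the same voltage:
        movement_current * movement_resistance
            == (full_scale_current - movement_current) * shunt
    """
    if not 0 < movement_current < full_scale_current:
        raise ValueError("need 0 < movement_current < full_scale_current")
    return movement_current * movement_resistance / (full_scale_current - movement_current)


def divide_current(total_current, movement_resistance, shunt):
    """Split a total current between the movement and the shunt (current divider).

    Returns (current through movement, current through shunt).
    """
    movement = total_current * shunt / (shunt + movement_resistance)
    return movement, total_current - movement


def parallel(r1, r2):
    """Equivalent resistance of two resistors in parallel."""
    return r1 * r2 / (r1 + r2)


if __name__ == "__main__":
    # Hypothetical movement: 1 mA full-scale deflection, 50 ohm internal resistance.
    r_movement = 50.0
    i_movement = 0.001
    i_range = 10.0  # desired ammeter range: 10 A (assumed)

    r_shunt = shunt_resistance(i_range, i_movement, r_movement)
    i_m, i_s = divide_current(i_range, r_movement, r_shunt)
    r_ammeter = parallel(r_movement, r_shunt)  # resistance the circuit "sees"

    print(f"shunt resistance:   {r_shunt * 1000:.4f} mOhm")
    print(f"movement / shunt:   {i_m * 1000:.3f} mA / {i_s:.3f} A")
    print(f"ammeter resistance: {r_ammeter * 1000:.3f} mOhm")
    print(f"drop at full scale: {i_range * r_ammeter * 1000:.1f} mV")
```

With these assumed values the shunt comes out at about 5.0005 mΩ, the combined ammeter resistance is 5 mΩ, and the voltage drop at 10 A is only 50 mV, which illustrates why an ammeter's resistance must be kept very low.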
In high-voltage DC circuits, it may be possible to install the ammeter on the "return" side of the circuit, which may be at a low potential relative to earth.

Voltmeter

A voltmeter is a device that measures the difference in voltage (potential difference) between two points in an electric circuit. It is connected in parallel with the component or section across which the voltage is to be measured. It usually has a high resistance, so it draws very little current from the circuit. Analog voltmeters can be built from a galvanometer in series with a resistor; they move a pointer across a scale in proportion to the measured voltage. Meters that use amplifiers can measure microvolts or less. Digital voltmeters use an analog-to-digital converter to display the voltage as a numeric value.

Voltmeters come in a wide variety of designs: some are powered independently (for example, by a battery), and some are powered by the source of the voltage being measured. Instruments permanently mounted in a panel are used to monitor generators and other stationary equipment. Portable instruments, usually multimeters that can also measure current and resistance, are standard test tools in electrical and electronic work. A suitably calibrated meter can display any quantity that can be converted into a voltage, such as pressure, temperature, flow, or level in a process plant.

General-purpose analog voltmeters, used for voltages ranging from a fraction of a volt to several thousand volts, may have an accuracy of a few percent of full scale. Digital meters can be made with higher accuracy, often better than 1%. Specially calibrated test instruments achieve higher accuracies still, and laboratory instruments can measure to accuracies of a few parts per million. Part of the challenge of making an accurate voltmeter is calibrating it to verify its accuracy. In laboratories, the Weston cell is used as a standard voltage for precision work, and precision voltage references based on electronic circuits are also available.

Difference between Ammeter and Voltmeter

The main differences between an ammeter and a voltmeter are as follows:
