Caching Mechanism in Java

Caching is the process of storing data in a fast storage layer (cache memory) so that it can be accessed quickly later. Its main purpose is to reduce the time needed to access specific data, so a cache holds data that is likely to be needed again. Caching exists because reading data from persistent storage (drives such as HDDs and SSDs) takes considerable time and slows down processing.

Caching therefore reduces the time needed to acquire data. Cache memory is a high-speed data storage layer that reduces the need to access the slower underlying storage layer. It is implemented with fast-access hardware such as RAM.

A cache makes it possible to reuse previously retrieved or computed data. Whenever hardware or software requests specific data, the cache is searched first. If the data is found, a cache hit occurs; if it is not found, a cache miss occurs.
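The hit and miss behaviour described above can be sketched in a few lines of Java. This is only a minimal illustration: `SimpleCache` and its `source` function are hypothetical names invented for this example, and the lambda standing in for the slow data source is an assumption.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical illustration class; not part of any library.
class SimpleCache<K, V> {
    private final Map<K, V> store = new HashMap<>();
    private final Function<K, V> source; // slow lookup used only on a miss
    private int hits;
    private int misses;

    SimpleCache(Function<K, V> source) {
        this.source = source;
    }

    V get(K key) {
        V value = store.get(key);
        if (value != null) {
            hits++;                 // cache hit: served from memory
            return value;
        }
        misses++;                   // cache miss: fall back to the slow source
        value = source.apply(key);
        if (value != null) {
            store.put(key, value);  // remember the value for the next request
        }
        return value;
    }

    int hits() { return hits; }
    int misses() { return misses; }

    public static void main(String[] args) {
        SimpleCache<Integer, String> cache = new SimpleCache<>(id -> "user-" + id);
        cache.get(1); // miss
        cache.get(1); // hit
        cache.get(2); // miss
        System.out.println("hits=" + cache.hits() + ", misses=" + cache.misses());
        // prints: hits=1, misses=2
    }
}
```

The ratio of hits to total requests (the hit rate) is a common way to judge whether a cache is paying for itself.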

Why is caching important?

- It plays a vital role in improving system performance.

- It reduces overall access time because frequently used data is stored locally.

- Caching is practically unavoidable in modern computing because high-performance systems depend on it.

- It often removes the need to make a new request to the original data source.

- It avoids reprocessing of the data.

Use Cases of Caching

- Caching is mainly used to speed up database applications. Here, the cache serves part of the data in place of the database, which removes the latency that comes from frequently accessing the same data. This use case appears wherever data is accessed in large volume, for instance on high-traffic dynamic websites.

- Another use case is query acceleration. Here, the cache stores the result of a complex query. Queries that involve ordering and grouping can take considerable time to execute. If the same query is executed repeatedly, serving the stored result from the cache gives a faster response.
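As a rough sketch of the query acceleration use case, the class below stores each query result under its SQL text, so a repeated query is answered from memory. `QueryResultCache` and `runQuery` are hypothetical names; `runQuery` only simulates an expensive query and does not talk to a real database. The example assumes Java 9 or later for `List.of`.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical illustration class; not part of any library.
class QueryResultCache {
    private final Map<String, List<String>> results = new ConcurrentHashMap<>();
    final AtomicInteger executions = new AtomicInteger(); // counts real executions

    // Stand-in for an expensive ordering/grouping query (simulated, no real database).
    private List<String> runQuery(String sql) {
        executions.incrementAndGet();
        return List.of("row-1 for " + sql, "row-2 for " + sql);
    }

    // Runs the query only if no result is stored for this SQL text yet.
    List<String> query(String sql) {
        return results.computeIfAbsent(sql, this::runQuery);
    }

    // Drops a stored result, for example after the underlying tables change.
    void invalidate(String sql) {
        results.remove(sql);
    }
}
```

Calling `query` twice with the same SQL text executes `runQuery` once; after `invalidate`, the next call executes it again. Deciding when to invalidate is the hard part in practice, since a stored result can become stale when the underlying data changes.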

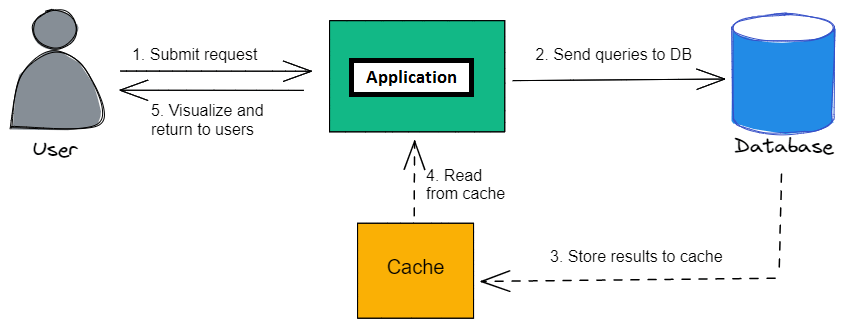

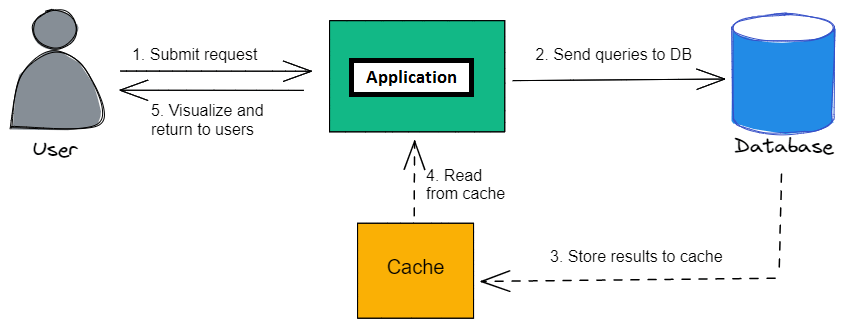

How does the caching mechanism work?

The Java Object Cache manages Java objects within a process, across processes, or on a local disk. The Java Object Cache provides a powerful, flexible, and easy-to-use service that significantly improves Java performance by managing local copies of Java objects. There are very few restrictions on the types of Java objects that can be cached or on the original source of the objects. Programmers use the Java Object Cache to manage objects that, without cache access, are expensive to retrieve or to create.

The Java Object Cache is easy to integrate into new and existing applications. Objects can be loaded into the object cache, using a user-defined object, the CacheLoader, and can be accessed through a CacheAccess object. The CacheAccess object supports local and distributed object management. Most of the functionality of the Java Object Cache does not require administration or configuration. Advanced features support configuration using administration application programming interfaces (APIs) in the Cache class. Administration includes setting configuration options, such as naming local disk space or defining network ports. The administration features allow applications to fully integrate the Java Object Cache.

Each cached Java object has a set of associated attributes that control how the object is loaded into the cache, where the object is stored, and how the object is invalidated. Cached objects are invalidated based on time or an explicit request (notification can be provided when the object is invalidated). Objects can be invalidated by group or individually.
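The loading and invalidation ideas above can be illustrated with plain Java. The sketch below does not use the Java Object Cache API: `ExpiringCache`, its `loader` function, and the injected `clock` are hypothetical names chosen for this example. It shows a user-supplied loader, time-based invalidation, and invalidation on explicit request.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;
import java.util.function.LongSupplier;

// Hypothetical illustration class; this is NOT the Java Object Cache API.
class ExpiringCache<K, V> {
    private static final class Entry<V> {
        final V value;
        final long expiresAt; // time in milliseconds at which the entry becomes invalid
        Entry(V value, long expiresAt) {
            this.value = value;
            this.expiresAt = expiresAt;
        }
    }

    private final Map<K, Entry<V>> entries = new HashMap<>();
    private final Function<K, V> loader; // creates or retrieves the object on demand
    private final long ttlMillis;        // how long a loaded object stays valid
    private final LongSupplier clock;    // e.g. System::currentTimeMillis

    ExpiringCache(Function<K, V> loader, long ttlMillis, LongSupplier clock) {
        this.loader = loader;
        this.ttlMillis = ttlMillis;
        this.clock = clock;
    }

    synchronized V get(K key) {
        long now = clock.getAsLong();
        Entry<V> entry = entries.get(key);
        if (entry == null || now >= entry.expiresAt) {
            // Missing or expired: load the object again and store it with a fresh deadline.
            entry = new Entry<>(loader.apply(key), now + ttlMillis);
            entries.put(key, entry);
        }
        return entry.value;
    }

    // Invalidation on explicit request.
    synchronized void invalidate(K key) {
        entries.remove(key);
    }
}
```

A typical construction would be `new ExpiringCache<>(key -> expensiveLookup(key), 60_000, System::currentTimeMillis)`, where `expensiveLookup` stands for whatever slow operation the application wants to avoid repeating. Passing the clock in makes the expiry logic easy to test.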

Types of Caching

- Database Caching: Most databases already include some level of caching. This internal cache is used to avoid repeating queries, so the database can return the result of a recently executed query immediately. The most commonly used database caching approach stores key-value pairs in a hash table.

- Memory Caching: RAM is used directly to store the cached data, which is faster than common disk-based database storage (hard drives). This method is based on a set of key-value pairs: the key is a unique identifier and the value is the cached data. It is fast, efficient, and easy to implement.

- Web Caching: It is divided into two parts:

  - Web client caching: This caching happens on the client side and is used by virtually all internet users. It is also known as web browser caching. It starts when the browser loads a web page: the browser stores page resources such as images, text, media files, and scripts. When the same page is requested again, the browser can take the resources from the cache, which is faster than downloading them from the internet.

  - Web server caching: Here, resources are saved on the server side so that they can be reused. This approach is helpful for dynamic web pages and may be less useful for static web pages. It reduces server load and increases the speed of page delivery.

- CDN Caching: Content Delivery Network caching stores resources such as scripts, stylesheets, media files, and web pages on proxy servers. The proxy server acts as a gateway between the user and the origin server. When the user requests a resource, the proxy server checks whether it has a copy. If a copy is found, the resource is delivered to the user; otherwise, the request is processed by the origin server. This reduces network latency and the number of calls to the origin server.
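To make the memory caching idea concrete, here is a small in-memory key-value cache built on `java.util.LinkedHashMap`. Because RAM is limited, the sketch evicts the least recently used entry once a fixed capacity is exceeded. The eviction policy and the class name `LruCache` are assumptions made for this example; the text above does not prescribe a particular policy.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical illustration class: a bounded in-memory key-value cache.
class LruCache<K, V> extends LinkedHashMap<K, V> {
    private final int capacity;

    LruCache(int capacity) {
        // accessOrder = true keeps entries ordered from least to most recently used.
        super(16, 0.75f, true);
        this.capacity = capacity;
    }

    // Called by LinkedHashMap after each insertion; returning true evicts the eldest entry.
    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > capacity;
    }

    public static void main(String[] args) {
        LruCache<String, Integer> cache = new LruCache<>(2);
        cache.put("a", 1);
        cache.put("b", 2);
        cache.get("a");    // "a" is now the most recently used entry
        cache.put("c", 3); // capacity exceeded, so "b" is evicted
        System.out.println(cache.keySet()); // prints: [a, c]
    }
}
```

This class is not thread-safe. A multi-threaded application would need external synchronization, for example by wrapping the cache with `Collections.synchronizedMap`.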

Challenges with Caching

- Local Cache: Because locally cached resources differ from system to system, a cache coherence problem may occur, which can reduce caching efficiency.

- Cache Coherence problem: When one processor modifies its local copy of data that is shared among several caches, keeping the numerous local caches synchronized becomes a challenge.

- Cache penetration: When a user queries for data, the request first goes to the cache. If the data is present, it is returned to the user; if not, the request is forwarded to the main data store (for example, the database). If the data is not present there either, the result is NULL, and every such request passes through the cache to the main data store. This is cache penetration. The challenge can be overcome by using a Bloom filter.

- Cache avalanche: If the cache fails while a large volume of requests is arriving, the database is put under tremendous stress and may crash. The challenge can be overcome by using a cache cluster and Hystrix.
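The Bloom filter mentioned for cache penetration can be sketched as follows. A Bloom filter answers "definitely not present" or "possibly present", so a request for a key that was never stored can be rejected before it reaches the cache or the database. `SimpleBloomFilter`, its sizing parameters, and its hash mixing are simplified assumptions for illustration; a production system would normally use a tested library implementation.

```java
import java.util.BitSet;

// Hypothetical illustration class; a simplified Bloom filter for String keys.
class SimpleBloomFilter {
    private final BitSet bits;
    private final int size;      // number of bits in the filter
    private final int hashCount; // number of bit positions set per key

    SimpleBloomFilter(int size, int hashCount) {
        this.bits = new BitSet(size);
        this.size = size;
        this.hashCount = hashCount;
    }

    // Derives the i-th bit position from two base hashes (double hashing).
    private int index(String key, int i) {
        int h1 = key.hashCode();
        int h2 = Integer.rotateLeft(h1 * 0x9E3779B9, 15) | 1; // simple second hash, kept odd
        return Math.floorMod(h1 + i * h2, size);
    }

    void add(String key) {
        for (int i = 0; i < hashCount; i++) {
            bits.set(index(key, i));
        }
    }

    // false means the key was definitely never added; true means it may have been added.
    boolean mightContain(String key) {
        for (int i = 0; i < hashCount; i++) {
            if (!bits.get(index(key, i))) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        SimpleBloomFilter knownIds = new SimpleBloomFilter(1024, 3);
        knownIds.add("user:1");
        knownIds.add("user:2");
        // Keys that were added are never reported as absent.
        System.out.println(knownIds.mightContain("user:1")); // prints: true
        // A key that was never added is usually reported as absent,
        // but a false positive is possible, so no fixed output is claimed here.
        System.out.println(knownIds.mightContain("user:999"));
    }
}
```

In use, every valid key is added to the filter when it is written to the database. A lookup first calls `mightContain`; if it returns `false`, the application returns "not found" immediately and skips both the cache and the database.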

Advantages of Cache

- A cache reduces the time taken to process a query and minimizes round trips for the same data.

- Cache decreases the load on the server.

- Cache increases the efficiency of system hardware.

- Web page downloading/rendering speed is increased with the help of caching.

Disadvantages of Cache

- Cache algorithms are hard and complex to implement.

- Cache increases the complexity of an application.

- High maintenance cost.

Applications of Caching

The caching mechanism is used in the following industries:

- Health and wellness: An effective caching architecture delivers fast responses, lowers overall spending, and scales as usage grows.

- Advertising technology: In real-time bidding software, a millisecond can mean the difference between placing a bid on time and the bid becoming irrelevant. This requires very fast retrieval of bidding information from the database. Database caching, which can retrieve bidding data in milliseconds or less, is a good way to achieve that level of speed.

- Mobile: With appropriate caching solutions, mobile apps can deliver the performance users need, scale with demand, and lower total cost.

- Gaming and media: Caching helps keep a game running smoothly by delivering sub-millisecond query responses for frequently requested data.

- Ecommerce: Well-executed cache management is a strategic part of response time, which can be the key differentiator between making a sale and losing a customer.

Conclusion

Caching mechanisms in Java applications are powerful tools for enhancing performance, reducing resource consumption, and improving the user experience. By choosing the right caching strategy, implementing cache policies, and following best practices, we can efficiently integrate caching into our Java applications. When used judiciously, caching can be a game-changer in optimizing the performance of our Java applications, making them faster, more efficient, and ultimately more user-friendly.
