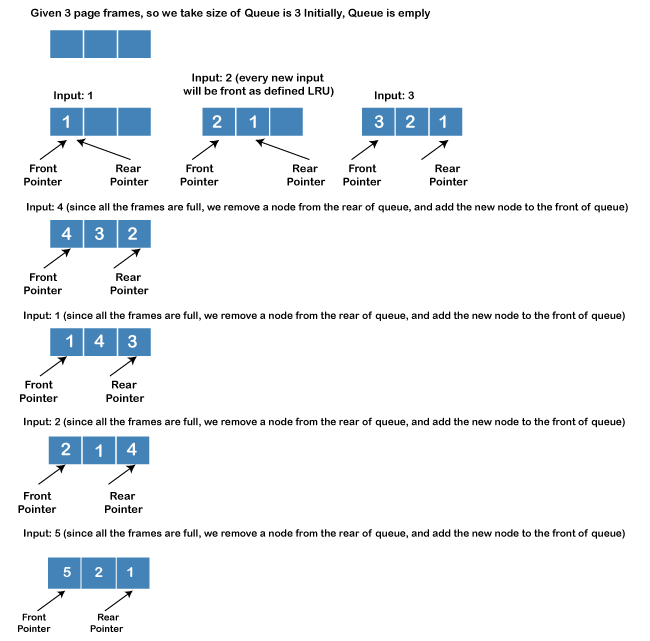

LRU Cache Implementation In Java

The term LRU Cache stands for Least Recently Used Cache. An LRU cache has a fixed size (capacity) and lets the user store and retrieve entries with the get() and put() methods. When the cache is full and a put() operation adds a new entry, the cache removes the least recently used entry to make room for it. In this section of Java, we give a brief introduction to the LRU cache, discuss its implementation in Java, and look at the ways through which we can achieve an LRU cache.

What is LRU Cache

A cache is a part of computer memory that is used to store frequently used data temporarily. Because the size of the cache memory is fixed, there must be a policy for removing unwanted data so that new data can be stored. This is where LRU comes in: LRU is a cache replacement algorithm that frees memory space for new data by removing the least recently used data.

Implementing LRU Cache in Java

To implement an LRU cache in Java, we combine the following two data structures:

- Queue: Using a doubly-linked list, one can implement a queue whose maximum size equals the cache size (the total number of frames available). The most recently used pages are kept near the front end of the doubly linked list, and the least recently used pages near the rear end.
- Hash: A hash (map) whose key is the page number and whose value is the address of the corresponding queue node.

Understanding LRU

Whenever a user references a page, there are two cases. If the page already exists in memory, detach its node from the list and bring it to the front of the queue. If the page does not exist in memory, it must first be brought into memory.
To do so, insert a new node at the front of the queue and then store the address of that node in the hash. If the queue is already full (all frames are occupied), first remove the node at the rear end (and delete its entry from the hash), then add the new node at the front of the queue.

Example of LRU

Consider the following reference string: 1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5. Using the LRU page replacement algorithm with 3 page frames, we can find the number of page faults. The diagram below shows how the LRU algorithm is applied to count the page faults:
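
The page-fault count for this reference string can also be checked with a short simulation. The sketch below is illustrative rather than the article's own code: the class `LruPageFaults` and method `countPageFaults` are hypothetical names, and it uses `java.util.ArrayDeque` as the queue (front = most recently used, rear = least recently used) with a linear `contains` lookup instead of a separate hash, which is fine for a small demo. For the reference string above with 3 frames it should report 10 page faults: the first seven references all miss, 1 and 2 then hit, and 3, 4, 5 miss again.

```java
import java.util.ArrayDeque;
import java.util.Deque;

public class LruPageFaults {

    // Hypothetical helper: counts page faults under LRU replacement.
    // Front of the deque = most recently used page, rear = least recently used.
    // Lookups are linear here (no hash), which is acceptable for a small demo.
    static int countPageFaults(int[] references, int frames) {
        Deque<Integer> queue = new ArrayDeque<>();
        int faults = 0;
        for (int page : references) {
            if (queue.contains(page)) {
                // Hit: detach the page and bring it to the front.
                queue.removeFirstOccurrence(page);
                queue.addFirst(page);
            } else {
                // Miss (page fault): if all frames are full, evict from the rear,
                // then place the new page at the front.
                faults++;
                if (queue.size() == frames) {
                    queue.removeLast();
                }
                queue.addFirst(page);
            }
        }
        return faults;
    }

    public static void main(String[] args) {
        int[] references = {1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5};
        System.out.println("Page faults: " + countPageFaults(references, 3)); // Page faults: 10
    }
}
```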

Implementing LRU Cache via Queue

To implement the LRU cache via a queue, we use a doubly linked list. Although the code is fairly long, it is the basic implementation of an LRU cache. Below is the code:
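
A minimal sketch of the queue-plus-hash design described earlier, assuming generic keys and values: a `HashMap` maps each key to its node, and a doubly linked list with two sentinel nodes keeps entries ordered from most recently used (just after `head`) to least recently used (just before `tail`). The names `LRUCacheDemo`, `LRUCache`, `unlink`, and `addToFront` are hypothetical and not taken from the original listing. Both `get` and `put` run in O(1) time.

```java
import java.util.HashMap;
import java.util.Map;

public class LRUCacheDemo {

    // Hypothetical class: fixed-capacity LRU cache built from a HashMap
    // (key -> list node) and a doubly linked list ordered by recency.
    static class LRUCache<K, V> {
        private static final class Node<K, V> {
            K key;
            V value;
            Node<K, V> prev, next;
            Node(K key, V value) { this.key = key; this.value = value; }
        }

        private final int capacity;
        private final Map<K, Node<K, V>> map = new HashMap<>();
        // Sentinels: head.next is the most recent entry, tail.prev the least recent.
        private final Node<K, V> head = new Node<>(null, null);
        private final Node<K, V> tail = new Node<>(null, null);

        LRUCache(int capacity) {
            if (capacity <= 0) throw new IllegalArgumentException("capacity must be positive");
            this.capacity = capacity;
            head.next = tail;
            tail.prev = head;
        }

        // Returns the value (or null if absent) and marks the key as most recently used.
        V get(K key) {
            Node<K, V> node = map.get(key);
            if (node == null) return null;
            unlink(node);
            addToFront(node);
            return node.value;
        }

        // Inserts or updates a key; evicts the least recently used entry when full.
        void put(K key, V value) {
            Node<K, V> node = map.get(key);
            if (node != null) {
                node.value = value;
                unlink(node);
                addToFront(node);
                return;
            }
            if (map.size() == capacity) {
                Node<K, V> lru = tail.prev; // rear of the queue
                unlink(lru);
                map.remove(lru.key);
            }
            node = new Node<>(key, value);
            map.put(key, node);
            addToFront(node);
        }

        // Detaches a node from the list.
        private void unlink(Node<K, V> node) {
            node.prev.next = node.next;
            node.next.prev = node.prev;
        }

        // Inserts a node right after the head sentinel (most recently used position).
        private void addToFront(Node<K, V> node) {
            node.next = head.next;
            node.prev = head;
            head.next.prev = node;
            head.next = node;
        }
    }

    public static void main(String[] args) {
        LRUCache<Integer, String> cache = new LRUCache<>(2);
        cache.put(1, "one");
        cache.put(2, "two");
        cache.get(1);                      // key 1 becomes most recently used
        cache.put(3, "three");             // evicts key 2, the least recently used
        System.out.println(cache.get(2));  // null
        System.out.println(cache.get(1));  // one
        System.out.println(cache.get(3));  // three
    }
}
```

The sentinel nodes are a design choice that removes all null checks from `unlink` and `addToFront`, since every real node always has a non-null `prev` and `next`.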

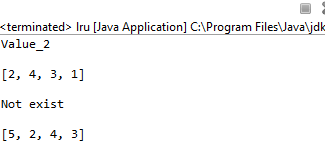

Executing the above code produces the following output:

Implementing LRU Cache using LinkedHashMap

A LinkedHashMap is similar to a HashMap, but it also maintains a predictable iteration order: by default, the order in which entries were inserted, or, if it is constructed with its accessOrder flag set to true, the order in which entries were last accessed. This feature makes the LRU cache implementation simple and short. A plain HashMap does not guarantee any iteration order, so we would need additional code to track which entries were used least recently; with LinkedHashMap, that extra code is unnecessary. Below is the code that implements an LRU cache using LinkedHashMap:
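
A minimal sketch, assuming the standard `java.util.LinkedHashMap` API: the three-argument constructor with `accessOrder = true` keeps entries ordered from least to most recently accessed, and overriding the protected `removeEldestEntry` hook evicts the eldest (least recently used) entry after a `put` pushes the size past capacity. The class names `LinkedHashMapLruDemo` and `LRUCache` and the initial capacity and load factor values are illustrative assumptions, not the original listing.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class LinkedHashMapLruDemo {

    // Hypothetical class: LRU cache on top of LinkedHashMap.
    // accessOrder = true makes get()/put() move an entry to the most recently
    // used end; removeEldestEntry evicts the least recently used entry.
    static class LRUCache<K, V> extends LinkedHashMap<K, V> {
        private final int capacity;

        LRUCache(int capacity) {
            super(16, 0.75f, true); // initial capacity, load factor, accessOrder
            this.capacity = capacity;
        }

        @Override
        protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
            // Called after each insertion; returning true removes the eldest entry.
            return size() > capacity;
        }
    }

    public static void main(String[] args) {
        LRUCache<Integer, String> cache = new LRUCache<>(3);
        cache.put(1, "one");
        cache.put(2, "two");
        cache.put(3, "three");
        cache.get(1);                        // key 1 moves to the most recently used end
        cache.put(4, "four");                // evicts key 2, the least recently used
        System.out.println(cache.keySet());  // [3, 1, 4]
    }
}
```

Note that with access ordering, even a `get` call modifies the map's internal order, so this class is not thread-safe without external synchronization.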

Executing the above code produces the following output:

The output shows that we get the expected result with far fewer lines of code than the queue-based version above. In these two ways, we can implement an LRU cache in Java.
