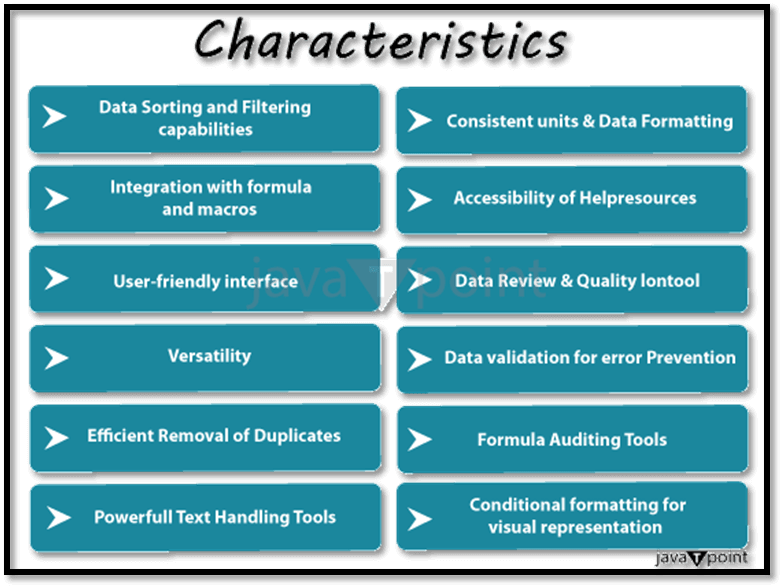

Data Cleaning in Microsoft Excel

Data cleaning is a crucial part of data analysis: it acts as the gatekeeper that ensures the accuracy and reliability of a dataset. As more decisions are driven by data, the need for clean, error-free data has never been greater. Microsoft Excel, with its wide range of tools and functions, offers a versatile platform for careful data cleaning. In this tutorial, we take a detailed look at data cleaning in the Excel environment. From handling duplicates to addressing missing values and correcting errors, we will cover a range of techniques and strategies for systematically cleaning and organizing our data. Each step prepares the data for the analyses and insights that follow.

What is meant by the term Data cleaning in Microsoft Excel?

Data cleaning, also known as data cleansing or scrubbing, is like giving our dataset a thorough checkup. A dataset is a collection of information, but it is rarely in perfect shape when we first encounter it. There may be duplicate records, some information may be missing, formats may be inconsistent, and there may be mistakes that distort our analysis. Data cleaning is the process of fixing these issues: tidying up the dataset so that it is accurate and ready for us to analyze and draw meaningful conclusions from.
It is like going through a checklist and ensuring that everything is where it should be and looks the way it is supposed to. This attention to detail ensures that we can trust the results when we analyze our data.

What are the key characteristics of using Data cleaning in Microsoft Excel?

The key characteristics associated with data cleaning in Microsoft Excel are as follows:

1) User-Friendly Interface: Microsoft Excel provides an intuitive, user-friendly interface, making it accessible even to those with limited technical expertise. The menu-driven options and familiar spreadsheet layout simplify the data cleaning process.
2) Versatility of Functions: Microsoft Excel offers a diverse set of functions, from basic arithmetic to advanced statistical operations. This versatility lets users apply functions to data cleaning tasks such as handling errors, manipulating text, and managing duplicates.
3) Powerful Text Handling Tools: Microsoft Excel handles text data well. The "Text to Columns" feature and functions such as PROPER, UPPER, and LOWER let users clean and standardize text, addressing issues such as inconsistent formatting and capitalization.
4) Data Sorting and Filtering Capabilities: Sorting and filtering are instrumental in organizing data. These features help to identify and handle duplicates, and to view and manipulate specific subsets of the data.
5) Conditional Formatting for Visual Inspection: Conditional formatting lets users visually highlight data that meets defined criteria. This helps to identify outliers or patterns quickly during data cleaning.
6) Formula Auditing Tools: Microsoft Excel provides tools such as "Trace Precedents" and "Trace Dependents" to audit and validate formulas. These tools help to ensure that calculations are accurate and to identify potential errors in complex formulas.
7) Data Validation for Error Prevention: Data validation rules help to prevent errors during data entry. By specifying criteria for cell entries, users can minimize the risk of introducing inaccuracies, which leads to cleaner datasets from the start.
8) Consistent Units and Date Formatting: "Text to Columns" and the formatting options help to standardize units of measurement and date/time formats. Consistency in these aspects is crucial for accurate analysis and reporting.
9) Efficient Removal of Duplicates: The "Remove Duplicates" feature simplifies identifying and eliminating duplicate entries, which streamlines data cleaning and improves dataset reliability.
10) Integration with Formulas and Macros: Excel lets users create custom formulas and automate repetitive tasks with macros. This makes data cleaning more efficient, especially for large datasets.
11) Data Review and Quality Control: The spreadsheet format makes it easy to review cleaned data manually. Users can scroll through, compare, and validate data to ensure that it matches expectations and business requirements.
12) Accessibility of Help Resources: Microsoft Excel has extensive online help resources, tutorials, and an active community that can assist users who run into difficulties during data cleaning. This support eases the learning curve for anyone looking to master data cleaning in Excel.

Together, these characteristics make Microsoft Excel a powerful and accessible tool for data cleaning, suited to users with varying levels of expertise in data management and analysis.

What are the Data Cleaning Steps and the Techniques?

There are six basic steps in the data cleaning process that make sure our data is ready to use.

Step 1: Remove irrelevant data
Step 2: Deduplicate the data
Step 3: Fix structural errors
Step 4: Deal with missing data
Step 5: Filter out data outliers
Step 6: Validate the data

Now let us look at each step in more detail:

1. Remove irrelevant data

First, we need to work out what analyses we will be running and what our downstream needs are. Which questions do we want to answer, and which problems do we want to solve? With that in mind, we take a good look at our data and decide what is relevant and what we may not need. Filter out data or observations that are not relevant to our downstream needs. Suppose we are analyzing SUV owners, but our dataset also contains data on sedan owners. That information is irrelevant to our needs and would only skew our results. In addition, we should consider removing things like hashtags, URLs, emojis, and HTML tags, unless they are a necessary part of our analysis.

2. Deduplicate your data

If we collect data from multiple sources or departments, use scraped data for analysis, or have received multiple survey or client responses, we will often end up with duplicate data. Duplicate records slow down analysis and may require more storage. More importantly, if a machine learning model is trained on a dataset with duplicate records, the model will likely give more weight to the duplicates, depending on how many times they occur. They therefore need to be removed to obtain well-balanced results. Even simple data cleaning tools can help with deduplication, because duplicate records are easy for automated tools to recognize.

3. Fix structural errors

Structural errors include misspellings, inconsistent naming conventions, improper capitalization, incorrect word use, and so on. These can affect the analysis because, while they may be obvious to humans, most machine learning applications would not recognize the mistakes, and our analyses would be skewed.
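Steps 2 and 3 can be sketched outside Excel as well. The following is a minimal Python sketch, not a definitive implementation: the sample rows and the helper names `normalize_text` and `deduplicate` are hypothetical, and the normalization only roughly mirrors what Excel's TRIM and PROPER functions do. It fixes spacing and capitalization first, so that records that differ only in formatting are recognized as duplicates.

```python
def normalize_text(value: str) -> str:
    """Strip outer spaces, collapse repeated inner spaces, and title-case.

    Roughly comparable to applying TRIM and then PROPER in Excel.
    """
    return " ".join(value.split()).title()


def deduplicate(rows):
    """Return normalized rows, keeping only the first occurrence of each."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(normalize_text(cell) for cell in row)
        if key not in seen:
            seen.add(key)
            unique.append(key)
    return unique


# Hypothetical sample data: the first two rows differ only in formatting.
rows = [
    ("alice  smith", "new york"),
    ("Alice Smith", "New York"),
    ("BOB JONES", "chicago"),
]

cleaned = deduplicate(rows)
for row in cleaned:
    print(row)
```

Normalizing before comparing is a design choice: an exact comparison on the raw text would treat "alice  smith" and "Alice Smith" as two different people.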

4. Dealing with the missing data

In this step, we scan our data, or run it through a cleaning process, to locate missing cells and blank spaces in the text. Missing values may result from incomplete data or from human error. We then need to decide whether everything connected to the missing data (an entire column or row, a whole survey, etc.) should be discarded completely, or whether individual cells should be entered manually. The best way to deal with missing data depends on the analysis we want to do and on how we plan to preprocess our data. Sometimes we can restructure our data so that missing values do not affect our analysis.

5. Filter out data outliers

Outliers are data points that fall far outside the norm and may skew our analysis too far in a certain direction. For example, if we are averaging a class's test scores and one student refuses to answer any of the questions, their 0% would have a big impact on the overall average. In this case, we should consider deleting the data point altogether, which may give a result that is closer to the typical score of the class. However, just because a number is much smaller or larger than the others does not mean that the final analysis will be inaccurate. The existence of an outlier does not, by itself, mean that it should be excluded.

6. Validate our data

Data validation is the final data cleaning step. It is used to authenticate our data and confirm that it is high quality, consistent, and properly formatted for downstream processes.
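The handling of missing values and outliers described in steps 4 and 5 can be illustrated with a short Python sketch. The scores below are hypothetical, `None` stands in for a blank cell, and the 1.5 × IQR rule used to flag outliers is an assumption chosen for illustration; the text above does not prescribe a particular rule.

```python
from statistics import mean, quantiles

# Hypothetical test scores; None represents a blank (missing) cell.
scores = [78, 85, None, 92, 0, 88, 81]

# Step 4: locate missing cells, then set them aside for this analysis.
missing_positions = [i for i, s in enumerate(scores) if s is None]
present = [s for s in scores if s is not None]


def iqr_outliers(values):
    """Flag values more than 1.5 * IQR below Q1 or above Q3 (assumed rule)."""
    q1, _, q3 = quantiles(values, n=4)
    spread = q3 - q1
    low, high = q1 - 1.5 * spread, q3 + 1.5 * spread
    return [v for v in values if v < low or v > high]


# Step 5: flag outliers and compare the average with and without them.
outliers = iqr_outliers(present)
mean_with = mean(present)
mean_without = mean([v for v in present if v not in outliers])

print("Missing at positions:", missing_positions)
print("Flagged outliers:", outliers)
print("Average with outliers:", round(mean_with, 1))
print("Average without outliers:", round(mean_without, 1))
```

With this sample, the single 0 is flagged and pulls the average down noticeably. Whether to drop it remains a judgment call, as noted above: the code only flags candidates and does not decide for us.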

To validate, we check that our data is regularly structured and sufficiently clean for our needs. Cross-check corresponding data points and make sure everything is present and accurate. Machine learning and AI tools can be used to verify that our data is valid and ready to be put to use. Once we have gone through the data cleaning steps, we can use data wrangling techniques and tools to help automate the process.

What are the Data Cleaning Tips?

Create the right process and use it consistently

Set up a data cleaning process that is right for our data, our needs, and the tools we use for analysis. This is an iterative process, so once we have our steps and techniques in place, we need to follow them consistently for all subsequent data and analyses. Although data cleaning may be tedious, it is vital to our downstream processes. If we do not start with clean data, we will regret it later when our analysis produces "garbage results."

Make use of tools

There are a number of helpful data cleaning tools, ranging from free and basic to advanced and machine learning augmented. Depending on our dataset and aims, we should do some research to find out which data cleaning tools are best for us. If we know how to code, we can build models for our specific needs, but there are great tools for non-coders as well. Look for tools with an efficient UI, so that we can preview the effect of our filters and quickly test them on different data samples.
Pay attention to errors and track where dirty data comes from

Track and annotate common errors and trends in our data, so that we know which cleaning techniques to use on data from each source.

What are the advantages of using Data cleaning in Microsoft Excel?

The advantages associated with data cleaning in Microsoft Excel are as follows:

1) Advancing Accuracy: Accuracy is at the core of data cleaning, because inaccurate data can taint analyses and mislead decision-makers. With a systematic approach to data cleaning in Microsoft Excel, users can pinpoint and correct errors, creating a trustworthy foundation. This attention to detail ensures that the insights derived from the dataset are reliable and serve as a dependable basis for informed decision-making.
2) Ensuring Consistency: Datasets often arrive with information in diverse formats, which complicates analysis. Data cleaning standardizes the data formats across the entire dataset. This consistency is not merely cosmetic: it simplifies the analysis workflow and lets users rely on uniform data structures. The result is a more cohesive dataset that supports more accurate and meaningful analyses.
3) Completing the Picture: A complete dataset is essential for a comprehensive understanding of the underlying information. Data cleaning addresses missing data points, whether through imputation techniques or other methods. By filling the gaps, data cleaning contributes to a more robust dataset, free from the biases introduced by incomplete data. This completeness is instrumental in producing thorough and reliable analyses.
4) Efficiency Unleashed: Beyond its impact on data quality, data cleaning in Microsoft Excel improves efficiency. Streamlined data processing lets analysts spend more time on the actual analysis rather than grappling with errors and inconsistencies. This efficiency not only speeds up the analysis but also reduces the likelihood of errors, leading to more reliable and timely results.
5) Elevating Data Quality: Quality data is the bedrock of meaningful insights. Duplicates, outliers, and irrelevant information can compromise the integrity of a dataset. Data cleaning removes these elements and preserves the relevance and meaning of the information. A commitment to high-quality data is not just a best practice; it is essential for producing accurate and valuable insights.
6) Compatibility Across Platforms: In today's interconnected data landscape, compatibility is key. Cleaned data is more likely to integrate seamlessly with other systems and tools. This makes the data easier to share and increases its overall usefulness. The cleaned dataset becomes a versatile asset that can be used across different applications without compatibility problems.
7) Visualizing Insights: Effective data visualization depends on clean, well-organized data. Whether in charts, graphs, or dashboards, clean data improves the clarity and impact of visualizations, making them a powerful tool for communication and decision-making.
8) Mitigating Risks: Reducing the risks associated with flawed or incomplete data is a fundamental advantage of data cleaning. Decision-makers can confidently base their choices on accurate and complete information, minimizing the potential for adverse consequences. Where decisions carry significant weight, this risk mitigation is a strategic necessity.
9) Compliance Assurance: Meeting data quality standards and regulatory requirements is non-negotiable in many industries. Data cleaning in Microsoft Excel helps to ensure that the data aligns with these standards. Compliance is not just a checkbox; it is a proactive stance towards data integrity that safeguards against legal or regulatory pitfalls.

What are the various disadvantages associated with the use of Data cleaning in Microsoft Excel?

The disadvantages associated with data cleaning in Microsoft Excel are as follows:

1) Prone to Human Error:

2) Time-Consuming for Large Datasets:

3) Complexity and Skill Dependency:

4) Limited Automation:

5) Inconsistencies and Oversight:

6) Inadequate for Very Large Datasets:

7) Version Control Challenges:

8) Difficulty in Tracking Changes:

9) Dependency on Formula Accuracy:

10) Scalability Issues:
