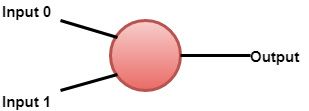

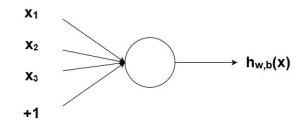

Artificial Neural Network in TensorFlow

Neural networks, or artificial neural networks (ANNs), are modeled on the human brain. The human brain can think about and analyze any task in a given situation. But how can a machine think like that? For this purpose, an artificial brain known as a neural network was designed. A neural network is made up of many perceptrons. A perceptron is a single-layer neural network. It is a binary classifier and is part of supervised learning. The perceptron is a simple model of the biological neuron used in an artificial neural network. The artificial neuron has inputs and an output.

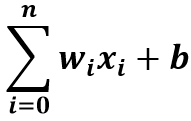

Mathematical representation of the perceptron model:

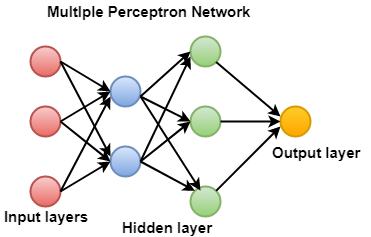

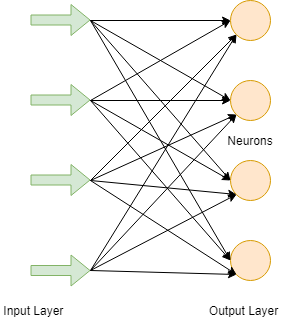
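The following is a minimal Python sketch of the perceptron described above. It assumes a step (threshold) activation and the classic perceptron learning rule. The figures do not say how the weights are learned, so the `train` helper and its `lr`/`epochs` parameters are illustrative assumptions. The sketch learns logical AND, which is a linearly separable task:

```python
def step(z):
    """Threshold activation: output 1 when z >= 0, otherwise 0."""
    return 1 if z >= 0 else 0

def predict(weights, bias, x):
    # y = step(w1*x1 + w2*x2 + ... + b)
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return step(z)

def train(samples, labels, lr=1.0, epochs=20):
    """Classic perceptron learning rule (an assumed training method)."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            error = target - predict(weights, bias, x)   # -1, 0, or +1
            # Move each weight in proportion to its input and the error.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y_and = [0, 0, 0, 1]          # logical AND is linearly separable
w, b = train(X, y_and)
print([predict(w, b, x) for x in X])   # [0, 0, 0, 1]
```

A single perceptron is a linear binary classifier, so the same loop never settles on a task like XOR. This is one motivation for the multilayer networks described later.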

Just as the human brain has neurons for passing information, a neural network has nodes that perform the same task. Nodes are mathematical functions. A neural network is based on the structure and function of biological neural networks. A neural network changes, or learns, based on its inputs and outputs: the information flowing through the system affects the structure of the network as it learns and improves. A neural network is also defined as "a computing system made of several simple, highly interconnected processing elements, which process information by their dynamic state response to external inputs." A neural network can be made of multiple perceptrons arranged in three layers (input, hidden, and output):

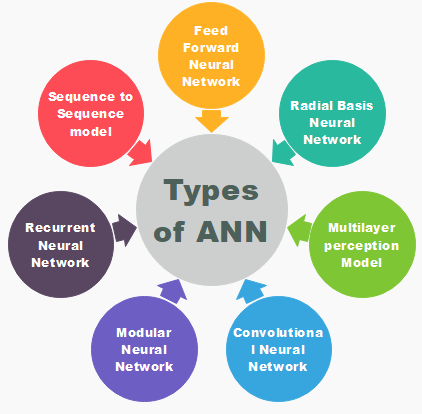

Types of Artificial Neural Network

Neural networks work in the same way as the human nervous system. There are several types of neural network. The implementation of each type is based on the set of parameters and mathematical operations required to determine the output.

Feedforward Neural Network (Artificial Neuron)

An FNN is the purest form of ANN, in which input and data travel in only one direction. Because data flows only forward, it is known as a feedforward neural network. Data enters through the input nodes and exits through the output nodes. The nodes are not connected in cycles. An FNN does not need a hidden layer, and it does not need multiple layers; it may have just a single layer.
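To illustrate the one-directional flow, here is a small pure-Python forward pass. The layer sizes, the weights, and the sigmoid activation are illustrative assumptions, not values from this tutorial. In TensorFlow the same structure would normally be built from dense layers.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases):
    """One layer: each node takes the weighted sum of all inputs plus its bias, then applies sigmoid."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def feedforward(x, layers):
    """Send x through the layers strictly in order; no output is ever fed back."""
    for weights, biases in layers:
        x = dense(x, weights, biases)
    return x

# Hypothetical 2-3-1 network with arbitrary (untrained) weights.
layers = [
    ([[0.5, -0.2], [0.3, 0.8], [-0.7, 0.1]], [0.0, 0.1, -0.1]),   # hidden layer: 3 nodes
    ([[1.0, -1.0, 0.5]], [0.2]),                                   # output layer: 1 node
]
print(feedforward([1.0, 2.0], layers))

# A single-layer FNN (no hidden layer) is just a one-element list of layers.
print(feedforward([1.0, 2.0], [([[0.5, -0.2]], [0.0])]))
```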

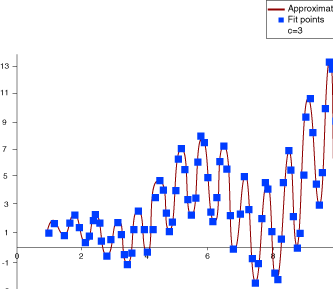

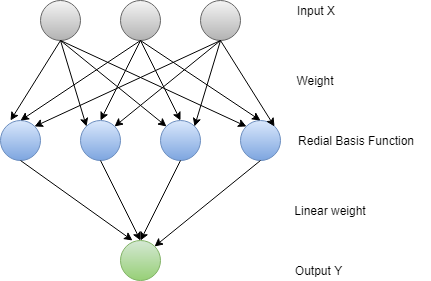

An FNN's forward-propagating wave is produced by a classifying activation function. In this simple single-layer form, an FNN only propagates forward and does not use backpropagation, unlike most of the other network types described below. In an FNN, the sum of the products of the inputs and weights is calculated and then fed to the output. FNNs are used in technologies such as face recognition and computer vision.

Radial Basis Function Neural Network

An RBFNN computes the distance of a point from a centre and applies a smooth function of that distance. An RBF neural network has two layers. In the inner layer, the features are combined with the radial basis function. The outputs of these functions are then used to compute the network's output. Distance measures other than Euclidean distance can also be used.

Radial Basis Function

Radial function:

$$\Phi(r) = \exp\left(-\frac{r^2}{2\sigma^2}\right), \quad \sigma > 0$$

This neural network is used in power restoration systems. Power systems today have grown in size and complexity, and both factors increase the risk of major power outages. After a blackout, power needs to be restored as quickly and reliably as possible.
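Here is a minimal sketch of an RBF network that uses the Gaussian radial function above. The hidden layer applies Φ to the Euclidean distance between the input and each centre, and the output layer takes a weighted sum. The centres, output weights, and σ = 0.5 are hand-picked, hypothetical values. They are chosen so that the network responds strongly to (0, 1) and (1, 0) and weakly to (0, 0) and (1, 1).

```python
import math

def gaussian_rbf(r, sigma=1.0):
    """Phi(r) = exp(-r^2 / (2 sigma^2)), with sigma > 0."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    return math.exp(-(r ** 2) / (2 * sigma ** 2))

def euclidean(a, b):
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

def rbf_network(x, centres, weights, sigma=1.0, distance=euclidean):
    """Hidden layer: one RBF unit per centre. Output layer: weighted sum of unit activations."""
    activations = [gaussian_rbf(distance(x, c), sigma) for c in centres]
    return sum(w * a for w, a in zip(weights, activations))

# Hypothetical centres and weights chosen by hand.
centres = [(0.0, 1.0), (1.0, 0.0)]
weights = [1.0, 1.0]
for point in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(point, round(rbf_network(point, centres, weights, sigma=0.5), 3))
```

Passing a different `distance` function, for example Manhattan distance, swaps in one of the other measures mentioned above.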

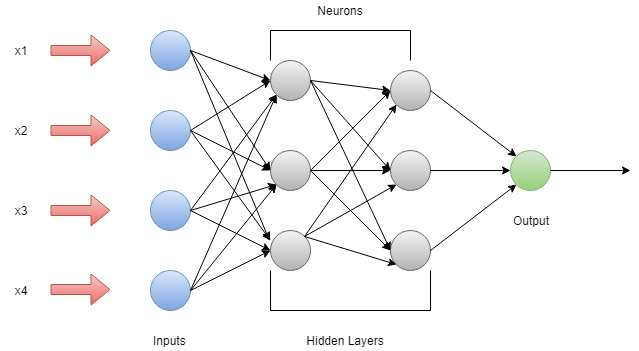

Multilayer Perceptron

A multilayer perceptron has three or more layers. It is used to classify data that cannot be separated linearly. It is a fully connected network, which means that every node in one layer is connected to every node in the next layer. A multilayer perceptron uses a nonlinear activation function. Its nodes, from the input layer to the output layer, are connected as a directed graph. It is a deep learning method and uses backpropagation to train the network. It is extensively applied in speech recognition and machine translation technologies.
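XOR is a standard example of data that is not linearly separable. No single perceptron can output 1 for (0, 1) and (1, 0) but 0 for (0, 0) and (1, 1). The sketch below shows how one hidden layer solves this. The weights are picked by hand for clarity; a trained multilayer perceptron would learn them with backpropagation. The step function stands in for the nonlinear activation.

```python
def step(z):
    return 1 if z >= 0 else 0

def xor_mlp(x1, x2):
    # Hidden layer: two units with hand-picked (not learned) weights.
    h_or = step(x1 + x2 - 0.5)    # fires if at least one input is 1
    h_and = step(x1 + x2 - 1.5)   # fires only if both inputs are 1
    # Output layer: "OR but not AND" is exactly XOR.
    return step(h_or - h_and - 0.5)

print([xor_mlp(a, b) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]])  # [0, 1, 1, 0]
```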

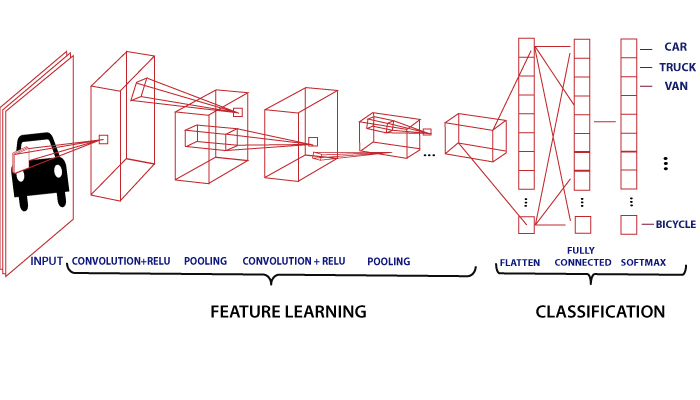

Convolutional Neural Network

A convolutional neural network (CNN) plays a vital role in image classification and image recognition; it is the main category of network for those tasks. Face recognition and object detection are some of the areas where CNNs are widely used. A CNN is similar to an FNN: its neurons have learnable weights and biases. A CNN takes an image as input and classifies it under a certain category, such as dog, cat, lion, or tiger. A computer sees an image as an array of pixels whose size depends on the resolution of the picture. Based on the image resolution, it sees h * w * d, where h = height, w = width, and d = depth (the number of channels). For example, a 6 * 6 RGB image is a 6 * 6 * 3 array, and a 4 * 4 grayscale image is a 4 * 4 * 1 array. In a CNN, each input image passes through a sequence of convolution layers with filters (also known as kernels), pooling layers, and fully connected layers. Finally, a softmax function is applied to classify the object with probability values between 0 and 1.
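Below is a minimal pure-Python sketch of the CNN pipeline described above: convolution, pooling, a fully connected layer, then softmax, on a single-channel image. The 6 x 6 image, the 3 x 3 edge filter, the ReLU activation, and the fully connected weights are all hypothetical. A real CNN learns its filter and layer weights during training. Like most deep learning libraries, `conv2d` here actually computes cross-correlation, meaning the kernel is not flipped.

```python
import math

def conv2d(image, kernel):
    """'Valid' 2-D convolution (cross-correlation) on one channel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu(fmap):
    return [[max(0, v) for v in row] for row in fmap]

def max_pool(fmap, size=2):
    """Non-overlapping max pooling; rows/columns that don't fill a window are dropped."""
    return [[max(fmap[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]

def softmax(scores):
    m = max(scores)                      # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical 6 x 6 grayscale image (depth 1) containing a vertical edge.
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
vertical_edge = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]      # hand-made 3 x 3 filter

features = max_pool(relu(conv2d(image, vertical_edge)))  # 6x6 -> 4x4 -> 2x2
flat = [v for row in features for v in row]

# Hypothetical fully connected layer mapping 4 features to 2 class scores.
fc_weights = [[0.5, 0.5, 0.5, 0.5], [-0.5, -0.5, -0.5, -0.5]]
scores = [sum(w * f for w, f in zip(row, flat)) for row in fc_weights]
print(softmax(scores))   # class probabilities between 0 and 1 that sum to 1
```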

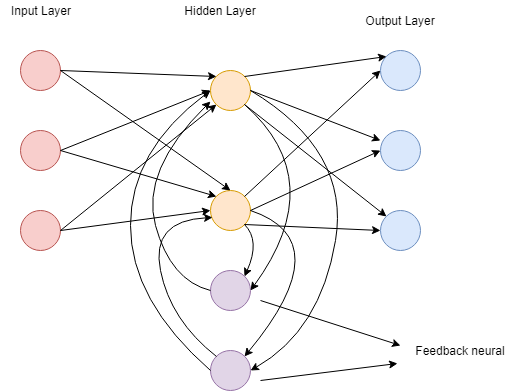

Recurrent Neural Network

A recurrent neural network (RNN) is based on prediction. In this neural network, the output of a particular layer is saved and fed back to the input, which helps predict the outcome of the layer. In an RNN, the first layer is formed in the same way as an FNN's layer, and the recurrent process begins in the subsequent layers. In other networks, inputs and outputs are independent of each other. Some tasks, however, require the output to depend on earlier inputs. Predicting the next word of a sentence, for example, depends on the previous words. The primary and most important feature of an RNN is its hidden state, which remembers information about a sequence.

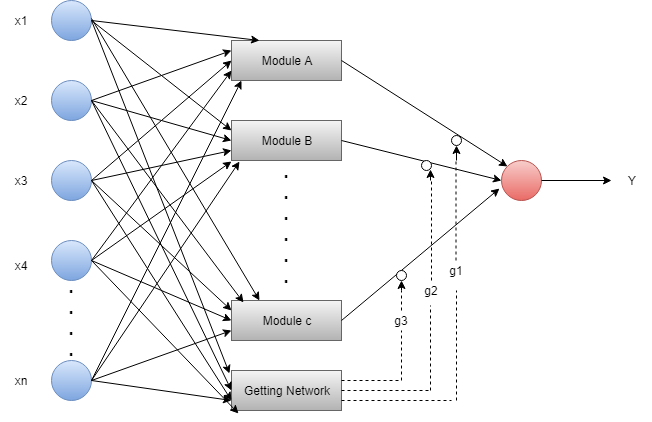
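To make the hidden state concrete, here is a scalar RNN sketch that applies the common update h_t = tanh(w_x * x_t + w_h * h_{t-1} + b). The tanh activation, the scalar (rather than vector) state, and the parameter values are simplifying assumptions. The same three parameters are reused at every time step.

```python
import math

def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Scalar RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b).

    The same parameters (w_x, w_h, b) are shared across all time steps.
    """
    h = h0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)   # mix the new input with the remembered state
        states.append(h)
    return states

# Hypothetical parameters; a trained RNN would learn them.
seq = [1.0, 0.0, 0.0, 0.0]
print(rnn_forward(seq, w_x=1.0, w_h=0.5, b=0.0))   # the first input echoes as a decaying trace
print(rnn_forward(seq, w_x=1.0, w_h=0.0, b=0.0))   # no recurrence: the input is forgotten
```

With `w_h=0.5`, the first input persists in later states, each weaker than the last. Setting `w_h=0.0` removes the recurrence, so every state after the first is zero.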

An RNN has a memory that stores the results of previous calculations. It uses the same parameters for each input, performing the same task at every step of the sequence to produce the output. Because the parameters are shared in this way, an RNN has lower parameter complexity than other neural networks.

Modular Neural Network

In a modular neural network (MNN), several different networks function independently. The task is divided into subtasks, which are performed by several systems. During computation, the networks do not communicate directly with each other; each works independently toward achieving the output. Combined networks like this are more powerful than flat, unrestricted networks. An intermediary takes the output of each system and processes these outputs to produce the final output.
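The division of labor can be sketched as follows. Everything here is hypothetical: each module is a single sigmoid neuron with its own private weights, and the four-feature input is split evenly between the two modules. The intermediary is a fixed weighted average of their outputs.

```python
import math

def make_module(weights, bias):
    """Build a hypothetical one-neuron sub-network with its own private parameters."""
    def module(x):
        z = sum(w * xi for w, xi in zip(weights, x)) + bias
        return 1.0 / (1.0 + math.exp(-z))   # sigmoid output in (0, 1)
    return module

# Hypothetical split of a 4-feature task into two sub-tasks.
module_a = make_module([0.8, -0.4], 0.1)   # sees only features 0 and 1
module_b = make_module([-0.3, 0.9], 0.0)   # sees only features 2 and 3

def intermediary(outputs, mix=(0.5, 0.5)):
    """Combine the independent module outputs into the final output."""
    return sum(m * o for m, o in zip(mix, outputs))

def modular_network(x):
    # The modules never exchange data; each receives only its own slice.
    outputs = [module_a(x[0:2]), module_b(x[2:4])]
    return intermediary(outputs)

print(modular_network([1.0, 0.5, 0.2, 0.7]))
```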

Sequence to Sequence Network

A sequence-to-sequence network consists of two recurrent neural networks. The encoder processes the input, and the decoder processes the output. The encoder and decoder can use either the same or different parameters. Sequence-to-sequence models are applied in chatbots, machine translation, and question answering systems.

Components of an Artificial Neural Network

Neurons

Artificial neurons are similar to biological neurons. An artificial neuron is essentially an activation function. Artificial neurons, or activation functions, have a "switch on" characteristic when they perform a classification task: when the input is higher than a specific value, the output changes state, for example from 0 to 1 or from -1 to 1. The sigmoid function is a commonly used activation function in artificial neural networks:

$$F(Z) = \frac{1}{1 + e^{-Z}}$$

Nodes

Biological neurons are connected in hierarchical networks, with the output of some neurons being the input to others. These networks are represented as connected layers of nodes. Each node takes multiple weighted inputs, applies the neuron (activation function) to the sum of these inputs, and generates an output.
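Here is a short plain-Python sketch of the sigmoid activation and of a single node. The `node` helper, its optional bias term, and the example weights are hypothetical. It computes the weighted sum of the inputs plus a bias and passes the result through F(Z).

```python
import math

def sigmoid(z):
    """F(Z) = 1 / (1 + exp(-Z)); squashes any real Z into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def node(inputs, weights, bias=0.0):
    """A node: weighted sum of its inputs (plus a bias) passed through the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

print(sigmoid(0.0))    # 0.5, the midpoint of the "switch"
print(sigmoid(6.0))    # close to 1: switched on
print(sigmoid(-6.0))   # close to 0: switched off
print(node([1.0, 0.5], [2.0, -1.0], bias=-1.0))
```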

Bias

In a neural network, we predict the output (y) based on the given input (x). We create a model, for example y = mx + c, which helps us predict the output. When we train the model, it finds appropriate values of the constants m and c by itself. The constant c is the bias. Without it, the line would be forced to pass through the origin. The bias lets the model shift its output so that it fits the given data best, which gives the model the freedom to perform best.

Algorithm

Algorithms are required in a neural network. Biological neurons can understand and work by themselves, but how can an artificial neuron work in the same way? For this, we need to train our artificial neural network. Many algorithms are used for this purpose, and each works differently. The following five algorithms are used to train an ANN:

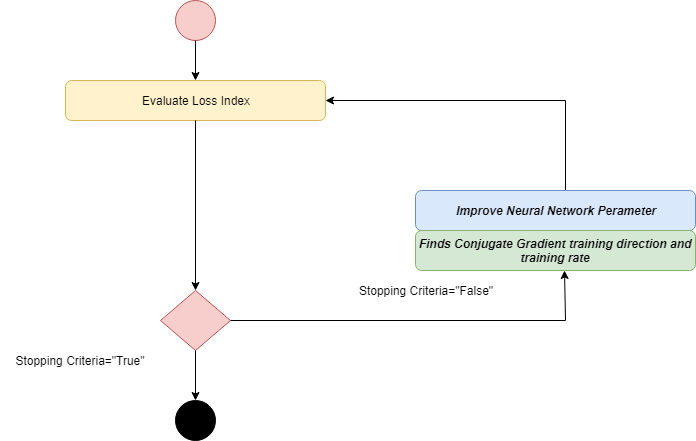

Gradient Descent

The gradient descent (GD) algorithm is also known as the steepest descent algorithm. It is the most straightforward training algorithm and requires information only from the gradient vector, so it is a first-order method. For simplicity, we denote $f(w^{(i)}) = f^{(i)}$ and $\nabla f(w^{(i)}) = g^{(i)}$. The method starts from $w^{(0)}$ and moves from $w^{(i)}$ to $w^{(i+1)}$ in the training direction $d^{(i)} = -g^{(i)}$ until a stopping criterion is satisfied. The gradient descent method therefore iterates as follows, where $\eta^{(i)}$ is the training rate:

$$w^{(i+1)} = w^{(i)} - \eta^{(i)} g^{(i)}, \quad i = 0, 1, \ldots$$

Newton's Method

Newton's method is a second-order algorithm. It uses the Hessian matrix $H$. Its main task is to find better training directions by using the second derivatives of the loss function. Newton's method iterates as follows:

$$w^{(i+1)} = w^{(i)} - \left(H^{(i)}\right)^{-1} g^{(i)}, \quad i = 0, 1, \ldots$$

Here, $\left(H^{(i)}\right)^{-1} g^{(i)}$ is known as Newton's step. If the Hessian is not positive definite, this change in the parameters may move toward a maximum rather than a minimum. The diagram below shows the training of a neural network with Newton's method. The parameters are improved by obtaining the training direction and a suitable training rate.

Conjugate Gradient

Conjugate gradient (CG) sits between gradient descent and Newton's method. It avoids evaluating, storing, and inverting the Hessian matrix, all of which Newton's method requires. In the CG algorithm, the search is done along conjugate directions, which generally gives faster convergence than following the gradient descent direction. These training directions are conjugate with respect to the Hessian matrix. The parameters are improved by computing the conjugate training direction and then a suitable training rate in that direction.
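The three update rules above can be compared on a deliberately tiny problem. This sketch assumes a mean-squared-error loss for the one-input model y = mx + c from the Bias section, on hand-made toy data. The training rate `eta = 0.1`, the iteration counts, and the helper names are illustrative choices. Because this loss is quadratic, the conjugate gradient shown is the linear variant: it uses an exact line search and the Fletcher-Reeves coefficient, a special case of the general method.

```python
# Toy data lying exactly on y = 2x + 1, so the best fit is m = 2 (weight), c = 1 (bias).
XS = [0.0, 1.0, 2.0, 3.0]
YS = [1.0, 3.0, 5.0, 7.0]
N = len(XS)

def dot(a, b):
    return sum(p * q for p, q in zip(a, b))

def gradient(w):
    """g = gradient of the mean squared error L(m, c) = mean((m*x + c - y)^2)."""
    m, c = w
    r = [m * x + c - y for x, y in zip(XS, YS)]           # residuals
    return [2.0 / N * dot(r, XS), 2.0 / N * sum(r)]

def hessian():
    """H is constant here because the loss is quadratic in (m, c)."""
    sx, sxx = sum(XS), dot(XS, XS)
    return [[2.0 / N * sxx, 2.0 / N * sx], [2.0 / N * sx, 2.0]]

def gradient_descent(w, eta=0.1, iters=1000):
    for _ in range(iters):
        g = gradient(w)
        w = [wi - eta * gi for wi, gi in zip(w, g)]       # w(i+1) = w(i) - eta * g(i)
    return w

def newton(w, iters=1):
    for _ in range(iters):
        (h00, h01), (h10, h11) = hessian()
        g = gradient(w)
        det = h00 * h11 - h01 * h10
        step = [(h11 * g[0] - h01 * g[1]) / det,          # Newton's step H^-1 g,
                (h00 * g[1] - h10 * g[0]) / det]          # via the 2x2 inverse
        w = [wi - si for wi, si in zip(w, step)]
    return w

def conjugate_gradient(w, iters=2):
    """Linear CG: on a quadratic loss in 2 parameters it needs at most 2 steps."""
    h = hessian()
    g = gradient(w)
    d = [-gi for gi in g]                                 # first direction: steepest descent
    for _ in range(iters):
        gg = dot(g, g)
        if gg < 1e-24:                                    # already at the minimum
            break
        alpha = gg / dot(d, [dot(row, d) for row in h])   # exact line search along d
        w = [wi + alpha * di for wi, di in zip(w, d)]
        g_new = gradient(w)
        beta = dot(g_new, g_new) / gg                     # Fletcher-Reeves coefficient
        d = [-gn + beta * di for gn, di in zip(g_new, d)]
        g = g_new
    return w

start = [0.0, 0.0]
print(gradient_descent(start), newton(start), conjugate_gradient(start))
```

All three should return values very close to m = 2, c = 1. Newton's method gets there in a single step because this loss is exactly quadratic, and CG gets there in at most two. Gradient descent needs many small steps.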

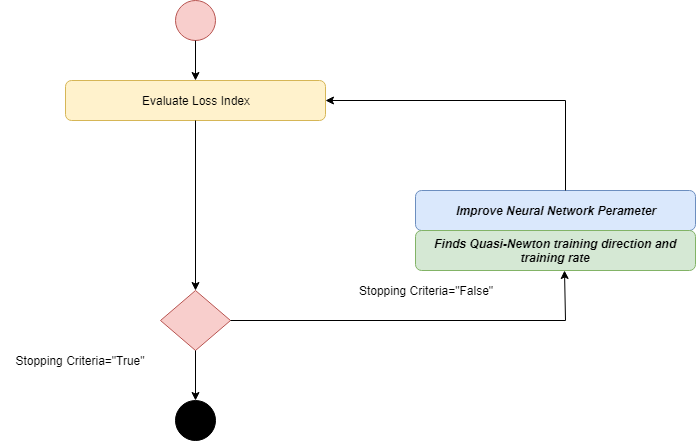

Quasi-Newton Method

Newton's method is computationally expensive to apply, because evaluating the Hessian matrix requires many operations. The quasi-Newton method was developed to resolve this drawback. It is also known as the variable metric method. At each iteration, instead of calculating the Hessian directly, it builds up an approximation to the inverse Hessian. This approximation is computed using only information on the first derivatives of the loss function. The parameters are improved by obtaining a quasi-Newton training direction and then finding a satisfactory training rate.
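Here is a compact sketch of a quasi-Newton method using the BFGS update, which is one common quasi-Newton formula; the text above does not name a specific one. The loss `f`, its gradient `grad`, and the backtracking line-search constants are hypothetical choices for illustration. Only first derivatives are evaluated; `h_inv` is the running approximation to the inverse Hessian.

```python
def f(w):
    # Hypothetical loss with its minimum at w = (3, -1).
    return (w[0] - 3.0) ** 2 + 2.0 * (w[1] + 1.0) ** 2

def grad(w):
    return [2.0 * (w[0] - 3.0), 4.0 * (w[1] + 1.0)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def mat_vec(m, v):
    return [dot(row, v) for row in m]

def bfgs(w, tol=1e-8, max_iter=100):
    n = len(w)
    h_inv = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]  # start from identity
    g = grad(w)
    for _ in range(max_iter):
        if dot(g, g) ** 0.5 < tol:
            break
        d = [-x for x in mat_vec(h_inv, g)]          # quasi-Newton training direction
        # Backtracking (Armijo) line search picks the training rate t.
        t, fw, gd = 1.0, f(w), dot(g, d)
        while t > 1e-12 and f([wi + t * di for wi, di in zip(w, d)]) > fw + 1e-4 * t * gd:
            t *= 0.5
        s = [t * di for di in d]
        w_new = [wi + si for wi, si in zip(w, s)]
        g_new = grad(w_new)
        y = [gn - go for gn, go in zip(g_new, g)]
        sy = dot(s, y)
        if sy > 1e-12:                                # skip the update if curvature info is unusable
            rho = 1.0 / sy
            hy = mat_vec(h_inv, y)
            yhy = dot(y, hy)
            # BFGS inverse-Hessian update: (I - rho s y^T) H (I - rho y s^T) + rho s s^T
            h_inv = [[h_inv[i][j]
                      - rho * (s[i] * hy[j] + hy[i] * s[j])
                      + (rho * rho * yhy + rho) * s[i] * s[j]
                      for j in range(n)] for i in range(n)]
        w, g = w_new, g_new
    return w

print(bfgs([0.0, 0.0]))   # should end up very close to [3.0, -1.0]
```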

Levenberg Marquardt

The Levenberg-Marquardt algorithm is also known as the damped least-squares method. It is designed to work specifically with loss functions that take the form of a sum of squared errors. It does not compute the exact Hessian matrix; instead, it works with the Jacobian matrix and the gradient vector. The first step is to compute the loss, the gradient, and the Hessian approximation. The damping parameter is then adjusted.

Advantages and Disadvantages of Artificial Neural Network

Advantages of ANN

Disadvantages of ANN
