Pipelining

Pipelining is a technique of decomposing a sequential process into sub-operations, with each sub-operation executed in a dedicated segment that operates concurrently with all other segments. The most important characteristic of a pipeline is that several computations can be in progress in distinct segments at the same time. The overlapping of computation is made possible by associating a register with each segment in the pipeline. The registers isolate the segments from one another so that each can operate on distinct data simultaneously.

The structure of a pipeline organization can be represented simply by an input register for each segment followed by a combinational circuit.

To get a better understanding of the pipeline organization, consider a combined multiplication and addition operation performed on a stream of numbers such as:

Ai * Bi + Ci    for i = 1, 2, 3, ..., 7

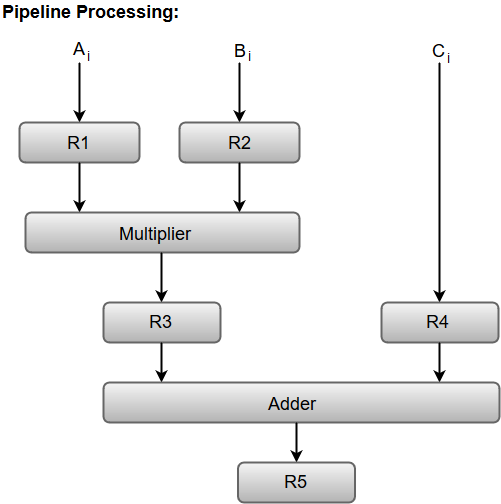

The operation to be performed on the numbers is decomposed into sub-operations, with each sub-operation implemented in a segment of the pipeline. The sub-operations performed in each segment are defined as:

| Register transfer | Operation |
|---|---|
| R1 ← Ai, R2 ← Bi | Input Ai and Bi |
| R3 ← R1 * R2, R4 ← Ci | Multiply, and input Ci |
| R5 ← R3 + R4 | Add Ci to product |

The block diagram represents the combined operation as well as the sub-operations performed in each segment of the pipeline.
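The register transfers above can be traced clock by clock with a small simulation. The following is a minimal sketch, not a hardware model: the function name `simulate_pipeline`, the use of `None` to mark a register that holds no valid data yet, and the sample input values are all assumptions made for illustration. It assumes every register loads on the same clock pulse, so each new value is computed from the old register contents before any register is updated.

```python
def simulate_pipeline(a, b, c):
    """Simulate the three-segment pipeline computing a[i] * b[i] + c[i].

    Returns (results, trace), where trace holds one tuple
    (clock, R1, R2, R3, R4, R5) per clock pulse.
    """
    n = len(a)
    # None marks a register that does not hold valid data yet (an assumption
    # of this sketch; real registers always hold some bit pattern).
    r1 = r2 = r3 = r4 = r5 = None
    results = []
    trace = []

    # n items flowing through 3 segments need n + 2 clock pulses in total.
    for clock in range(n + 2):
        # Compute every next value from the OLD register contents first,
        # because all registers load simultaneously on the clock pulse.
        new_r5 = r3 + r4 if r3 is not None else None       # segment 3: add
        new_r3 = r1 * r2 if r1 is not None else None       # segment 2: multiply
        new_r4 = c[clock - 1] if 1 <= clock <= n else None  # segment 2: input Ci
        new_r1 = a[clock] if clock < n else None            # segment 1: input Ai
        new_r2 = b[clock] if clock < n else None            # segment 1: input Bi

        r1, r2, r3, r4, r5 = new_r1, new_r2, new_r3, new_r4, new_r5
        trace.append((clock + 1, r1, r2, r3, r4, r5))
        if r5 is not None:
            results.append(r5)

    return results, trace


# Hypothetical sample data for i = 1..7.
A = [1, 2, 3, 4, 5, 6, 7]
B = [7, 6, 5, 4, 3, 2, 1]
C = [1, 1, 1, 1, 1, 1, 1]

results, trace = simulate_pipeline(A, B, C)
for row in trace:
    print("clock %d: R1=%s R2=%s R3=%s R4=%s R5=%s" % row)
print(results)  # [8, 13, 16, 17, 16, 13, 8]
```

In this sketch the first result reaches R5 on the third clock pulse, and one new result follows on every pulse after that, so the seven items finish in 3 + 7 - 1 = 9 pulses. From the third pulse through the seventh, all three segments hold valid data at once, each working on a different item.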

Registers R1 through R5 hold the data, and the combinational circuits operate on it within a particular segment. The output generated by the combinational circuit in a given segment is applied to the input register of the next segment. For instance, the block diagram shows that register R3 is one of the input registers for the combinational adder circuit.

In general, the pipeline organization is applicable to two areas of computer design: arithmetic pipelines and instruction pipelines. Both are discussed in later sections.
