Thread Synchronization in C++

In C++, thread synchronization refers to the techniques used to coordinate the work of multiple threads so that they execute correctly and in a controlled way. In a multi-threaded program, several threads of execution may run at the same time and access shared resources, which can cause problems such as race conditions, inconsistent data, and deadlocks. Thread synchronization involves restricting access to shared resources so that only one thread uses a resource at a time, or making threads wait until specific conditions are met before they continue. The goal is to maintain data integrity and avoid the conflicts that arise when several threads modify shared data at once.

Why Do We Need Thread Synchronization?

Thread synchronization is commonly needed in the following situations:

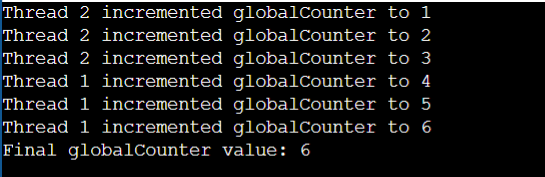

Example of Thread Synchronization

Output:

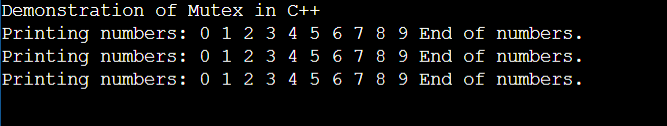

Explanation: This C++ code demonstrates how a mutex lets two threads update a shared counter safely. The incrementGlobalCounter function protects globalCounter with a mutex, so only one thread at a time can modify it. To simulate concurrent work, the main function creates two threads that both call the incrementing function. After both threads have finished, the program prints the final value of globalCounter. The example shows how mutex-based synchronization prevents race conditions and preserves data integrity in multi-threaded code, and it is one of the simplest forms of thread synchronization.

Techniques of Thread Synchronization in C++

C++ provides the following techniques for thread synchronization:

1. Thread Synchronization Using a Mutex (std::mutex):

In C++, a mutex is a synchronization primitive that manages access to resources shared between threads. To avoid data races and guarantee thread safety, it ensures that only one thread at a time can execute a critical section of code. Threads coordinate by acquiring and releasing the mutex, which prevents conflicting accesses and makes execution of the critical section orderly. By ruling out race conditions and allowing controlled concurrent access to shared data, mutexes make multi-threaded programs more reliable and predictable. Because the order in which threads execute is non-deterministic, race conditions can occur when several threads access and modify shared data at the same time. A mutex solves this by letting only one thread at a time enter the critical section, so no two threads access the protected resource simultaneously. The std::mutex class, declared in the <mutex> header, is the C++ Standard Library's basic type for mutex-based synchronization.
When a thread calls lock() on a mutex that is not currently locked, the call succeeds immediately and the thread becomes the owner. If another thread already holds the mutex, the calling thread blocks until the mutex is unlocked. This blocking behavior is what guarantees that only one thread at a time executes the critical section. Keep in mind that mutexes used improperly can cause problems such as deadlock and contention. A deadlock occurs when two or more threads each wait for another to release a resource, so none of them can make progress and the program stalls. Contention occurs when threads repeatedly compete for the same mutex, which can slow the program down because threads spend time waiting and the mutex is locked and unlocked frequently. To address these problems, modern C++ offers higher-level abstractions such as std::lock_guard and std::unique_lock, which simplify mutex management and reduce the likelihood of errors. These RAII (Resource Acquisition Is Initialization) classes lock the mutex when they are constructed (by default, in the case of std::unique_lock) and unlock it when they are destroyed, which guarantees that the mutex is released even when an exception is thrown.

Example:

Output:

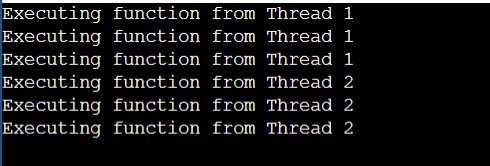

Explanation: This C++ code shows how to synchronize threads so that they access a shared resource correctly. The program first includes the input-output, thread, and mutex headers. A std::mutex named mtx is declared to control access to the shared resource. The printNumbers() function contains the critical section that several threads execute concurrently. It locks the mutex with mtx.lock(), prints the digits 0 through 9 separated by spaces, and then calls mtx.unlock() so that other threads can acquire the mutex. The program creates three threads (thread1, thread2, and thread3) that all run printNumbers() at the same time. Because the mutex lets only one thread into the critical section at a time, the output of different threads does not overlap. The join() calls make the main thread wait for each thread to finish before the program ends. In short, the code demonstrates the core idea of using a mutex to synchronize threads, prevent race conditions, and produce predictable, ordered results when shared resources are accessed concurrently. Note that if the code between lock() and unlock() throws an exception, unlock() is never called; the RAII wrappers described next avoid this problem.

2. Lock Guard (std::lock_guard) in C++:

std::lock_guard is a class template that provides a simple RAII (Resource Acquisition Is Initialization) wrapper around a mutex, making mutex-based thread synchronization easy and safe. It handles locking and unlocking automatically, which helps avoid common threading pitfalls such as forgetting to unlock a mutex or leaving it locked when an exception escapes a critical section. The std::lock_guard constructor takes a mutex as its argument and locks it. When the std::lock_guard object goes out of scope (for example, when the function returns), its destructor unlocks the mutex. This guarantees that the mutex is always released, even if an exception is thrown.

Example:

Output:

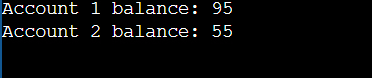

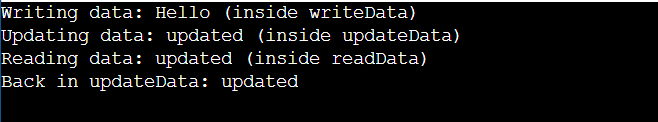

Explanation: This example shows how to use a mutex together with std::lock_guard to produce synchronized output in a multi-threaded program. The program includes the necessary headers and defines a mutex named outputMutex. This mutex prevents several threads from interleaving their output while they write to the standard output (std::cout). The printFunction() function is run by multiple threads. Inside a loop that runs ten times, it creates a std::lock_guard named lock. This lock automatically locks outputMutex, guaranteeing that only one thread at a time writes to std::cout, so the threads' output is not jumbled. The main() function starts two threads, thread1 and thread2, which both run printFunction(). Because the mutex serializes access to std::cout, the output of the two threads stays coherent. The join() calls ensure that the main program waits for both threads to finish before exiting.

3. Unique Lock (std::unique_lock):

In C++, std::unique_lock is a flexible RAII wrapper for managing a mutex. Like std::lock_guard, it provides safe, coordinated access to shared resources, but it offers more control. std::lock_guard always locks the mutex at construction and unlocks it at destruction, whereas std::unique_lock supports deferred locking (constructing it with std::defer_lock so that the mutex is not locked yet) and can be explicitly locked and unlocked several times within the same scope. A std::unique_lock can also be moved, which transfers ownership of the lock to another object or out of a function. In addition, std::unique_lock is the lock type that std::condition_variable requires for waiting, which enables more complex synchronization patterns such as waiting for a condition to be satisfied before proceeding. This makes it a good choice when fine-grained control over locking, unlocking, and waiting is needed. Note that std::unique_lock always represents exclusive ownership of a mutex; for shared (reader) ownership, the standard library provides std::shared_lock.
The main benefits of std::unique_lock are its greater flexibility and richer functionality. Unlike std::lock_guard, std::unique_lock supports deferred locking as well as explicit unlocking and relocking, giving more dynamic control over mutex access within a scope. This is especially useful in complex synchronization scenarios or when ownership of the lock must change during execution.

Example:

Explanation: This code demonstrates how to transfer money between two accounts concurrently from multiple threads while avoiding deadlock and synchronizing access to the shared data with std::unique_lock and std::lock. The Account struct represents a bank account with a balance and a mutex (m) that prevents unsynchronized access to the balance. The mutex guarantees that only one thread at a time accesses the balance, which prevents race conditions. The transfer() function moves money from one account to the other. It creates std::unique_lock objects with the std::defer_lock option, which associates each lock with its mutex without locking it. std::lock() then locks both mutexes together using a deadlock-avoidance algorithm. This prevents deadlock even when two threads try to lock the same pair of mutexes in opposite order. In the main() function, two threads (thread1 and thread2) are created to run transfer() concurrently, moving funds between the two accounts in opposite directions. The join() calls guarantee that the main program waits for both threads to finish before continuing. Finally, the program prints the balances of both accounts, showing the effect of the concurrent transfers. Together, the mutexes, std::unique_lock, and std::lock ensure that the transfers complete correctly and without race conditions.

4. Recursive Mutex (std::recursive_mutex):

std::recursive_mutex is a mutex type that the same thread may lock multiple times without deadlocking. It is a useful tool for some complicated synchronization problems. As with any mutex, proper synchronization is essential in multi-threaded programming to avoid race conditions and keep concurrent operations consistent and orderly.
The recursive mutex adds one notable feature: a thread that already owns the mutex can lock it again without deadlocking. With an ordinary std::mutex, a thread that tries to lock a mutex it already owns causes undefined behavior, which in practice is usually a deadlock. This makes std::recursive_mutex particularly helpful when a function that holds the lock calls other functions that lock the same mutex. Despite this flexibility, std::recursive_mutex still meets the basic synchronization requirement that only one thread can own the mutex at any given moment. It works by recording which thread owns it and counting how many times that thread has locked it; the owning thread must call unlock() the same number of times before another thread can acquire the mutex. Because recursive locking can hide design problems and may add overhead compared with an ordinary mutex, use this kind of mutex only when it is really needed. Overall, std::recursive_mutex is useful when functions running in the same thread need access to shared resources protected by a single mutex, enabling more flexible multi-threaded designs.

Example:

Explanation: In this code, a recursive mutex, specifically std::recursive_mutex, is used to synchronize access to shared resources in a multi-threaded context. The program defines a SharedResource class, which has a std::recursive_mutex named mtx that prevents concurrent access to the shared data member sharedData.

5. Read-Write Mutex (std::shared_mutex):

A read-write mutex, provided in C++ as std::shared_mutex, is a synchronization primitive that offers finer control over concurrent access to shared resources than an ordinary mutex. It lets several threads read a shared resource simultaneously while guaranteeing exclusive access for writes. This is especially helpful when reads are frequent and can safely happen at the same time, but writes need exclusive access. std::shared_mutex has two lock modes: shared and exclusive. In shared mode, acquired with lock_shared() and typically managed by std::shared_lock, any number of threads can hold the mutex at once, which suits readers. In exclusive mode, acquired with lock() and typically managed by std::unique_lock or std::lock_guard, only one thread can hold the mutex and no other thread can hold it in shared mode at the same time, which suits writers.

std::shared_mutex is useful for increasing concurrency and overall throughput when reads greatly outnumber writes. Letting many threads read at the same time reduces contention, while the exclusive lock still protects data integrity whenever a write is needed. std::shared_mutex is available only in C++17 and later, so using it requires a compiler and standard library that support C++17.

Example:

Output:

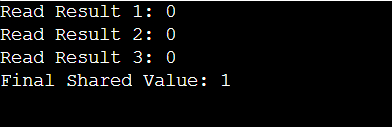

Explanation: This code shows how to use a mutex in a multi-threaded program to guarantee synchronized access to a shared resource, sharedValue. It demonstrates how threads can read and change this shared variable concurrently while synchronization prevents data races and inconsistent results. At the start of the program, sharedValue and sharedMutex, the mutex that protects it, are declared. Two functions are defined: readSharedValue and setSharedValue. The first acquires the lock, reads the shared value, simulates a delay with sleep, and stores the value in the readResult variable. The second acquires the lock, simulates a delay in the same way, and then assigns a new value to sharedValue. In main(), three threads (readThread1, readThread2, and readThread3) are started to read sharedValue concurrently, and one thread (setThread) is created to modify it. All threads are joined so that the program waits for each of them to finish before continuing. The code then prints the final value of sharedValue along with each thread's read result. The mutex ensures that no thread reads sharedValue while another thread is modifying it, which prevents data inconsistencies and race conditions.

6. Condition Variable (std::condition_variable):

std::condition_variable is a fundamental synchronization primitive in C++ that lets threads wait until a certain condition is satisfied before proceeding. It works together with a mutex, held through a std::unique_lock<std::mutex>, to coordinate threads. Whereas a mutex protects shared resources, a condition variable is used when a thread must wait for a specific condition to become true before it continues executing.
For example, in producer-consumer scenarios, where one thread produces data and another consumes it, std::condition_variable is essential for building this kind of synchronization pattern. It also avoids busy-waiting, in which a thread repeatedly checks a condition without giving up the CPU and wastes resources.

Explanation: This code uses a condition variable to let the main thread and a worker thread communicate and synchronize. The main thread prepares the data and signals the worker thread to start processing it. After receiving this signal, the worker thread processes the data and notifies the main thread that processing is finished. The main thread waits for this notification before printing the final processed data. Because the worker thread only processes data when it is ready and neither thread busy-waits, this approach uses CPU time efficiently. Using a mutex together with a condition variable gives the threads synchronized, coordinated interaction, which makes the multi-threaded program more robust.

7. Semaphore (std::counting_semaphore):

std::counting_semaphore is a synchronization primitive introduced in C++20. It maintains a count of permits, allowing up to a fixed number of threads to use a resource simultaneously. A thread calls acquire() to take a permit, blocking while the count is zero, and calls release() to return it. This is useful when access to a limited resource, such as a pool of worker threads or connections, must be capped to control resource usage and contention. std::binary_semaphore, an alias for std::counting_semaphore<1>, covers the special case of a single permit.

8. Atomic Operations (std::atomic):

Atomic operations provide a way to perform certain operations on shared variables atomically, without using locks.
Atomic operations are especially useful for simple operations such as incrementing counters.

Advantages of Thread Synchronization in C++
