fminsearch in MATLAB

Introduction

In numerical computing and optimization, MATLAB provides a range of tools for mathematical and engineering problems. One of them is fminsearch, a function that finds a minimum of a scalar-valued function of one or more variables, with no constraints on those variables. It is based on the Nelder-Mead simplex method and needs no derivatives, which makes it useful for functions that lack an analytical form, behave noisily, or have discontinuities. It is a popular choice when gradient information is unavailable or hard to obtain.

Syntax

The basic syntax is x = fminsearch(fun, x0), or x = fminsearch(fun, x0, options) when settings need to be changed. Here, fun is the objective function to be minimized and x0 is the initial guess for the minimum. The optional options argument specifies parameters such as the display level, termination tolerances, and the maximum number of iterations.
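The common calling forms can be sketched as follows. The quadratic objective and the starting point are hypothetical, chosen only because the minimum at (1, 2) is easy to check by hand.

```matlab
% Hypothetical objective: a two-variable quadratic with its minimum at (1, 2).
fun = @(x) (x(1) - 1)^2 + (x(2) - 2)^2;
x0  = [0, 0];                          % initial guess

% Form 1: return only the minimizer.
x = fminsearch(fun, x0);

% Form 2: pass options and request extra outputs.
options = optimset('Display', 'off');  % suppress printed output
[x, fval, exitflag] = fminsearch(fun, x0, options);
% x        : located minimizer
% fval     : objective value at x
% exitflag : 1 if the run converged, 0 if an iteration or evaluation limit was hit
```

Checking exitflag before using x is a cheap safeguard, since a run that stops on a limit still returns its best point so far.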

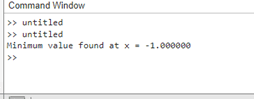

Working with fminsearch

The first step is to define the objective function to be minimized. This function must accept a vector input and return a scalar output. In MATLAB, an anonymous function is a convenient way to define the objective inline. For instance, the handle @(x) (x(1) - 1)^2 + (x(2) - 2)^2 defines a function of two variables whose minimum lies at the point (1, 2).
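As an illustration of an inline objective, the sketch below minimizes the Rosenbrock function, a standard test problem whose minimum is at (1, 1). The choice of function and starting point is an assumption made for this example and is not taken from the original article.

```matlab
% Rosenbrock function written as an anonymous function of a 2-element vector.
fun = @(x) 100 * (x(2) - x(1)^2)^2 + (1 - x(1))^2;

x0 = [-1.2, 1];                        % a commonly used starting point
[x, fval] = fminsearch(fun, x0);

% Report the result; x should land close to [1, 1] and fval close to 0.
fprintf('x = [%.4f, %.4f], fval = %.3g\n', x(1), x(2), fval);
```

The objective indexes into x, so fminsearch can pass the whole parameter vector as a single argument.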

The choice of initial guess strongly affects how the algorithm converges. When the objective function has multiple local minima, the algorithm may converge to a local minimum instead of the global minimum, so it is important to understand the nature of the specific optimization problem.

The Nelder-Mead Simplex Algorithm

Under the hood, fminsearch uses the Nelder-Mead simplex method, a heuristic optimization technique suited to problems where gradient information is inaccessible or unreliable. This direct search method iteratively updates a simplex, a geometric figure with n+1 vertices in an n-dimensional space, with the goal of reducing the objective function value at each step. The algorithm comprises several key steps:

- Initialization: Begin with an initial simplex in the parameter space, constructed around the initial guess provided by the user.
- Reflection: Calculate the reflection point by reflecting the worst vertex through the centroid of the remaining vertices. If the reflected point is better than the second-worst vertex but not better than the best, it replaces the worst vertex.
- Expansion: If the reflected point is better than the best current point, try expanding the simplex further in that direction.
- Contraction: If the reflected point is no better than the second-worst point, contract toward the centroid to explore the region between the centroid and the better of the reflected and worst points.
- Reduction: If all the above steps fail, shrink the simplex towards the best point.

By iteratively applying these steps, the algorithm approximates the minimum of the objective function to within a specified tolerance or stops after a set number of iterations. Because Nelder-Mead is a direct search technique that never evaluates the function's gradient, it can optimize complicated functions for which derivative data is not available.
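The steps of the Nelder-Mead method can be sketched in a short script. This is a minimal textbook-style illustration under stated assumptions (a hypothetical quadratic objective, an initial step of 0.5 per coordinate, and the usual coefficients 1, 2, 0.5, 0.5); it is not the source code of fminsearch, which differs in its initial simplex and stopping rules.

```matlab
% Minimal Nelder-Mead sketch (illustrative only; not fminsearch's own code).
f  = @(x) (x(1) - 1)^2 + (x(2) - 2)^2;    % hypothetical objective, minimum at (1, 2)
x0 = [0, 0];                               % initial guess (row vector)
n  = numel(x0);
alpha = 1; gamma = 2; rho = 0.5; sigma = 0.5;  % reflect, expand, contract, shrink

% Initialization: n+1 vertices (one per row), built by perturbing x0.
S = repmat(x0, n + 1, 1);
for i = 1:n
    S(i + 1, i) = S(i + 1, i) + 0.5;       % assumed step size of 0.5
end
fS = zeros(n + 1, 1);
for i = 1:n + 1
    fS(i) = f(S(i, :));
end

for iter = 1:500
    [fS, order] = sort(fS);                % best vertex first, worst last
    S = S(order, :);
    spread = max(max(abs(S(2:end, :) - repmat(S(1, :), n, 1))));
    if spread < 1e-8 && (fS(end) - fS(1)) < 1e-12
        break;                             % simplex is small and flat: stop
    end
    c  = mean(S(1:n, :), 1);               % centroid, excluding the worst vertex
    xr = c + alpha * (c - S(end, :));      % reflection
    fr = f(xr);
    if fr < fS(1)
        xe = c + gamma * (xr - c);         % expansion
        fe = f(xe);
        if fe < fr
            S(end, :) = xe; fS(end) = fe;
        else
            S(end, :) = xr; fS(end) = fr;
        end
    elseif fr < fS(n)
        S(end, :) = xr; fS(end) = fr;      % accept the reflected point
    else
        if fr < fS(end)
            xc = c + rho * (xr - c);       % outside contraction
        else
            xc = c + rho * (S(end, :) - c);% inside contraction
        end
        fc = f(xc);
        if fc < min(fr, fS(end))
            S(end, :) = xc; fS(end) = fc;
        else
            for i = 2:n + 1                % reduction: shrink toward the best vertex
                S(i, :) = S(1, :) + sigma * (S(i, :) - S(1, :));
                fS(i) = f(S(i, :));
            end
        end
    end
end
[fbest, ibest] = min(fS);
xbest = S(ibest, :);
fprintf('xbest = [%.6f, %.6f], fbest = %.3g\n', xbest(1), xbest(2), fbest);
```

Only function values are compared, never derivatives, which is why the same loop also works on objectives that are noisy or non-smooth.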
In short, the algorithm first builds a simplex, a multidimensional generalization of a triangle, and then iteratively reshapes and moves it to reduce the objective function.

- Function call: fun is the function handle that represents the objective function, x0 is the starting point for the optimization, and options is an optional argument that alters the optimization procedure.
- Objective function: fun must accept the vector x that fminsearch passes to it and return a scalar, the value of the objective function at that point. The function must be defined precisely for the optimization to succeed.

Customization and Options

fminsearch provides several options to tune the optimization process to specific requirements. Users can control the display of iterative information and set termination criteria, and these settings can significantly affect both the run time and the accuracy of the results. The key options, created with optimset, include:

- Display Options (Display): Adjust the level of iterative information printed, allowing users to monitor the optimization process closely.
- Tolerance (TolX, TolFun): Set the termination tolerances on the change in x and in the function value, which define the acceptable accuracy of the minimum.
- Max Iterations (MaxIter): Specify the maximum number of iterations allowed. MaxFunEvals limits the number of function evaluations in the same way.
- Step Size: fminsearch has no step-size option. It builds the initial simplex by perturbing each component of x0 by 5% (or by 0.00025 when the component is zero), so the scale of x0 sets the initial step.

By setting these options judiciously, users can balance computational cost against accuracy.
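A short sketch of setting options with optimset follows. The objective and the specific tolerance and limit values are assumptions picked for illustration, not recommended settings.

```matlab
% Hypothetical objective with its minimum at (1, 2).
fun = @(x) (x(1) - 1)^2 + (x(2) - 2)^2;
x0  = [0, 0];

options = optimset('Display',     'iter', ...  % print one line per iteration
                   'TolX',        1e-8,   ...  % tolerance on the change in x
                   'TolFun',      1e-8,   ...  % tolerance on the change in fun(x)
                   'MaxIter',     500,    ...  % iteration limit
                   'MaxFunEvals', 1000);       % function evaluation limit

[x, fval, exitflag, output] = fminsearch(fun, x0, options);

% The output structure reports how much work the run needed.
fprintf('iterations: %d, evaluations: %d\n', output.iterations, output.funcCount);
```

Switching Display to 'off' or 'final' keeps the same behaviour while reducing printed output once the run is known to be well behaved.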
Best Practices and Considerations

To use fminsearch effectively, consider the following best practices and factors that influence the optimization process:

- Initial Guess: A well-chosen initial guess improves the chance of converging to the global minimum rather than a local one. Experimenting with different initial guesses also gives insight into the behaviour of the objective function.
- Objective Function Characteristics: Understanding the objective function, such as whether it has multiple local minima or noisy behaviour, is vital for successful optimization.
- Tolerance and Iterations: Setting the termination tolerance and maximum iterations carefully balances computational resources against the desired accuracy.
- Display Information: Monitoring the optimization process through the display options helps in diagnosing problems and understanding the algorithm's behaviour.
- Global Optimization: For complex problems with multiple local minima, consider global optimization methods such as genetic algorithms or simulated annealing, which are designed to search more widely for the global minimum.

By following these practices, users can apply fminsearch efficiently and effectively to a wide range of optimization problems.
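The advice on initial guesses can be illustrated with a simple multi-start loop. The one-dimensional objective below is a hypothetical example with two local minima (near x = -1.30 and x = 1.13, the first being the global one), and the list of starting points is likewise an assumption.

```matlab
% Hypothetical 1-D objective with a global minimum near -1.30
% and a shallower local minimum near 1.13.
fun = @(x) x.^4 - 3 * x.^2 + x;

starts  = [-2, -1, 1, 2];               % assumed set of initial guesses
options = optimset('Display', 'off');

xs = zeros(size(starts));               % minimizer found from each start
fs = zeros(size(starts));               % objective value at each minimizer
for k = 1:numel(starts)
    [xs(k), fs(k)] = fminsearch(fun, starts(k), options);
end

% Keep the best of all runs.
[fbest, kbest] = min(fs);
xbest = xs(kbest);
fprintf('best x = %.4f, f = %.4f (start %.1f)\n', xbest, fbest, starts(kbest));
```

Runs that start on different sides of the central hump settle in different minima, so comparing fs across the starts shows directly how much the result depends on x0.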

Advanced Usage

For more difficult optimization problems or special cases, MATLAB offers alternatives such as fmincon for constrained optimization and other derivative-based algorithms. The fmincon function, part of the Optimization Toolbox, is designed for problems in which the objective function must be minimized subject to a set of equality or inequality constraints.
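As a rough sketch of the constrained case, the example below adds one linear inequality to a hypothetical quadratic objective. It assumes the Optimization Toolbox is installed; the objective, constraint, and starting point are invented for illustration.

```matlab
% Hypothetical objective; its unconstrained minimum is at (1, 2).
fun = @(x) (x(1) - 1)^2 + (x(2) - 2)^2;
x0  = [0, 0];

% Linear inequality constraint A*x' <= b, here x1 + x2 <= 2.
A = [1, 1];
b = 2;

options = optimoptions('fmincon', 'Display', 'off');

% Unused constraint slots (Aeq, beq, lb, ub, nonlcon) are passed as [].
[x, fval] = fmincon(fun, x0, A, b, [], [], [], [], [], options);

% The constraint is active, so the solution sits on the line x1 + x2 = 2.
fprintf('x = [%.4f, %.4f], fval = %.4f\n', x(1), x(2), fval);
```

Because the unconstrained minimum (1, 2) violates the constraint, the constrained solution is the closest feasible point, (0.5, 1.5), which fminsearch alone could not enforce.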

MATLAB also offers derivative-based optimization algorithms that typically converge faster than fminsearch when gradient information is available. Algorithms such as sequential quadratic programming (SQP), the interior-point method, and the trust-region-reflective algorithm use gradients to navigate the optimization landscape more efficiently. This often results in faster convergence and more accurate results, especially in high-dimensional and complex optimization problems.

By combining these numerical optimization techniques with MATLAB's programming environment, users can adapt optimization algorithms to particular problem domains, including specific constraints, unusual problem structures, or performance needs.

Fields from engineering to science and beyond depend heavily on optimization. Among the functions in MATLAB's computational toolkit, fminsearch provides a robust way to minimize functions without relying on their gradients. Its implementation of the Nelder-Mead simplex technique has made it a common choice for optimization problems involving non-differentiable, noisy, or discontinuous functions.
