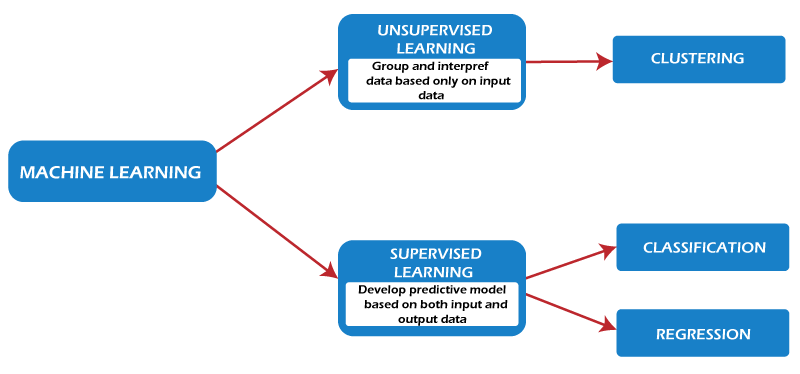

Machine Learning TechniquesMachine learning is a data analytics technique that teaches computers to do what comes naturally to humans and animals: learn from experience. Machine learning algorithms use computational methods to directly "learn" from data without relying on a predetermined equation as a model. As the number of samples available for learning increases, the algorithm adapts to improve performance. Deep learning is a special form of machine learning. How does machine learning work?Machine learning uses two techniques: supervised learning, which trains a model on known input and output data to predict future outputs, and unsupervised learning, which uses hidden patterns or internal structures in the input data.

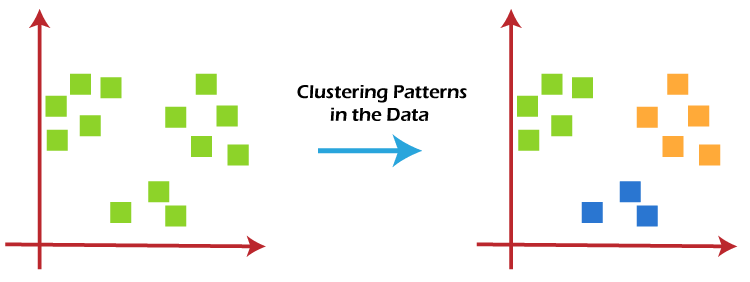

Supervised learningSupervised machine learning creates a model that makes predictions based on evidence in the presence of uncertainty. A supervised learning algorithm takes a known set of input data and known responses to the data (output) and trains a model to generate reasonable predictions for the response to the new data. Use supervised learning if you have known data for the output you are trying to estimate. Supervised learning uses classification and regression techniques to develop machine learning models. Classification models classify the input data. Classification techniques predict discrete responses. For example, the email is genuine, or spam, or the tumor is cancerous or benign. Typical applications include medical imaging, speech recognition, and credit scoring. Use taxonomy if your data can be tagged, classified, or divided into specific groups or classes. For example, applications for handwriting recognition use classification to recognize letters and numbers. In image processing and computer vision, unsupervised pattern recognition techniques are used for object detection and image segmentation. Common algorithms for performing classification include support vector machines (SVMs), boosted and bagged decision trees, k-nearest neighbors, Naive Bayes, discriminant analysis, logistic regression, and neural networks. Regression techniques predict continuous responses - for example, changes in temperature or fluctuations in electricity demand. Typical applications include power load forecasting and algorithmic trading. If you are working with a data range or if the nature of your response is a real number, such as temperature or the time until a piece of equipment fails, use regression techniques. Common regression algorithms include linear, nonlinear models, regularization, stepwise regression, boosted and bagged decision trees, neural networks, and adaptive neuro-fuzzy learning. Using supervised learning to predict heart attacks Physicians want to predict whether someone will have a heart attack within a year. They have data on previous patients, including age, weight, height, and blood pressure. They know if previous patients had had a heart attack within a year. So the problem is to combine existing data into a model that can predict whether a new person will have a heart attack within a year. Unsupervised LearningDetects hidden patterns or internal structures in unsupervised learning data. It is used to eliminate datasets containing input data without labeled responses. Clustering is a common unsupervised learning technique. It is used for exploratory data analysis to find hidden patterns and clusters in the data. Applications for cluster analysis include gene sequence analysis, market research, and commodity identification. For example, if a cell phone company wants to optimize the locations where they build towers, they can use machine learning to predict how many people their towers are based on. A phone can only talk to 1 tower at a time, so the team uses clustering algorithms to design the good placement of cell towers to optimize signal reception for their groups or groups of customers. Common algorithms for performing clustering are k-means and k-medoids, hierarchical clustering, Gaussian mixture models, hidden Markov models, self-organizing maps, fuzzy C-means clustering, and subtractive clustering.

Ten methods are described and it is a foundation you can build on to improve your machine learning knowledge and skills:

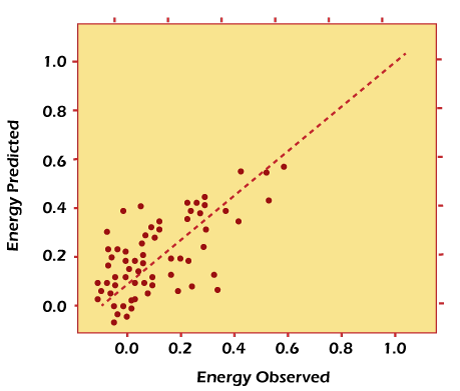

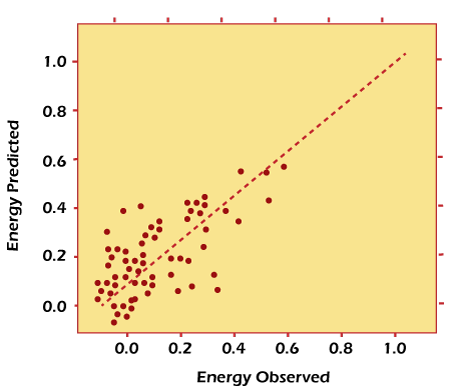

Let's differentiate between two general categories of machine learning: supervised and unsupervised. We apply supervised ML techniques when we have a piece of data that we want to predict or interpret. We use the previous and output data to predict the output based on the new input. For example, you can use supervised ML techniques to help a service business that wants to estimate the number of new users that will sign up for the service in the next month. In contrast, untrained ML looks at ways of connecting and grouping data points without using target variables to make predictions. In other words, it evaluates data in terms of traits and uses traits to group objects that are similar to each other. For example, you can use unsupervised learning techniques to help a retailer who wants to segment products with similar characteristics-without specifying in advance which features to use. 1. RegressionRegression methods fall under the category of supervised ML. They help predict or interpret a particular numerical value based on prior data, such as predicting an asset's price based on past pricing data for similar properties. The simplest method is linear regression, where we use the mathematical equation of the line (y = m * x + b) to model the data set. We train a linear regression model with multiple data pairs (x, y) by computing the position and slope of a line that minimizes the total distance between all data points and the line. In other words, we calculate the slope (M) and the y-intercept (B) for a line that best approximates the observations in the data. Let us consider a more concrete example of linear regression. I once used linear regression to predict the energy consumption (in kW) of some buildings by gathering together the age of the building, the number of stories, square feet, and the number of wall devices plugged in. Since there was more than one input (age, square feet, etc.), I used a multivariable linear regression. The principle was similar to a one-to-one linear regression. Still, in this case, the "line" I created occurred in a multi-dimensional space depending on the number of variables. Now imagine that you have access to the characteristics of a building (age, square feet, etc.), but you do not know the energy consumption. In this case, we can use the fitted line to estimate the energy consumption of the particular building. The plot below shows how well the linear regression model fits the actual energy consumption of the building. Note that you can also use linear regression to estimate the weight of each factor that contributes to the final prediction of energy consumed. For example, once you have a formula, you can determine whether age, size, or height are most important.

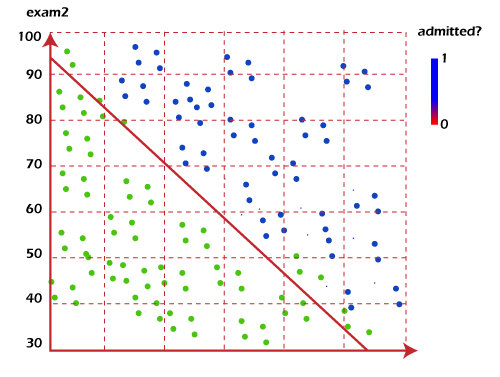

Linear regression model estimates of building energy consumption (kWh). Regression techniques run the gamut from simple (linear regression) to complex (regular linear regression, polynomial regression, decision trees, random forest regression, and neural nets). But don't get confused: start by studying simple linear regression, master the techniques, and move on. 2. ClassificationIn another class of supervised ML, classification methods predict or explain a class value. For example, they can help predict whether an online customer will purchase a product. Output can be yes or no: buyer or no buyer. But the methods of classification are not limited to two classes. For example, a classification method can help assess whether a given image contains a car or a truck. The simplest classification algorithm is logistic regression, which sounds like a regression method, but it is not. Logistic regression estimates the probability of occurrence of an event based on one or more inputs. For example, logistic regression can take two test scores for a student to predict that the student will get admission to a particular college. Because the guess is a probability, the output is a number between 0 and 1, where 1 represents absolute certainty. For the student, if the predicted probability is greater than 0.5, we estimate that they will be admitted. If the predicted probability is less than 0.5, we estimate it will be rejected. The chart below shows the marks of past students and whether they were admitted. Logistic regression allows us to draw a line that represents the decision boundary.

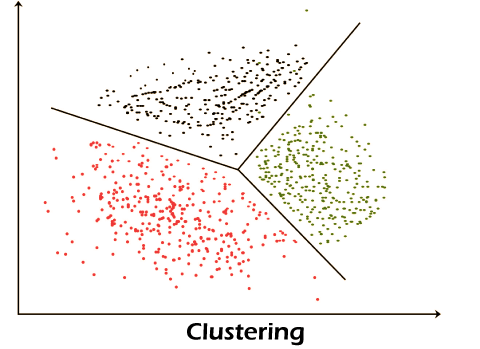

Because logistic regression is the simplest classification model, it is a good place to start for classification. As you progress, you can dive into nonlinear classifiers such as decision trees, random forests, support vector machines, and neural nets, among others. 3. ClusteringWe fall into untrained ML with clustering methods because they aim to group or group observations with similar characteristics. Clustering methods do not use the output information for training but instead let the algorithm define the output. In clustering methods, we can only use visualization to observe the quality of the solution. The most popular clustering method is K-Means, where "K" represents the number of clusters selected by the user. (Note that there are several techniques for selecting the value of K, such as the elbow method.)

Otherwise, we return to step 2. (To prevent ending in an infinite loop if the centers continue to change, set the maximum number of iterations in advance.) The process is over if the centers do not change (or change very little). The next plot applies the K-means to the building's data set. The four measurements pertain to air conditioning, plug-in appliances (microwave, refrigerator, etc.), household gas, and heating gas. Each column of the plot represents the efficiency of each building.

Linear regression model estimates of building energy consumption (kWh). Regression techniques run the gamut from simple (linear) to complex (regular linear, polynomial, decision trees, random forest, and neural nets). But don't get confused: start by studying simple linear regression, master the techniques, and move on.

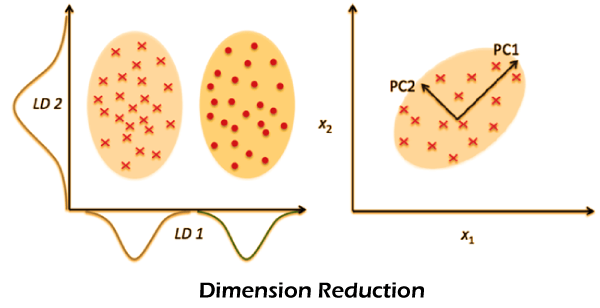

Clustering Buildings into Efficient (Green) and Inefficient (Red) Groups. As you explore clustering, you will come across very useful algorithms such as Density-based Spatial Clustering of Noise (DBSCAN), Mean Shift Clustering, Agglomerative Hierarchical Clustering, and Expectation-Maximization Clustering using the Gaussian Mixture Model, among others. 4. Dimensionality ReductionWe use dimensionality reduction to remove the least important information (sometimes unnecessary columns) from the data setFor example, and images may consist of thousands of pixels, which are unimportant to your analysis. Or, when testing microchips within the manufacturing process, you may have thousands of measurements and tests applied to each chip, many of which provide redundant information. In these cases, you need a dimensionality reduction algorithm to make the data set manageable. The most popular dimensionality reduction method is Principal Component Analysis (PCA), which reduces the dimensionality of the feature space by finding new vectors that maximize the linear variance of the data. (You can also measure the extent of information loss and adjust accordingly.) When the linear correlations of the data are strong, PCA can dramatically reduce the dimension of the data without losing too much information. Another popular method is t-stochastic neighbor embedding (t-SNE), which minimizes nonlinear dimensions. People usually use t-SNE for data visualization, but you can also use it for machine learning tasks such as feature space reduction and clustering, to mention a few. The next plot shows the analysis of the MNIST database of handwritten digits. MNIST contains thousands of images of numbers 0 to 9, which the researchers use to test their clustering and classification algorithms. Each row of the data set is a vector version of the original image (size 28 x 28 = 784) and a label for each image (zero, one, two, three, …, nine). Therefore, we are reducing the dimensionality from 784 (pixels) to 2 (the dimensions in our visualization). Projecting to two dimensions allows us to visualize higher-dimensional original data sets.

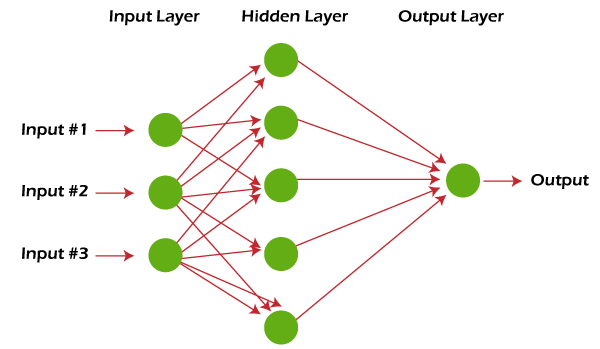

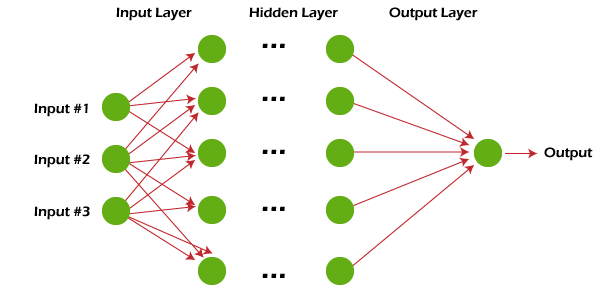

5. Ensemble MethodsImagine that you have decided to build a bicycle because you are not happy with the options available in stores and online. Once you've assembled these great parts, the resulting bike will outlast all other options. Each model uses the same idea of combining multiple predictive models (supervised ML) to obtain higher quality predictions than the model. For example, the Random Forest algorithm is an ensemble method that combines multiple decision trees trained with different samples from a data set. As a result, the quality of predictions of a random forest exceeds the quality of predictions predicted with a single decision tree. Think about ways to reduce the variance and bias of a single machine learning model. By combining the two models, the quality of the predictions becomes balanced. With another model, the relative accuracy may be reversed. It is important because any given model may be accurate under some conditions but may be inaccurate under other conditions. Most of the top winners of Kaggle competitions use some dressing method. The most popular ensemble algorithms are Random Forest, XGBoost, and LightGBM. 6. Neural networks and deep learningUnlike linear and logistic regression, which is considered linear models, neural networks aim to capture nonlinear patterns in data by adding layers of parameters to the model. The simple neural net has three inputs as in the image below, a hidden layer with five parameters and an output layer.

Neural network with a hidden layer. The neural network structure is flexible enough to construct our famous linear and logistic regression. The term deep learning comes from a neural net with many hidden layers and encompasses a variety of architectures. It is especially difficult to keep up with development in deep learning as the research and industry communities redouble their deep learning efforts, spawning whole new methods every day.

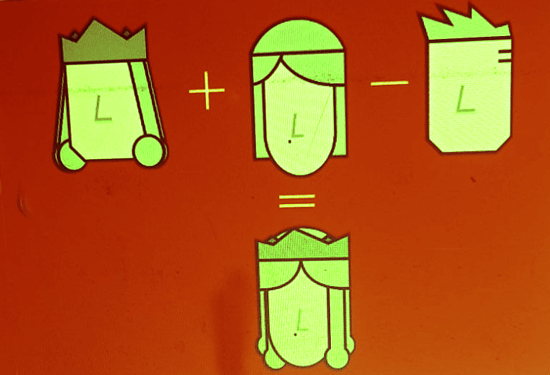

Deep learning: A neural network with multiple hidden layers. Deep learning techniques require a lot of data and computation power for best performance as this method is self-tuning many parameters within vast architectures. It quickly becomes clear why deep learning practitioners need powerful computers with GPUs (Graphical Processing Units). In particular, deep learning techniques have been extremely successful in vision (image classification), text, audio, and video. The most common software packages for deep learning are Tensorflow and PyTorch. 7. Transfer learningLet's say you are a data scientist working in the retail industry. You've spent months training a high-quality model to classify images as shirts, t-shirts, and polos. Your new task is to create a similar model to classify clothing images like jeans, cargo, casual, and dress pants. Transfer learning refers to reusing part of an already trained neural net and adapting it to a new but similar task. Specifically, once you train a neural net using the data for a task, you can move a fraction of the trained layers and combine them with some new layers that you can use for the new task. The new neural net can learn and adapt quickly to a new task by adding a few layers. The advantage of transfer learning is that you need fewer data to train a neural net, which is especially important because training for deep learning algorithms is expensive in terms of both time and money. The main advantage of transfer learning is that you need fewer data to train a neural net, which is especially important because training for deep learning algorithms is expensive both in terms of time and money (computational resources). Of course, it isn't easy to find enough labeled data for training. Let's come back to your example and assume that you use a neural net with 20 hidden layers for the shirt model. After running a few experiments, you realize that you can move the 18 layers of the shirt model and combine them with a new layer of parameters to train on the pant images. So the Pants model will have 19 hidden layers. The inputs and outputs of the two functions are different but reusable layers can summarize information relevant to both, for example, fabric aspects. Transfer learning has become more and more popular, and there are many concrete pre-trained models now available for common deep learning tasks such as image and text classification. 8. Reinforcement LearningImagine a mouse in a maze trying to find hidden pieces of cheese. At first, the Mouse may move randomly, but after a while, the Mouse's feel helps sense which actions bring it closer to the cheese. The more times we expose the Mouse to the maze, the better at finding the cheese. Process for Mouse refers to what we do with Reinforcement Learning (RL) to train a system or game. Generally speaking, RL is a method of machine learning that helps an agent to learn from experience. RL can maximize a cumulative reward by recording actions and using a trial-and-error approach in a set environment. In our example, the Mouse is the agent, and the maze is the environment. The set of possible actions for the Mouse is: move forward, backward, left, or right. The reward is cheese. You can use RL when you have little or no historical data about a problem, as it does not require prior information (unlike traditional machine learning methods). In the RL framework, you learn from the data as you go. Not surprisingly, RL is particularly successful with games, especially games of "correct information" such as chess and Go. With games, feedback from the agent and the environment comes quickly, allowing the model to learn faster. The downside of RL is that it can take a very long time to train if the problem is complex. As IBM's Deep Blue beat the best human chess player in 1997, the RL-based algorithm AlphaGo beat the best Go player in 2016. The current forerunners of RL are the teams of DeepMind in the UK. In April 2019, the OpenAI Five team was the first AI to defeat the world champion team of e-sport Dota 2, a very complex video game that the OpenAI Five team chose because there were no RL algorithms capable of winning it. You can tell that reinforcement learning is a particularly powerful form of AI, and we certainly want to see more progress from these teams. Still, it's also worth remembering the limitations of the method. 9. Natural Language ProcessingA large percentage of the world's data and knowledge is in some form of human language. For example, we can train our phones to autocomplete our text messages or correct misspelled words. We can also teach a machine to have a simple conversation with a human. Natural Language Processing (NLP) is not a machine learning method but a widely used technique for preparing text for machine learning. Think of many text documents in different formats (Word, online blog). Most of these text documents will be full of typos, missing characters, and other words that need to be filtered out. At the moment, the most popular package for processing text is NLTK (Natural Language Toolkit), created by Stanford researchers. The easiest way to map text to a numerical representation is to count the frequency of each word in each text document. Think of a matrix of integers where each row represents a text document, and each column represents a word. This matrix representation of the term frequency is usually called the term frequency matrix (TFM). We can create a more popular matrix representation of a text document by dividing each entry on the matrix by the weighting of how important each word is in the entire corpus of documents. We call this method Term Frequency Inverse Document Frequency (TFIDF), and it generally works better for machine learning tasks. 10. Word EmbeddingTFM and TFIDF are numerical representations of text documents that consider only frequency and weighted frequencies to represent text documents. In contrast, word embedding can capture the context of a word in a document. As with word context, embeddings can measure similarity between words, allowing us to perform arithmetic with words. Word2Vec is a neural net-based method that maps words in a corpus to a numerical vector. We can then use these vectors to find synonyms, perform arithmetic operations with words, or represent text documents (by taking the mean of all word vectors in the document). For example, we use a sufficiently large corpus of text documents to estimate word embeddings. Let's say vector('word') is the numeric vector representing the word 'word'. To approximate the vector ('female'), we can perform an arithmetic operation with the vectors: vector('king') + vector('woman') - vector('man') ~ vector('queen')

Arithmetic with Word (Vectors) Embeddings. The word representation allows finding the similarity between words by computing the cosine similarity between the vector representations of two words. The cosine similarity measures the angle between two vectors. We calculate word embedding's using machine learning methods, but this is often a pre-stage of implementing machine learning algorithms on top. For example, let's say we have access to the tweets of several thousand Twitter users. Let's also assume that we know which Twitter users bought the house. To estimate the probability of a new Twitter user buying a home, we can combine Word2Vec with logistic regression. You can train the word embedding yourself or get a pre-trained (transfer learning) set of word vectors. To download pre-trained word vectors in 157 different languages, look at Fast Text. SummaryStudying these methods thoroughly and fully understanding the basics of each can serve as a solid starting point for further study of more advanced algorithms and methods. There is no best way or one size fits all. Finding the right algorithm is partly just trial and error - even highly experienced data scientists can't tell whether an algorithm will work without trying it out. But algorithmic selection also depends on the size and type of data you're working with, the insights you want to derive from the data, and how those insights will be used.

Next TopicAutoML | Automated Machine Learning

|

For Videos Join Our Youtube Channel: Join Now

For Videos Join Our Youtube Channel: Join Now

Feedback

- Send your Feedback to [email protected]

Help Others, Please Share