Apriori Algorithm in Machine Learning

The Apriori algorithm uses frequent itemsets to generate association rules, and it is designed to work on databases that contain transactions. With the help of these association rules, it determines how strongly or how weakly two objects are connected. The algorithm uses a breadth-first search and a Hash Tree to count candidate itemsets efficiently. It is an iterative process for finding the frequent itemsets in a large dataset. The algorithm was proposed by R. Agrawal and R. Srikant in 1994. It is mainly used for market basket analysis, where it helps to find products that are often bought together. It can also be used in the healthcare field to find drug reactions in patients.

What is Frequent Itemset?

Frequent itemsets are itemsets whose support is greater than or equal to the threshold value, that is, the user-specified minimum support. This implies the Apriori property: if {A, B} is a frequent itemset, then A and B individually must also be frequent itemsets, because every transaction that contains both A and B also contains each of them.

Suppose there are two transactions: A = {1, 2, 3, 4, 5} and B = {2, 3, 7}. Items 2 and 3 appear in both transactions, so with a minimum support count of 2, {2}, {3}, and {2, 3} are the frequent itemsets.

Note: To better understand the Apriori algorithm and related terms such as support and confidence, it is recommended to first understand association rule learning.

Steps for Apriori Algorithm

Below are the steps for the Apriori algorithm:

Step-1: Determine the support of itemsets in the transactional database, and select the minimum support and confidence.
Step-2: Take all itemsets in the transactions whose support is at least the minimum (selected) support value.
Step-3: Find all the rules of these subsets whose confidence is at least the threshold or minimum confidence.
Step-4: Sort the rules in decreasing order of lift.
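The definition and the two-transaction example can be checked with a short brute-force sketch in Python. It simply enumerates every 1-item and 2-item combination and counts the transactions that contain it; it does not use Apriori's candidate pruning. The minimum support count of 2 is an assumption, chosen so that an itemset must appear in both transactions.

```python
from itertools import combinations

# The two transactions from the example above
transactions = [{1, 2, 3, 4, 5}, {2, 3, 7}]
MIN_SUPPORT_COUNT = 2  # assumed: an itemset must appear in both transactions


def support_count(itemset, transactions):
    """Number of transactions that contain every item of `itemset`."""
    return sum(1 for t in transactions if itemset <= t)


items = sorted(set().union(*transactions))
frequent = []
for k in (1, 2):  # brute force over all 1- and 2-item combinations
    for combo in combinations(items, k):
        itemset = frozenset(combo)
        if support_count(itemset, transactions) >= MIN_SUPPORT_COUNT:
            frequent.append(itemset)

print([sorted(s) for s in frequent])  # [[2], [3], [2, 3]]

# Apriori property: every non-empty proper subset of a frequent itemset is frequent
for itemset in frequent:
    for size in range(1, len(itemset)):
        for subset in combinations(itemset, size):
            assert frozenset(subset) in frequent
```

Brute force is fine for six items, but the number of combinations grows exponentially, which is why Apriori only counts candidates whose subsets are already known to be frequent.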
Apriori Algorithm Working

We will understand the Apriori algorithm using an example and mathematical calculation:

Example: Suppose we have the following dataset that contains various transactions. From this dataset, we need to find the frequent itemsets and generate the association rules using the Apriori algorithm:

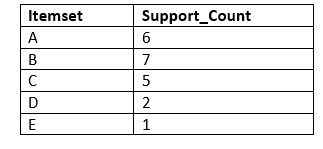

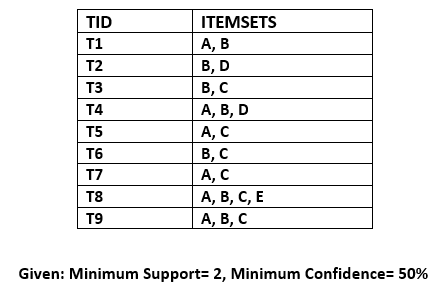

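Solution:

Step-1: Calculating C1 and L1 (C1 is the set of candidate 1-itemsets with their support counts; L1 keeps only the itemsets that meet the minimum support):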

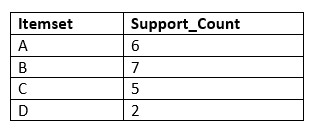
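Step-1 can be sketched in Python as follows. The transactions below are hypothetical (they are not the dataset shown in the images above), and the minimum support count of 2 is an assumed threshold. C1 counts every single item, and L1 keeps the items that meet the threshold.

```python
from collections import Counter

# Hypothetical transactions (not the dataset shown in the images above)
transactions = [
    {"bread", "milk", "eggs"},
    {"bread", "milk"},
    {"bread", "eggs"},
    {"milk", "eggs"},
    {"bread", "milk", "eggs"},
    {"bread", "butter"},
    {"milk", "butter"},
    {"jam"},
    {"bread", "butter"},
]
MIN_SUPPORT_COUNT = 2  # assumed threshold

# C1: every individual item with its support count
c1 = Counter(item for t in transactions for item in t)

# L1: keep only the items that meet the minimum support count
l1 = {item: count for item, count in c1.items() if count >= MIN_SUPPORT_COUNT}

print(sorted(c1.items()))
# [('bread', 6), ('butter', 3), ('eggs', 4), ('jam', 1), ('milk', 5)]
print(sorted(l1))
# ['bread', 'butter', 'eggs', 'milk']
```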

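Step-2: Candidate generation C2 and L2 (C2 contains the pairs formed from the items in L1, and L2 keeps the pairs that meet the minimum support):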

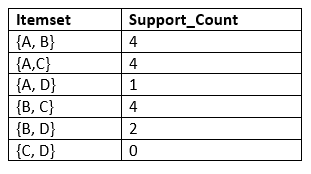
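A similar sketch for Step-2, on a small hypothetical set of transactions with an assumed minimum support count of 2: L1 is computed first, C2 is every pair of items from L1, and L2 keeps the pairs that occur in at least two transactions.

```python
from collections import Counter
from itertools import combinations

# Hypothetical transactions (not the dataset shown in the images above)
transactions = [
    {"bread", "milk", "eggs"},
    {"bread", "milk"},
    {"bread", "eggs"},
    {"milk", "eggs"},
    {"bread", "milk", "eggs"},
    {"bread", "butter"},
    {"milk", "butter"},
    {"jam"},
    {"bread", "butter"},
]
MIN_SUPPORT_COUNT = 2  # assumed threshold

# L1: frequent single items
item_counts = Counter(item for t in transactions for item in t)
l1 = sorted(i for i, n in item_counts.items() if n >= MIN_SUPPORT_COUNT)

# C2: all pairs that can be formed from the frequent single items
c2 = [frozenset(pair) for pair in combinations(l1, 2)]

# Count how many transactions contain each candidate pair
c2_counts = {cand: sum(1 for t in transactions if cand <= t) for cand in c2}

# L2: candidate pairs that meet the minimum support count
l2 = {cand: n for cand, n in c2_counts.items() if n >= MIN_SUPPORT_COUNT}

for pair, n in sorted(l2.items(), key=lambda kv: sorted(kv[0])):
    print(sorted(pair), n)
# ['bread', 'butter'] 2
# ['bread', 'eggs'] 3
# ['bread', 'milk'] 3
# ['eggs', 'milk'] 3
```

Here C2 has six pairs, but {butter, eggs} (count 0) and {butter, milk} (count 1) fall below the threshold, so L2 keeps four.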

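Step-3: Candidate generation C3 and L3 (C3 is formed by combining the itemsets in L2, and L3 keeps the candidates that meet the minimum support):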

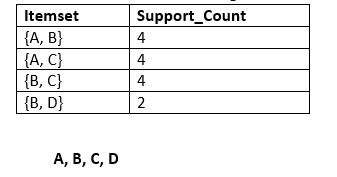

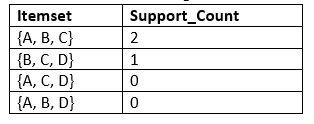
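For k ≥ 3, candidates are usually generated with a join-and-prune step, which is where the Apriori property pays off. The sketch below uses a hypothetical helper named apriori_gen and hypothetical transactions with an assumed minimum support count of 2. It joins two sorted (k-1)-itemsets that share their first k-2 items, then discards any candidate that has an infrequent (k-1)-subset before support is counted.

```python
from itertools import combinations

# Hypothetical transactions (not the dataset shown in the images above)
transactions = [
    {"bread", "milk", "eggs"},
    {"bread", "milk"},
    {"bread", "eggs"},
    {"milk", "eggs"},
    {"bread", "milk", "eggs"},
    {"bread", "butter"},
    {"milk", "butter"},
    {"jam"},
    {"bread", "butter"},
]
MIN_SUPPORT_COUNT = 2  # assumed threshold

# L2: the frequent 2-itemsets of these transactions at this threshold
l2 = [frozenset(p) for p in [("bread", "butter"), ("bread", "eggs"),
                             ("bread", "milk"), ("eggs", "milk")]]


def apriori_gen(prev_frequent, k):
    """Hypothetical helper: candidate k-itemsets from frequent (k-1)-itemsets."""
    prev = sorted(tuple(sorted(s)) for s in prev_frequent)
    prev_set = set(prev)
    candidates = []
    for i in range(len(prev)):
        for j in range(i + 1, len(prev)):
            a, b = prev[i], prev[j]
            # Join: both itemsets must share the same first k-2 items
            if a[:k - 2] != b[:k - 2]:
                continue
            cand = a + (b[-1],)
            # Prune: every (k-1)-subset of the candidate must be frequent
            if all(sub in prev_set for sub in combinations(cand, k - 1)):
                candidates.append(frozenset(cand))
    return candidates


c3 = apriori_gen(l2, 3)
l3 = [c for c in c3
      if sum(1 for t in transactions if c <= t) >= MIN_SUPPORT_COUNT]

print([sorted(c) for c in c3])  # [['bread', 'eggs', 'milk']]
print([sorted(c) for c in l3])  # [['bread', 'eggs', 'milk']]
```

The join produces three candidates, but {bread, butter, eggs} and {bread, butter, milk} are pruned because {butter, eggs} and {butter, milk} are not in L2, so only {bread, eggs, milk} has to be counted against the transactions.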

Step-4: Finding the association rules for the subsets:

To generate the association rules, we first create a new table with the possible rules from the frequent itemset {A, B, C}. For every rule, we calculate the confidence using the formula Confidence(X → Y) = sup(X ∪ Y) / sup(X); for example, Confidence(A ^ B → C) = sup(A, B, C) / sup(A, B). After calculating the confidence value for all rules, we exclude the rules whose confidence is less than the minimum threshold (50%). Consider the below table:
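The same confidence calculation can be sketched in Python. The transactions are hypothetical (not the dataset in the images above), and {bread, eggs, milk}, which has a support count of 2 in them, plays the role of {A, B, C}. The sketch builds every rule X → Y from the itemset, computes sup(X ∪ Y) / sup(X), and keeps the rules at or above 50% confidence.

```python
from itertools import combinations

# Hypothetical transactions (not the dataset shown in the images above)
transactions = [
    {"bread", "milk", "eggs"},
    {"bread", "milk"},
    {"bread", "eggs"},
    {"milk", "eggs"},
    {"bread", "milk", "eggs"},
    {"bread", "butter"},
    {"milk", "butter"},
    {"jam"},
    {"bread", "butter"},
]
MIN_CONFIDENCE = 0.5  # the 50% threshold used in the example


def support_count(itemset):
    """Number of transactions containing every item of `itemset`."""
    return sum(1 for t in transactions if itemset <= t)


frequent_itemset = frozenset({"bread", "eggs", "milk"})

rules = []
for size in range(1, len(frequent_itemset)):
    for antecedent in combinations(sorted(frequent_itemset), size):
        x = frozenset(antecedent)
        y = frequent_itemset - x
        # Confidence(X -> Y) = sup(X ∪ Y) / sup(X)
        confidence = support_count(frequent_itemset) / support_count(x)
        rules.append((sorted(x), sorted(y), confidence))

for x, y, conf in rules:
    status = "kept" if conf >= MIN_CONFIDENCE else "excluded"
    print(f"{' ^ '.join(x)} -> {' ^ '.join(y)}: confidence = {conf:.2f} ({status})")
# bread -> eggs ^ milk: confidence = 0.33 (excluded)
# eggs -> bread ^ milk: confidence = 0.50 (kept)
# milk -> bread ^ eggs: confidence = 0.40 (excluded)
# bread ^ eggs -> milk: confidence = 0.67 (kept)
# bread ^ milk -> eggs: confidence = 0.67 (kept)
# eggs ^ milk -> bread: confidence = 0.67 (kept)
```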

Since the given threshold (minimum confidence) is 50%, the first three rules, A ^ B → C, B ^ C → A, and A ^ C → B, can be considered strong association rules for the given problem.

Advantages of Apriori Algorithm

Disadvantages of Apriori Algorithm

Python Implementation of Apriori Algorithm

Now we will see the practical implementation of the Apriori algorithm. To implement it, we take the problem of a retailer who wants to find associations between his shop's products so that he can offer "Buy this and Get that" deals to his customers. The retailer has a dataset that contains a list of transactions made by his customers. In the dataset, each row shows the products purchased in one transaction. To solve this problem, we will perform the below steps:

1. Data Pre-processing Step:

The first step is data pre-processing. In this step, we first import the libraries. The code for this is given below:

Before importing the libraries, we install the apyori package (for example, with pip install apyori), since the Spyder IDE does not include it. Below is the code to import the libraries that will be used for different tasks of the model:

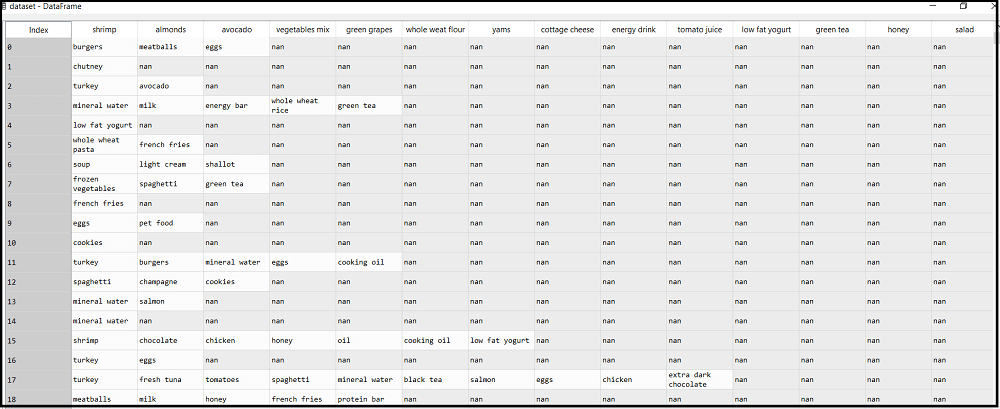

In the above code, the first line imports the dataset into a pandas DataFrame. The second line of the code is needed because the apriori() function that we will use to train our model expects the dataset as a list of transactions. So, we create an empty list of transactions and fill it with the itemsets in rows 0 to 7500; the upper bound passed to range() is 7501 because Python's range() excludes its end value. The dataset looks like the below image:
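As a minimal, hypothetical sketch of this conversion, the transactions can also be built with Python's standard csv module instead of pandas. The CSV content below is made up and stands in for the real file. Because rows contain different numbers of products, the shorter rows end in empty cells, and the sketch drops those so that padding never becomes an "item".

```python
import csv
import io

# Hypothetical CSV content: one transaction per row, no header row,
# shorter transactions padded with empty cells
raw_csv = io.StringIO(
    "shrimp,pasta,olive oil\n"
    "light cream,chicken,\n"
    "honey,,\n"
)

transactions = []
for row in csv.reader(raw_csv):
    # Keep only non-empty cells so padding never becomes an "item"
    transactions.append([cell.strip() for cell in row if cell.strip()])

print(transactions)
# [['shrimp', 'pasta', 'olive oil'], ['light cream', 'chicken'], ['honey']]
```

To read a real file, pass a file object opened with newline='' to csv.reader. With pandas, keep in mind that read_csv treats the first row as column names unless header=None is passed, and that empty cells are read as NaN values, which should be filtered out before the transactions are built.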

2. Training the Apriori Model on the dataset

To train the model, we use the apriori function imported from the apyori package. This function trains on the dataset and returns the association rules it finds. Consider the below code:

In the above code, the first line imports the apriori function. In the second line, the apriori function returns its output as the rules. It takes the following parameters:
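This is not the apyori implementation. As a hedged, pure-Python illustration of what minimum support, confidence, and lift thresholds do, the hypothetical helper pair_rules below mines rules between single items (like the two-item rules in the output) on hypothetical transactions and sorts them by lift as in Step-4. It uses lift(X → Y) = sup(X ∪ Y) / (sup(X) × sup(Y)), which equals confidence / sup(Y).

```python
from itertools import combinations


def pair_rules(transactions, min_support, min_confidence, min_lift):
    """Hypothetical helper: mine single-item rules X -> Y from transactions."""
    n = len(transactions)
    baskets = [set(t) for t in transactions]

    def support(*items):
        # Fraction of transactions that contain all of `items`
        return sum(1 for basket in baskets if set(items) <= basket) / n

    rules = []
    for a, b in combinations(sorted(set().union(*baskets)), 2):
        pair_support = support(a, b)
        if pair_support < min_support:
            continue  # infrequent pair: neither direction can qualify
        for x, y in ((a, b), (b, a)):
            confidence = pair_support / support(x)
            lift = pair_support / (support(x) * support(y))
            if confidence >= min_confidence and lift >= min_lift:
                rules.append((x, y, pair_support, confidence, lift))
    # Step-4: highest lift first (ties broken by confidence)
    return sorted(rules, key=lambda r: (r[4], r[3]), reverse=True)


# Hypothetical transactions, not the retailer's dataset
transactions = [
    ["light cream", "chicken"],
    ["light cream", "chicken", "pasta"],
    ["pasta", "shrimp"],
    ["pasta", "shrimp", "chicken"],
    ["pasta"],
    ["chicken"],
    ["chicken", "olive oil"],
    ["olive oil", "pasta"],
    ["shrimp"],
    ["chicken", "pasta"],
]

for x, y, sup, conf, lift in pair_rules(transactions, min_support=0.15,
                                        min_confidence=0.3, min_lift=1.0):
    print(f"{x} -> {y}: support={sup:.2f}, confidence={conf:.2f}, lift={lift:.2f}")
# light cream -> chicken: support=0.20, confidence=1.00, lift=1.67
# chicken -> light cream: support=0.20, confidence=0.33, lift=1.67
# shrimp -> pasta: support=0.20, confidence=0.67, lift=1.11
# pasta -> shrimp: support=0.20, confidence=0.33, lift=1.11
```

The pair {chicken, pasta} is frequent (support 0.30) but is dropped by the lift threshold, because its lift of about 0.83 means the two items occur together less often than if they were independent.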

3. Visualizing the result

Now we will visualize the output of our apriori model. Here we will follow some more steps, which are given below:

By executing the above lines of code, we get 9 rules. Consider the below output:

Output:

[RelationRecord(items=frozenset({'chicken', 'light cream'}), support=0.004533333333333334, ordered_statistics=[OrderedStatistic(items_base=frozenset({'light cream'}), items_add=frozenset({'chicken'}), confidence=0.2905982905982906, lift=4.843304843304844)]),

RelationRecord(items=frozenset({'escalope', 'mushroom cream sauce'}), support=0.005733333333333333, ordered_statistics=[OrderedStatistic(items_base=frozenset({'mushroom cream sauce'}), items_add=frozenset({'escalope'}), confidence=0.30069930069930073, lift=3.7903273197390845)]),

RelationRecord(items=frozenset({'escalope', 'pasta'}), support=0.005866666666666667, ordered_statistics=[OrderedStatistic(items_base=frozenset({'pasta'}), items_add=frozenset({'escalope'}), confidence=0.37288135593220345, lift=4.700185158809287)]),

RelationRecord(items=frozenset({'fromage blanc', 'honey'}), support=0.0033333333333333335, ordered_statistics=[OrderedStatistic(items_base=frozenset({'fromage blanc'}), items_add=frozenset({'honey'}), confidence=0.2450980392156863, lift=5.178127589063795)]),

RelationRecord(items=frozenset({'ground beef', 'herb & pepper'}), support=0.016, ordered_statistics=[OrderedStatistic(items_base=frozenset({'herb & pepper'}), items_add=frozenset({'ground beef'}), confidence=0.3234501347708895, lift=3.2915549671393096)]),

RelationRecord(items=frozenset({'tomato sauce', 'ground beef'}), support=0.005333333333333333, ordered_statistics=[OrderedStatistic(items_base=frozenset({'tomato sauce'}), items_add=frozenset({'ground beef'}), confidence=0.37735849056603776, lift=3.840147461662528)]),

RelationRecord(items=frozenset({'olive oil', 'light cream'}), support=0.0032, ordered_statistics=[OrderedStatistic(items_base=frozenset({'light cream'}), items_add=frozenset({'olive oil'}), confidence=0.20512820512820515, lift=3.120611639881417)]),

RelationRecord(items=frozenset({'olive oil', 'whole wheat pasta'}), support=0.008, ordered_statistics=[OrderedStatistic(items_base=frozenset({'whole wheat pasta'}), items_add=frozenset({'olive oil'}), confidence=0.2714932126696833, lift=4.130221288078346)]),

RelationRecord(items=frozenset({'pasta', 'shrimp'}), support=0.005066666666666666, ordered_statistics=[OrderedStatistic(items_base=frozenset({'pasta'}), items_add=frozenset({'shrimp'}), confidence=0.3220338983050848, lift=4.514493901473151)])]

As we can see, the above output is in a form that is not easy to read. So, we will print all the rules in a more readable format.
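A hedged sketch of such a printout is shown below. The rule's direction comes from the items_base and items_add fields of each ordered statistic, not from the unordered items frozenset. So that the example runs without apyori, RelationRecord and OrderedStatistic are redefined here as local namedtuples with the field names that appear in the raw output above (an assumption about their shape), filled with the values of the first rule; format_record is a hypothetical helper.

```python
from collections import namedtuple

# Local stand-ins using the field names shown in the raw output (assumed shape)
OrderedStatistic = namedtuple(
    "OrderedStatistic", ["items_base", "items_add", "confidence", "lift"])
RelationRecord = namedtuple(
    "RelationRecord", ["items", "support", "ordered_statistics"])

# First record from the raw output above
results = [
    RelationRecord(
        items=frozenset({"chicken", "light cream"}),
        support=0.004533333333333334,
        ordered_statistics=[OrderedStatistic(
            items_base=frozenset({"light cream"}),
            items_add=frozenset({"chicken"}),
            confidence=0.2905982905982906,
            lift=4.843304843304844,
        )],
    ),
]


def format_record(record):
    """Hypothetical helper: one readable block per rule, antecedent first."""
    lines = []
    for stat in record.ordered_statistics:
        base = ", ".join(sorted(stat.items_base))
        add = ", ".join(sorted(stat.items_add))
        lines.append(f"Rule: {base} -> {add}")
        lines.append(f"Support: {record.support:.4f}")
        lines.append(f"Confidence: {stat.confidence:.2%}")
        lines.append(f"Lift: {stat.lift:.2f}")
    return "\n".join(lines)


for record in results:
    print(format_record(record))
    print("=" * 37)
# Rule: light cream -> chicken
# Support: 0.0045
# Confidence: 29.06%
# Lift: 4.84
# =====================================
```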

By executing the above lines of code, we get the below output:

Output:

Rule: chicken -> light cream
Support: 0.004533333333333334
Confidence: 0.2905982905982906
Lift: 4.843304843304844
=====================================
Rule: escalope -> mushroom cream sauce
Support: 0.005733333333333333
Confidence: 0.30069930069930073
Lift: 3.7903273197390845
=====================================
Rule: escalope -> pasta
Support: 0.005866666666666667
Confidence: 0.37288135593220345
Lift: 4.700185158809287
=====================================
Rule: fromage blanc -> honey
Support: 0.0033333333333333335
Confidence: 0.2450980392156863
Lift: 5.178127589063795
=====================================
Rule: ground beef -> herb & pepper
Support: 0.016
Confidence: 0.3234501347708895
Lift: 3.2915549671393096
=====================================
Rule: tomato sauce -> ground beef
Support: 0.005333333333333333
Confidence: 0.37735849056603776
Lift: 3.840147461662528
=====================================
Rule: olive oil -> light cream
Support: 0.0032
Confidence: 0.20512820512820515
Lift: 3.120611639881417
=====================================
Rule: olive oil -> whole wheat pasta
Support: 0.008
Confidence: 0.2714932126696833
Lift: 4.130221288078346
=====================================
Rule: pasta -> shrimp
Support: 0.005066666666666666
Confidence: 0.3220338983050848
Lift: 4.514493901473151
=====================================

From the above output, we can analyze each rule. Note that each printed rule lists the two items in the same order as the items field of the raw output, which does not indicate the rule's direction; the items_base and items_add fields do, so the first rule is actually light cream → chicken. This rule states that customers who buy light cream buy chicken far more often than an average customer does (lift ≈ 4.84). Its support is 0.0045, which means light cream and chicken appear together in about 0.45% of all transactions, and its confidence is 29%, which means about 29% of the transactions that contain light cream also contain chicken. We can interpret the other rules in the same way.
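The printed values are linked by the definitions of confidence and lift, so the support of each individual item can be recovered from them. A small arithmetic check for the first rule, using confidence = sup(X ∪ Y) / sup(X) and lift = confidence / sup(Y) with X = light cream and Y = chicken:

```python
# Values printed for the first rule (light cream -> chicken)
support_pair = 0.004533333333333334  # sup(light cream ∪ chicken)
confidence = 0.2905982905982906      # sup(pair) / sup(light cream)
lift = 4.843304843304844             # confidence / sup(chicken)

# Rearranging the two definitions gives the single-item supports
support_light_cream = support_pair / confidence
support_chicken = confidence / lift

print(f"sup(light cream) = {support_light_cream:.4f}")  # sup(light cream) = 0.0156
print(f"sup(chicken) = {support_chicken:.4f}")          # sup(chicken) = 0.0600
print(f"lift = {confidence / support_chicken:.2f}")     # lift = 4.84
```

So light cream appears in about 1.56% of transactions and chicken in about 6%. Because about 29% of light-cream transactions contain chicken, compared with 6% of all transactions, the lift is roughly 0.29 / 0.06 ≈ 4.84.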
