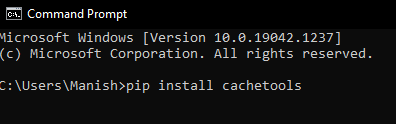

Python Cachetools Module

Most of us have heard the word "cache", but not everyone knows exactly what it means. In computing, a cache is a hardware or software component that stores data so that future requests for the same data can be served faster. The data held in a cache may be the result of an earlier computation or a copy of data that is stored somewhere else. A cache is usually much smaller than the full data it draws from, and serving repeated requests from it gives results in less time.

Python offers several modules for caching, which let a program keep results in memory and reuse them instead of computing them again. One such module is Cachetools, and it is the subject of this tutorial. We will look at the Cachetools Module and its functions, and at how they help speed up a program by caching results.

Cachetools Module in Python

Cachetools Module: Introduction

Cachetools is a Python module that provides memoizing collections and decorators. The caches it provides live in the memory of the running Python program, and we can use them to make Python programs run faster. The Cachetools Module also includes variants of the @lru_cache decorator from Python's functools module.
Cachetools Module: Installation

Cachetools is not part of Python's standard library, so we have to install it before we can use it. In this section, we install it from the command prompt with the pip installer. Open a terminal and run the following pip command:

    pip install cachetools

When we press the enter key, the pip installer will start installing the Cachetools Module in our system through the terminal.

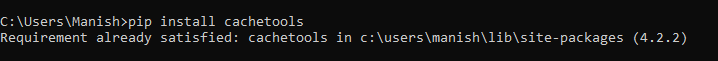

As we can see, the Cachetools Module is successfully installed, and we can now import it in a Python program.

Cachetools Module: Functions

Here, we will look at five names provided by the Cachetools Module. Note that only cached is itself a decorator. The other four are cache classes that implement different eviction strategies, and in this tutorial each of them is used by passing an instance to the cached decorator. The five are:

- cached
- TTLCache
- RRCache
- LRUCache
- LFUCache
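A minimal sketch of how these pieces fit together, assuming cachetools is installed: a cache class such as LRUCache behaves like a size-limited dictionary, and cached is the decorator that stores a function's results in such an object. The names store, double and calls are hypothetical and chosen only for illustration.

```python
from cachetools import LRUCache, cached

# A cache class can be used directly, like a dictionary with a size limit.
store = LRUCache(maxsize=2)
store["x"] = 10
store["y"] = 20

calls = []  # records every real execution of double()

# cached is the decorator; it is given the cache object that holds results.
@cached(cache=LRUCache(maxsize=8))
def double(n):
    calls.append(n)
    return n * 2

double(4)  # runs the function and stores the result
double(4)  # same argument: the stored result is returned

print(store["x"], len(store), calls)
```

The second call to double(4) does not append to calls, which is how the sketch shows that the result came from the cache.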

Now, we will study each of them in detail and use each one in a Python program.

Cached Function

cached is a decorator that memoizes a function. The first call with a given set of arguments runs the function and stores the result in a cache; later calls with the same arguments return the stored result instead of running the function again. In its simplest form, it stores the results in a plain dictionary.

Syntax: The decorator is written on the line above the function definition, for example @cached(cache={}) above a placeholder function named any_funct() whose body is a pass statement.

Parameters: cached takes the cache object that will hold the results. The decorated function, any_funct(), may take parameters of its own. In a real program, we replace the pass statement with the actual logic of the function.

Now, let's use the cached decorator in a Python program to understand how it works and how it saves time.

Example 1: A program that times the same function, fiban(), without and with the cached decorator.

Output:

    Result of operation: 1346269
    Time Taken by function without cache: 0.8905675411224365
    Result of operation: 1346269
    Time Taken by function with cached: 0.015604496002197266

Explanation: We first imported the cached decorator from the Cachetools Module, along with the time module so that we can measure how much faster the cached function is. We then defined fiban(), which computes and returns a value. We recorded the time with time() around a call to fiban() with an argument, and then printed the result and the time the call took.
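The comparison in Example 1 can be sketched as follows. This is a reconstruction under assumptions, not the original listing: it assumes fiban() is a recursive Fibonacci function and that the argument was 31, which is consistent with the result 1346269 in the output. The names fiban_plain and fiban_cached are hypothetical, and time.perf_counter() is used here in place of time() because it is better suited to measuring short intervals.

```python
import time
from cachetools import cached

def fiban_plain(n):
    # Plain recursion: the same sub-results are recomputed many times.
    return n if n < 2 else fiban_plain(n - 1) + fiban_plain(n - 2)

@cached(cache={})  # a plain dict works as a simple, unbounded cache
def fiban_cached(n):
    # Recursive calls go through the cache, so each n is computed once.
    return n if n < 2 else fiban_cached(n - 1) + fiban_cached(n - 2)

start = time.perf_counter()
plain_result = fiban_plain(31)
plain_time = time.perf_counter() - start

start = time.perf_counter()
cached_result = fiban_cached(31)
cached_time = time.perf_counter() - start

print("Result of operation:", plain_result)
print("Time taken without cache:", plain_time)
print("Result of operation:", cached_result)
print("Time taken with cached:", cached_time)
```

The exact timings depend on the machine, so only the two results, not the times, should be expected to match the output shown above.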
After that, we applied the cached decorator to the same function, fiban(), with the same logic inside it, and again measured the call with time(). We called fiban() with the same argument and printed the result and the time taken with the cached decorator. The output shows that the uncached call took far longer than the cached one, even though both functions have the same logic and were called with the same argument. This is how the cached decorator saves time: results are stored in the cache and reused instead of being computed again.

TTLCache Function

TTLCache stands for "Time to Live" cache. It is a cache class in the Cachetools package, and it is used by passing an instance to the cached decorator. It differs from the simple cache above in that each entry is kept only for a fixed time. Once that time has passed, the entry expires, and the next call with the same arguments runs the function again.

Syntax: A TTLCache instance is passed as the cache argument of the cached decorator, which is written above the function definition.

Parameters: Unlike the simple cached syntax, here we specify TTLCache as the cache type and give it two parameters:

- maxsize: the maximum number of entries the cache can hold.
- ttl: the time, in seconds, for which an entry stays in the cache before it expires.
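The two TTLCache parameters, maxsize and ttl, can be seen at work in a small sketch. It assumes cachetools is installed. The function name greet and the list calls are hypothetical, and the ttl is set to half a second (rather than a long interval) purely so that the demonstration finishes quickly.

```python
import time
from cachetools import TTLCache, cached

calls = []  # records every real execution of greet()

# At most 64 entries; each entry expires 0.5 seconds after it is stored.
@cached(cache=TTLCache(maxsize=64, ttl=0.5))
def greet(n):
    calls.append(n)
    return f"I am executed with the function number: {n}"

first = greet(4)   # runs the function and caches the result
second = greet(4)  # within the ttl: served from the cache
time.sleep(0.6)    # wait until the cached entry has expired
third = greet(4)   # entry expired: the function runs again

print(calls)
```

The list calls ends up with two entries, one for the first call and one for the call made after the entry expired.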

Now, let's use TTLCache in a Python program to see how it works and how it is useful.

Example 2: A program that caches a function with TTLCache, calls it twice, waits, and then calls it a third time.

Output:

    Total time taken for executing the function: 4.015438556671143
    I am executed with the function number: 4
    I am executed with the function number: 4
    Total time taken for executing the function: 4.015343904495239
    I am executed with the function number: 4

Explanation: We imported cached and TTLCache from the Cachetools Module, along with the time module to measure the time taken by each call. We created the TTLCache with maxsize equal to 64 and a time to live of 90 seconds. We then defined the function and used time() to measure how long it takes to run. After that, we called the function twice in print statements, paused with sleep(), and called the function a third time. The pause was 100 seconds because the cache keeps an entry for only 90 seconds. The output shows that the second call was served from the cache, since no timing line is printed for it. After the pause, the entry had expired, so the function ran again.

RRCache

RRCache is short for Random Replacement Cache. As the name suggests, when the cache is full and space is needed for a new entry, RRCache picks one of the existing entries at random and discards it.
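Random replacement can be illustrated with a small sketch, assuming cachetools is installed. The names cache, square and calls are hypothetical. Because the discarded entry is chosen at random, the sketch only relies on facts that hold on every run: the cache never grows beyond maxsize, and the result that was just stored is still there.

```python
from cachetools import RRCache, cached

cache = RRCache(maxsize=2)  # room for two results only
calls = []                  # records every real execution of square()

@cached(cache)
def square(n):
    calls.append(n)
    return n * n

for n in (1, 2, 3):
    square(n)  # the third call forces one earlier entry out at random

square(3)      # the newest result is still cached, so nothing runs

print(len(cache), calls)
```

Whether a later call to square(1) or square(2) runs the function again depends on which of the two entries happened to be discarded.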
Syntax: An RRCache instance is passed as the cache argument of the cached decorator, which is written above the function definition.

Parameters: RRCache has one required parameter, maxsize, which has the same meaning as the maxsize parameter of TTLCache. It also accepts an optional choice parameter, which defaults to random.choice and is the function used to pick the entry to discard.

Now, let's use RRCache in a Python program to see how it works and how it is useful.

Example 3: A program that caches a function with RRCache and calls it repeatedly with different arguments.

Output:

    Total time taken for executing the function: 2.01472806930542
    I am executed with the function number: 2
    Total time taken for executing the function: 3.01532244682312
    I am executed with the function number: 3
    Total time taken for executing the function: 1.015653133392334
    I am executed with the function number: 1
    I am executed with the function number: 2
    I am executed with the function number: 2
    Total time taken for executing the function: 4.003695011138916
    I am executed with the function number: 4
    I am executed with the function number: 3
    I am executed with the function number: 3
    I am executed with the function number: 2

Explanation: The output shows the behaviour of RRCache. A timing line marks a call that actually ran the function, and the repeated calls without a timing line were served from the cache. When RRCache needs space, the entry it drops is chosen at random, so the result can differ from one run to the next.

LRUCache

Like TTLCache and RRCache, LRUCache is used by passing an instance to the cached decorator. LRUCache stands for Least Recently Used Cache. As the name suggests, it keeps the most recently used entries and, when it is full, discards the entry that was used least recently.
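A minimal sketch of least-recently-used eviction, assuming cachetools is installed. The names cache, square and calls are hypothetical, and maxsize is set to 2 only to make the eviction easy to follow.

```python
from cachetools import LRUCache, cached

cache = LRUCache(maxsize=2)
calls = []  # records every real execution of square()

@cached(cache)
def square(n):
    calls.append(n)
    return n * n

square(1)  # runs; cache holds 1
square(2)  # runs; cache holds 1 and 2
square(1)  # cached; 1 becomes the most recently used entry
square(3)  # runs; cache is full, so 2 (least recently used) is discarded
square(1)  # still cached
square(2)  # was discarded, so the function runs again

print(calls)  # [1, 2, 3, 2]
```

Reading an entry counts as using it, which is why the result for 1 survives while the result for 2 is discarded.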
Syntax: An LRUCache instance is passed as the cache argument of the cached decorator, which is written above the function definition.

Parameters: LRUCache takes one required argument, maxsize, which sets how many results are kept in the cache.

Now, let's use LRUCache in a Python program to see how it works and how it is useful.

Example 4: A program that caches a function with LRUCache and calls it repeatedly with different arguments.

Output:

    Total time taken for calling the default function: 2.0151138305664062
    I am executed with the function number: 2
    Total time taken for calling the default function: 3.015472888946533
    I am executed with the function number: 3
    Total time taken for calling the default function: 1.0000226497650146
    I am executed with the function number: 1
    I am executed with the function number: 2
    I am executed with the function number: 2
    Total time taken for calling the default function: 4.015437841415405
    I am executed with the function number: 4
    I am executed with the function number: 3

Explanation: We called the function 7 times, which is 2 more than the maxsize of 5 that we set for the LRUCache. In the output, a timing line appears only the first time each argument (2, 3, 1 and 4) is used; the repeated calls with 2 and 3 return straight from the cache. Note that maxsize limits the number of distinct results stored, not the number of calls. Only four distinct arguments were used here, so nothing was discarded. Once the cache holds 5 results, storing a new one discards the result that was used least recently.

LFUCache

LFUCache is one more caching strategy. It stands for Least Frequently Used Cache, and it is also used through the cached decorator. LFUCache keeps track of how often each entry in the cache is accessed.
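A minimal sketch of least-frequently-used eviction, assuming cachetools is installed. The names cache, square and calls are hypothetical, and maxsize is set to 2 only to make the eviction easy to follow.

```python
from cachetools import LFUCache, cached

cache = LFUCache(maxsize=2)
calls = []  # records every real execution of square()

@cached(cache)
def square(n):
    calls.append(n)
    return n * n

square(1)  # runs; cache holds 1
square(1)  # cached; the use count of 1 goes up
square(1)  # cached; the use count of 1 goes up again
square(2)  # runs; cache holds 1 and 2
square(3)  # runs; cache is full, so 2 (used least often) is discarded
square(1)  # still cached
square(2)  # was discarded, so the function runs again

print(calls)  # [1, 2, 3, 2]
```

An LRUCache of the same size would behave differently at the call to square(3): it would discard 1, the entry used least recently, even though 1 had been used most often.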
In LFUCache, the entries that have been accessed least often are the first to be discarded when the cache needs to free up space.

Syntax: An LFUCache instance is passed as the cache argument of the cached decorator, which is written above the function definition.

Parameters: LFUCache takes one required argument, maxsize, which sets how many results are kept in the cache. When the cache is full and a new result has to be stored, the result that has been accessed the fewest times is discarded.

Now, let's use LFUCache in a Python program to see how it works and how it is useful.

Example 5: A program that caches a function with LFUCache and calls it repeatedly with different arguments.

Output:

    Total time taken for calling the default function: 2.01556396484375
    I am executed with the function number: 2
    Total time taken for calling the default function: 3.00508451461792
    I am executed with the function number: 3
    Total time taken for calling the default function: 1.0156123638153076
    I am executed with the function number: 1
    I am executed with the function number: 2
    I am executed with the function number: 2
    Total time taken for calling the default function: 4.0026326179504395
    I am executed with the function number: 4
    I am executed with the function number: 1
    I am executed with the function number: 3
    I am executed with the function number: 3
    I am executed with the function number: 2

Explanation: In the output, a timing line appears only the first time each argument (2, 3, 1 and 4) is used. Every repeated call, including the later calls with 1, 3 and 2, is answered from the cache. With a maxsize of 5 and only four distinct arguments, the cache never fills up in this run, so nothing is discarded. If it did fill up, LFUCache would discard the result with the fewest accesses, and the next call with that argument would run the function again.
Conclusion

In this tutorial, we covered the Cachetools Module and five of the names it provides: the cached decorator and the TTLCache, RRCache, LRUCache and LFUCache cache classes. We saw how each of them works inside a Python program and how we can use them to cache results in the memory of the program.
