AVL Tree Advantages

What Is an AVL Tree?

The AVL tree is named after its inventors, Adelson-Velskii and Landis. It is also called a height-balanced binary tree. Each node of an AVL tree is in one of the following states:

- Left heavy: the longest path in its left subtree is longer than the longest path in its right subtree.
- Right heavy: the longest path in its right subtree is longer than the longest path in its left subtree.
- Balanced: the longest paths in its left and right subtrees have the same length.
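The three node states can be illustrated with a minimal Python sketch. It assumes a simple hypothetical `Node` class and measures height as the number of nodes on the longest downward path (an empty subtree has height 0); the names `Node`, `height`, and `classify` are illustrative only.

```python
from typing import Optional


class Node:
    """A binary tree node (hypothetical helper class for illustration)."""

    def __init__(self, key, left: "Optional[Node]" = None, right: "Optional[Node]" = None):
        self.key = key
        self.left = left
        self.right = right


def height(node: Optional[Node]) -> int:
    """Number of nodes on the longest path from `node` down to a leaf (0 for an empty tree)."""
    if node is None:
        return 0
    return 1 + max(height(node.left), height(node.right))


def classify(node: Node) -> str:
    """Label a node as left heavy, right heavy, or balanced by comparing subtree heights."""
    left_h, right_h = height(node.left), height(node.right)
    if left_h > right_h:
        return "left heavy"
    if right_h > left_h:
        return "right heavy"
    return "balanced"


# The root's left subtree (10 -> 5) is one level deeper than its right subtree (30).
root = Node(20, Node(10, Node(5)), Node(30))
print(classify(root))        # left heavy
print(classify(root.right))  # balanced
```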

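The AVL tree is a height-balanced binary search tree in which, at every node, the heights of the left and right subtrees differ by at most one. This height difference is called the balance factor, so an AVL tree can be defined as a binary search tree in which every node's balance factor is -1, 0, or +1. The balance factor of a node k is computed as follows, where h denotes the height of a subtree:

$$\mathrm{BF}(k) = h(\mathrm{left}(k)) - h(\mathrm{right}(k))$$

Balance factor properties:

- If BF(k) = +1, node k is left heavy: its left subtree is one level taller than its right subtree.
- If BF(k) = 0, node k is balanced: both subtrees have the same height.
- If BF(k) = -1, node k is right heavy: its right subtree is one level taller than its left subtree.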
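The definition can be turned into a check: a tree is an AVL tree if it is a binary search tree and every node's balance factor, left height minus right height, is -1, 0, or +1. The sketch below is one way to test both conditions in a single pass; it assumes distinct keys, and `Node`, `check_avl`, and `is_avl` are hypothetical names.

```python
from typing import Optional


class Node:
    """Binary tree node (hypothetical helper class for illustration)."""

    def __init__(self, key, left=None, right=None):
        self.key = key
        self.left = left
        self.right = right


def check_avl(node: Optional[Node], low=None, high=None) -> int:
    """Return the height of `node`'s subtree, or -1 if it violates the AVL property.

    Every key must lie strictly between `low` and `high` (BST ordering, distinct
    keys assumed) and every node's balance factor must be -1, 0, or +1.
    """
    if node is None:
        return 0
    if (low is not None and node.key <= low) or (high is not None and node.key >= high):
        return -1  # BST ordering violated
    left_h = check_avl(node.left, low, node.key)
    right_h = check_avl(node.right, node.key, high)
    if left_h < 0 or right_h < 0:
        return -1  # a violation was found deeper in the tree
    balance_factor = left_h - right_h
    if balance_factor not in (-1, 0, 1):
        return -1  # subtree heights differ by more than one
    return 1 + max(left_h, right_h)


def is_avl(root: Optional[Node]) -> bool:
    return check_avl(root) >= 0


balanced = Node(20, Node(10, Node(5)), Node(30))
skewed = Node(10, None, Node(20, None, Node(30)))  # a right-leaning chain
print(is_avl(balanced))  # True
print(is_avl(skewed))    # False: the root's balance factor is 0 - 2 = -2
```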

The AVL Tree's rotations:

If any node falls out of balance, rotations are performed to correct the imbalance. There are four kinds of rotation, each used in a specific situation and named after the path from the unbalanced node to the newly inserted node:

- LL Rotation: the new node is inserted into the left subtree of the unbalanced node's left child.
- RR Rotation: the new node is inserted into the right subtree of the unbalanced node's right child.
- LR Rotation: the new node is inserted into the right subtree of the unbalanced node's left child.
- RL Rotation: the new node is inserted into the left subtree of the unbalanced node's right child.

Binary Search Tree and AVL Tree:

An AVL tree is a kind of binary search tree with strong complexity guarantees: O(log n) search, insertion, and deletion. Structurally it is just a binary search tree; it adds rebalancing steps (and one additional field per node) to guarantee this complexity. A binary search tree is, in turn, a kind of binary tree: every node has at most two children, usually named left and right. In a binary search tree, every key in a node's left subtree is smaller than the node's key, and every key in its right subtree is larger (duplicate keys, if allowed, are placed on one side by convention). Consequently, all values in a node's left subtree are smaller than all values in its right subtree.

Importance of AVL Tree:

AVL trees are self-balancing binary search trees (BSTs). A normal BST may be skewed to one side, which makes searching for a key much more expensive: instead of O(log2 n), a search can take O(n) time in the worst case, the same effort as a sequential search. In that case the BST is no more efficient than a linked list. Self-balancing variants such as AVL trees solve this problem. The tree checks whether the heights of a node's left and right subtrees differ by more than one and, if so, rotates pivot nodes to the left, to the right, or both, which keeps the height difference at most 1.
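The four rotation cases can be sketched in Python as follows. This is a minimal illustration, not a reference implementation: the `Node` class, its stored `height` field (an empty subtree has height 0), and the helpers `rotate_left`, `rotate_right`, and `rebalance` are hypothetical names. Because the cases are named after the path from the unbalanced node to the inserted node, an LL imbalance is fixed by a single right rotation, while an LR imbalance needs a left rotation of the left child followed by a right rotation.

```python
class Node:
    """AVL node that stores its own height (hypothetical class for illustration)."""

    def __init__(self, key, left=None, right=None):
        self.key = key
        self.left = left
        self.right = right
        self.height = 1 + max(h(left), h(right))


def h(node):
    return node.height if node else 0


def update(node):
    node.height = 1 + max(h(node.left), h(node.right))


def balance_factor(node):
    return h(node.left) - h(node.right)


def rotate_right(y):
    """Single right rotation: y's left child x becomes the subtree root."""
    x = y.left
    y.left = x.right
    x.right = y
    update(y)  # y now sits below x, so recompute y first
    update(x)
    return x


def rotate_left(x):
    """Single left rotation: x's right child y becomes the subtree root."""
    y = x.right
    x.right = y.left
    y.left = x
    update(x)
    update(y)
    return y


def rebalance(node):
    """Apply the LL, RR, LR, or RL fix if `node` is unbalanced; return the new subtree root."""
    update(node)
    bf = balance_factor(node)
    if bf > 1:  # left subtree too tall
        if balance_factor(node.left) < 0:  # LR case: rotate the left child left first
            node.left = rotate_left(node.left)
        return rotate_right(node)  # LL case (or the second step of LR)
    if bf < -1:  # right subtree too tall
        if balance_factor(node.right) > 0:  # RL case: rotate the right child right first
            node.right = rotate_right(node.right)
        return rotate_left(node)  # RR case (or the second step of RL)
    return node


def inorder(node):
    return inorder(node.left) + [node.key] + inorder(node.right) if node else []


# Each three-node tree is unbalanced at its root (balance factor +2 or -2).
cases = {
    "LL": Node(30, Node(20, Node(10))),
    "RR": Node(10, None, Node(20, None, Node(30))),
    "LR": Node(30, Node(10, None, Node(20))),
    "RL": Node(10, None, Node(30, Node(20))),
}
for name, tree in cases.items():
    root = rebalance(tree)
    print(name, root.key, inorder(root), root.height)  # every case ends with root 20, height 2
```

In every case the in-order sequence stays 10, 20, 30, which is why rotations restore balance without breaking the binary search tree ordering.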
Every insertion is followed by rebalancing so that insertion stays at O(log n). The cost is a small amount of extra space, because every node now stores an additional height (or balance factor) field. In return, data can be retrieved quickly and the structure remains practical to implement, with operations usually running in O(log2 n) time.

A plain BST can also be used for searching, but if the elements are inserted in sorted order, the BST degenerates into a chain and searching takes O(n) time. The AVL tree was introduced to overcome this problem. In both the average and the worst case, lookup, insertion, and deletion take O(log n) time, where n is the number of nodes in the tree before the operation. After an insertion or deletion, the tree may need one or more rotations to restore balance.

Red-black trees and AVL trees support the same set of operations, and both perform them in O(log n) time, which is why they are frequently compared. Because AVL trees are more strictly balanced than red-black trees, they tend to be faster for lookup-intensive applications. Thanks to the balance factor, an AVL tree never degenerates into a chain: its height stays below roughly 1.44 log2 n, so searching, insertion, and deletion take O(log n) time in both the worst and the average case.

Example: Consider the following trees.

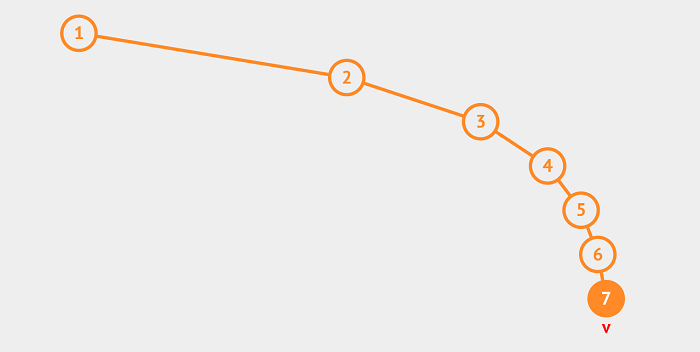

Figure 1: BST

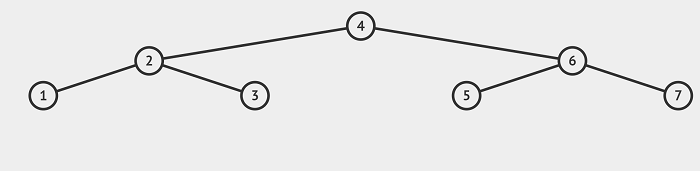

Figure 2: AVL Tree

To insert a node with key Q into the BST of Figure 1, the algorithm needs 7 comparisons, but inserting the same key into the AVL tree of Figure 2 needs only 3. Searching and deletion likewise take more comparisons in the BST than in the AVL tree.
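The figures are not reproduced here, so the following minimal sketch uses its own hypothetical input (the keys 1 to 15 inserted in sorted order) rather than the trees of Figures 1 and 2. It inserts the same keys into a plain BST and into an AVL tree, then counts the key comparisons needed to find the insertion point for one more key. All names (`Node`, `avl_insert`, `bst_insert`, `comparisons_to_insert`) are illustrative, and a three-way key check counts as one comparison.

```python
from typing import Optional


class Node:
    def __init__(self, key):
        self.key = key
        self.left: Optional["Node"] = None
        self.right: Optional["Node"] = None
        self.height = 1  # maintained only by avl_insert


def h(node):
    return node.height if node else 0


def update(node):
    node.height = 1 + max(h(node.left), h(node.right))


def rotate_right(y):
    x = y.left
    y.left = x.right
    x.right = y
    update(y)
    update(x)
    return x


def rotate_left(x):
    y = x.right
    x.right = y.left
    y.left = x
    update(x)
    update(y)
    return y


def avl_insert(node, key):
    """AVL insertion: ordinary BST insertion, then rebalancing on the way back up."""
    if node is None:
        return Node(key)
    if key < node.key:
        node.left = avl_insert(node.left, key)
    else:
        node.right = avl_insert(node.right, key)
    update(node)
    bf = h(node.left) - h(node.right)
    if bf > 1:  # left side too tall
        if h(node.left.left) < h(node.left.right):  # LR case
            node.left = rotate_left(node.left)
        return rotate_right(node)  # LL case
    if bf < -1:  # right side too tall
        if h(node.right.right) < h(node.right.left):  # RL case
            node.right = rotate_right(node.right)
        return rotate_left(node)  # RR case
    return node


def bst_insert(root, key):
    """Plain BST insertion with no rebalancing (iterative, so long chains are safe)."""
    new = Node(key)
    if root is None:
        return new
    cur = root
    while True:
        if key < cur.key:
            if cur.left is None:
                cur.left = new
                return root
            cur = cur.left
        else:
            if cur.right is None:
                cur.right = new
                return root
            cur = cur.right


def comparisons_to_insert(root, key):
    """Key comparisons made while walking down to the insertion point of `key`."""
    count, cur = 0, root
    while cur is not None:
        count += 1
        cur = cur.left if key < cur.key else cur.right
    return count


bst = avl = None
for key in range(1, 16):  # 15 keys arriving in sorted order
    bst = bst_insert(bst, key)
    avl = avl_insert(avl, key)

print(comparisons_to_insert(bst, 16))  # 15: the plain BST has degenerated into a chain
print(comparisons_to_insert(avl, 16))  # 4: the AVL tree is only 4 levels deep
```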

When the keys are inserted in increasing or decreasing order, the height of a BST is O(n), so every operation takes O(n) time in the worst case. The AVL tree rebalances itself so that its height is always O(log n); by preventing skew, it guarantees an upper bound of O(log n) for insertion, deletion, and search.

Time Complexity (Worst Case):

| Operation | BST | AVL Tree |
|---|---|---|
| Search | O(n) | O(log n) |
| Insertion | O(n) | O(log n) |
| Deletion | O(n) | O(log n) |
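A standard counting argument shows why an AVL tree's height stays logarithmic. The sparsest AVL tree of height h consists of a root, one subtree of height h-1, and one of height h-2 (the balance factor forbids a larger gap), so the minimum node count obeys N(h) = 1 + N(h-1) + N(h-2), which grows like the Fibonacci numbers. The sketch below, with hypothetical function names and height counted in nodes, inverts that recurrence to find the tallest AVL tree possible for n nodes.

```python
def min_avl_nodes(h: int) -> int:
    """Fewest nodes an AVL tree of height h can have (height counted in nodes)."""
    if h <= 0:
        return 0
    prev, cur = 0, 1  # N(0), N(1)
    for _ in range(h - 1):
        # Sparsest tree: a root plus sparsest subtrees of heights h-1 and h-2.
        prev, cur = cur, 1 + cur + prev
    return cur


def max_avl_height(n: int) -> int:
    """Greatest height any AVL tree with n nodes can have."""
    h = 0
    while min_avl_nodes(h + 1) <= n:
        h += 1
    return h


print([min_avl_nodes(h) for h in range(1, 8)])  # [1, 2, 4, 7, 12, 20, 33]
for n in (1_000, 1_000_000):
    # A skewed BST with n nodes can be n levels tall; an AVL tree cannot come close.
    print(n, max_avl_height(n))  # prints 1000 14, then 1000000 28
```

So a 1,000,000-node AVL tree is at most 28 levels tall, whereas a BST built from the same keys in sorted order would be 1,000,000 levels tall.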

AVL Tree Advantages:

AVL Tree Applications:
