Time Complexity in Data Structure

Introduction:
Time complexity is a central concept in computer science and plays a vital role in the design and analysis of efficient algorithms and data structures. It lets us estimate how the time an algorithm or data structure operation takes to execute grows, which is essential for understanding its efficiency and scalability.

What is Time Complexity:
Time complexity measures how many operations an algorithm performs in relation to the size of its input. It helps us analyze how the algorithm's performance scales as the input grows. Big O notation, written O(), is the notation most frequently used to express time complexity. It gives an upper bound on how quickly an algorithm's execution time grows.

Best, Worst, and Average Case Complexity:
In analyzing algorithms, we consider three types of time complexity:

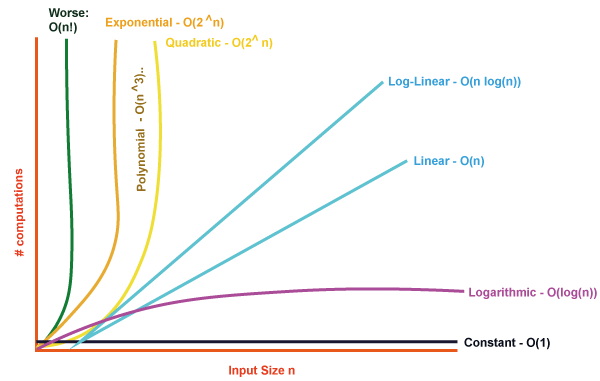
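The figure's contents are not reproduced in the text, so as a hedged illustration of the three cases, here is a minimal Python sketch based on linear search. The function `linear_search` and the sample list are assumptions made for this example, not part of the original article. The function returns the number of comparisons it made so the cases can be compared directly.

```python
def linear_search(items, target):
    """Return (index, comparisons); index is -1 when target is absent."""
    comparisons = 0
    for index, value in enumerate(items):
        comparisons += 1
        if value == target:
            return index, comparisons
    return -1, comparisons


data = [7, 3, 9, 4, 1]

# Best case: the target is the first element, so one comparison suffices.
best = linear_search(data, 7)

# Worst case: the target is absent, so all n elements are compared.
worst = linear_search(data, 8)

# Average case over successful searches: (1 + 2 + ... + n) / n = (n + 1) / 2.
average = sum(linear_search(data, x)[1] for x in data) / len(data)

print(best, worst, average)
```

For this five-element list the best case costs 1 comparison, the worst case 5, and the average successful search 3, which matches (n + 1) / 2 for n = 5.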

Big O Notation:
Time complexity is most often expressed using Big O notation, which gives an upper bound on how an algorithm's running time grows with the size of the input. Let's go through the most common classes:

a) O(1) - Constant Time Complexity:
An algorithm has constant time complexity if it takes the same amount of time to execute no matter how large the input is. This is the most favorable growth rate, because the cost does not depend on the input size at all. Examples of constant-time operations include accessing an element of an array by index or performing simple arithmetic. As only one operation is required to return the first element of the array, the getFirstElement function has an O(1) time complexity.

b) O(log n) - Logarithmic Time Complexity:
With logarithmic time complexity, the execution time grows logarithmically as the input size increases. Algorithms with this complexity typically halve the problem at each step, as in efficient searching. The best-known example is binary search on sorted data. The binarySearch function has a time complexity of O(log n) because it repeatedly halves the search space until it finds the target element or determines its absence.

c) O(n) - Linear Time Complexity:
With linear time complexity, the running time grows in direct proportion to the size of the input. It commonly appears when traversing a data structure or performing an action on each element, for example when scanning an array or linked list to find a specific element. The printArray function has a time complexity of O(n) because it iterates over each element in the array to print its value.

d) O(n^2) - Quadratic Time Complexity:
O(n^2) denotes quadratic time complexity, in which an algorithm's execution time grows with the square of the input size, so doubling the input roughly quadruples the running time. This type of time complexity is often observed in algorithms that use nested iterations or compare every element with every other element.
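The article names getFirstElement, binarySearch, printArray, and printPairs, but their listings are not present in the text. The following is a minimal Python sketch of what such functions might look like, written in snake_case; the bodies are assumptions for illustration, not the original code.

```python
def get_first_element(arr):
    """O(1): a single index operation, regardless of len(arr)."""
    return arr[0]


def binary_search(sorted_arr, target):
    """O(log n): halve the search range each iteration (input must be sorted)."""
    low, high = 0, len(sorted_arr) - 1
    while low <= high:
        mid = (low + high) // 2
        if sorted_arr[mid] == target:
            return mid
        if sorted_arr[mid] < target:
            low = mid + 1   # target can only be in the upper half
        else:
            high = mid - 1  # target can only be in the lower half
    return -1  # target is absent


def print_array(arr):
    """O(n): visit each element exactly once."""
    for value in arr:
        print(value)


def print_pairs(arr):
    """O(n^2): the inner loop runs n times for each of the n outer steps."""
    for first in arr:
        for second in arr:
            print(first, second)


numbers = [1, 3, 5, 7, 9]
print(get_first_element(numbers))
print(binary_search(numbers, 7))
print_array(numbers)
print_pairs(numbers[:2])
```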
The printPairs function has a time complexity of O(n^2) because it performs nested iterations over the array, so the number of printed pairs grows with the square of the input size.

e) O(2^n) - Exponential Time Complexity:
Exponential time complexity means the algorithm's execution time roughly doubles with each additional element in the input, which makes it impractical for all but small inputs. This type of time complexity is often observed in algorithms that perform an exhaustive search or generate all possible combinations. The Fibonacci function has a time complexity of O(2^n) when it computes each term by naive recursion, because every call makes two further recursive calls and the same subproblems are recomputed many times.

f) O(n!) - Factorial Time Complexity:
An algorithm has factorial time complexity when its runtime grows with the factorial of the input size, which is faster than exponential growth. This kind of time complexity is frequently seen in algorithms that generate every permutation of a set of elements. The permute function has a time complexity of O(n!) because it generates all possible permutations of a given string, and a string of n distinct characters has n! permutations.

How to Calculate Time Complexity?
To determine an algorithm's time complexity, we analyze how its running time grows as the input size grows. The result is an estimate of how the algorithm performs on larger inputs, not an exact running time. Here are the general steps to calculate time complexity:
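One hedged way to check a growth rate in practice is to count the work an algorithm does for several input sizes. The sketch below assumes what the Fibonacci and permute functions mentioned above might look like in Python; the `counter` parameter is a hypothetical addition used only to tally calls, and neither listing is taken from the original article.

```python
from math import factorial


def fibonacci(n, counter):
    """Naive recursive Fibonacci; counter[0] tallies every call made."""
    counter[0] += 1
    if n < 2:
        return n
    return fibonacci(n - 1, counter) + fibonacci(n - 2, counter)


def permute(text):
    """Return all permutations of text (n! results for n distinct characters)."""
    if len(text) <= 1:
        return [text]
    results = []
    for i, ch in enumerate(text):
        # Fix one character, then permute the remaining ones.
        for rest in permute(text[:i] + text[i + 1:]):
            results.append(ch + rest)
    return results


# Call counts for naive Fibonacci grow exponentially with n.
for n in (5, 10, 15, 20):
    calls = [0]
    fibonacci(n, calls)
    print(n, calls[0])

# The number of permutations matches n! for strings of distinct characters.
for word in ("ab", "abc", "abcd"):
    print(word, len(permute(word)), factorial(len(word)))
```

Going from n = 5 to n = 10 raises the Fibonacci call count from 15 to 177, and each extra character in the string multiplies the number of permutations by the new length, which is the behavior the O(2^n) and O(n!) bounds describe.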

Time Complexity of Different Data Structures:
Here are the time complexities associated with common data structures:

Arrays:

Linked Lists:

Doubly Linked List:

Stacks:

Queues:

Hash Tables:

Binary Search Trees (BSTs):

AVL Tree:

B-Tree:

Red-Black Tree:

Analyzing Algorithms with Time Complexity:
Choosing the best algorithm and data structure for a specific task requires an understanding of time complexity. By looking at time complexity, we can estimate the scalability and performance of our algorithms and make informed decisions during algorithm design and optimization. For example, suppose we have a large dataset in which we frequently need to search for specific items. In such cases, a balanced Binary Search Tree (BST) provides efficient search operations with a time complexity of O(log n); an unbalanced BST can degrade to O(n) in the worst case. However, if the dataset requires frequent insertions and deletions at the beginning, a linked list, which supports them in O(1), may be a better choice. An array needs O(n) time for the same operations, because the remaining elements must be shifted.

Conclusion:
Understanding time complexity in data structures is crucial for analyzing and evaluating the efficiency and performance of algorithms. Time complexity describes how an algorithm's running time grows as the size of the input increases. It provides valuable insight into how an algorithm scales and helps in making informed decisions about algorithm selection and optimization.
