Cache Memory Definition

What is Cache?

A cache (pronounced "cash") is a hardware or software component that temporarily stores data in a computing environment. It is a small amount of faster, more expensive memory used to improve the performance of recently or frequently accessed data. Cached data is held temporarily on a local storage medium that is accessible to the cache client and separate from main storage. CPUs, applications, web browsers, and operating systems all commonly use caches. A cache is used because bulk or main storage cannot keep up with the demands of clients. Caching shortens data access times, reduces latency, and improves input/output (I/O) performance. Because almost all application workloads depend on I/O operations, caching improves application performance.

Advantages

Caching has a number of advantages, including the following:

Disadvantages

Caches also have drawbacks, such as the following:

How to Use Caches?

Caches store temporary data using both hardware and software components. A CPU cache is an example of a hardware cache: a small block of memory on the computer's CPU that holds frequently or recently used basic computer instructions. Many applications also maintain their own caches, which temporarily store app-related data, files, or instructions for quick retrieval. Web browsers are a good example of application caching. Each browser has a cache that stores data from previous browsing sessions for use in later ones. A user who wants to rewatch a YouTube video, for example, may be able to load it more quickly because the browser retrieves it from the cache, where it was saved during the previous session. The following categories of software also make use of caches:

Caching Types

Although caching is a general concept, a few types stand out. Developers who want to understand the most popular caching strategies need to know them.

1. Caching in Memory

With this approach, cached data is kept in RAM, which is faster than the traditional storage system that holds the original data. This form of caching is most often implemented with key-value databases, which can be thought of as collections of key-value pairs. The key is a unique value, and the cached data is the value. In other words, each item of data is identified by a unique key, and the key-value database returns the associated value when that key is supplied. This method is fast, efficient, and easy to understand, which is why developers building a cache layer often choose it.

2. Database Caching

Most databases include some form of caching. In particular, an internal cache is often used to avoid querying the database excessively. By caching the results of the most recent queries, the database can return previously cached data immediately, and it can avoid executing those queries again for as long as the cached data remains valid. Each database may implement this differently, but the most common approach is a hash table that stores key-value pairs, with the key used to look up the value as described above. Note that object-relational mapping (ORM) tools often include this form of caching by default.

3. Web Caching

This type falls into two further subcategories:

The first kind is kept on the client and is familiar to most Internet users. It is also known as web browser caching, since it usually comes built into browsers. It works in a straightforward way. When a browser loads a web page for the first time, it saves the page's resources, including text, images, stylesheets, scripts, and media files. When the same page is requested again, the browser can look in its cache for the previously stored resources and read them from the user's computer. In most cases, this is far quicker than downloading them over the network.
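The browser behaviour described above can be sketched as a small key-value cache keyed by URL. This is only an illustration, not how any particular browser is implemented: the `UrlCache` class, the `fetch` callback, and the fixed `max_age` lifetime are all assumptions introduced here, and real browsers decide freshness from HTTP caching headers rather than a single constant.

```python
import time
from typing import Callable, Dict, Tuple


class UrlCache:
    """Toy browser-style cache mapping a URL to (expiry time, resource bytes)."""

    def __init__(self, fetch: Callable[[str], bytes], max_age: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch        # hypothetical function that downloads a URL
        self._max_age = max_age    # assumed lifetime of a cached copy, in seconds
        self._clock = clock        # injectable clock, which makes testing easy
        self._entries: Dict[str, Tuple[float, bytes]] = {}

    def get(self, url: str) -> bytes:
        now = self._clock()
        entry = self._entries.get(url)
        if entry is not None and entry[0] > now:
            return entry[1]        # cached copy is still valid: no network access
        body = self._fetch(url)    # missing or expired: download it again
        self._entries[url] = (now + self._max_age, body)
        return body
```

The first `get` for a URL calls `fetch`; later calls within `max_age` seconds are served from the local dictionary, which mirrors the faster second page load described above.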

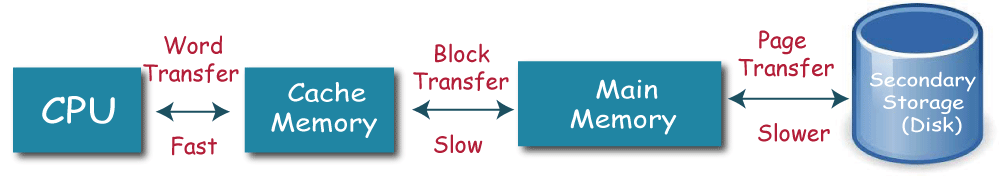

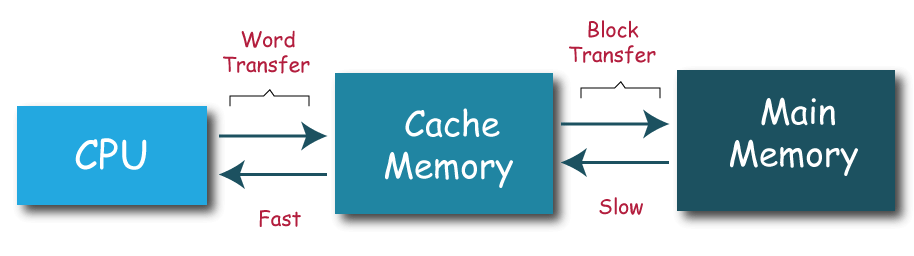

The second kind, web server caching, stores resources on the server side for later reuse. This approach is particularly beneficial for dynamically generated content, which takes time to produce. It offers little benefit for static content. Web server caching reduces the work servers must do and speeds up page delivery by keeping servers from being overloaded.

4. CDN Cache

A Content Delivery Network (CDN) caches content such as web pages, style sheets, and media files on proxy servers. It acts as a system of gateways between the user and the origin server, storing the origin's resources. When a user requests a resource, a proxy server intercepts the request and checks whether it has a copy. If so, the user receives the resource immediately; if not, the request is forwarded to the origin server. These proxy servers are distributed around the globe, and user requests are dynamically routed to the nearest one. Because the proxies are therefore usually closer to end users than the origin servers are, network latency should decrease. The number of requests reaching the origin servers is also reduced.

Working

The data in a cache is usually stored on fast-access hardware such as RAM (random access memory), and it may also be used together with a software component. A cache's primary purpose is to speed up data retrieval by reducing the need to access the slower storage layer behind it. Unlike data archives, whose data is usually complete and durable, a cache typically holds only a subset of the data for a short time, trading capacity for speed. When the cache client needs data, it checks the cache first. If the data is found there, the result is a cache hit. The cache hit rate (or hit ratio) is the percentage of lookups that result in a cache hit. If the data is not found in the cache, the result is a cache miss: the data is fetched from main memory and copied into the cache.
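The hit, miss, and hit-ratio terms above can be made concrete with a minimal sketch. The `ReadThroughCache` class and the dictionary standing in for the slower storage layer are illustrative assumptions, not part of any specific product.

```python
from typing import Callable, Dict


class ReadThroughCache:
    """Checks the cache first and falls back to a slower loader on a miss."""

    def __init__(self, load: Callable[[str], str]) -> None:
        self._load = load                 # stand-in for the slower storage layer
        self._data: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> str:
        if key in self._data:
            self.hits += 1                # cache hit: served from the cache
            return self._data[key]
        self.misses += 1                  # cache miss: go to the slower layer
        value = self._load(key)
        self._data[key] = value           # keep a copy for the next lookup
        return value

    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


slow_store = {"a": "alpha", "b": "beta"}
cache = ReadThroughCache(slow_store.__getitem__)
for key in ["a", "b", "a", "a"]:
    cache.get(key)
print(f"hit ratio: {cache.hit_ratio():.0%}")  # 2 hits out of 4 lookups
```

The first lookups of `a` and `b` are misses and the two repeated lookups of `a` are hits, so half of the lookups are served from the cache.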
How this is done, and which data is removed from the cache to make room for new data, depends on the caching algorithm, cache protocols, and system policies in use. Different write policies govern how the cache operates.

Write-around policies write data directly to storage, bypassing the cache. This keeps the cache from being flooded during periods of heavy write I/O. The disadvantage is that data is not cached until it is read from storage, so that first read is slower.

Write-through policies write data to both the cache and storage. The advantage is that newly written data is always cached, so it can be read quickly. The drawback is that a write operation is not considered complete until the data has been written to both the cache and primary storage, which can add latency to writes.

Write-back policies resemble write-through in that all writes are directed to the cache. However, a write-back cache considers the write operation complete as soon as the data has been cached. The data is then copied from the cache to storage later.

Let's now examine how a hardware cache operates. Hardware implements a cache as a block of memory that temporarily stores data likely to be needed again. CPUs, SSDs, and HDDs typically include a hardware-based cache, while web browsers and web servers typically rely on software-based caching. A cache is made up of a collection of entries. Each entry holds data that is a copy of the same data in a backing store. Each entry also has a tag that identifies the data in the backing store of which it is a copy. Tagging allows several cache-oriented algorithms to operate concurrently at different levels without interfering with one another.
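The three write policies described above differ mainly in where a write lands and when it counts as complete. The sketch below compares them using plain dictionaries for the cache and the storage; the `WritePolicyCache` class, its `flush` method, and the `dirty` set are names assumed for illustration only.

```python
from typing import Dict, Set


class WritePolicyCache:
    """Toy cache in front of a dict-based 'storage' to compare write policies."""

    POLICIES = {"write-around", "write-through", "write-back"}

    def __init__(self, policy: str) -> None:
        if policy not in self.POLICIES:
            raise ValueError(f"unknown policy: {policy}")
        self.policy = policy
        self.cache: Dict[str, str] = {}
        self.storage: Dict[str, str] = {}
        self.dirty: Set[str] = set()       # keys cached but not yet in storage

    def write(self, key: str, value: str) -> None:
        if self.policy == "write-around":
            self.storage[key] = value      # bypass the cache entirely
            self.cache.pop(key, None)      # drop any stale cached copy
        elif self.policy == "write-through":
            self.cache[key] = value        # done only after both are updated
            self.storage[key] = value
        else:                              # write-back
            self.cache[key] = value        # done as soon as the cache is updated
            self.dirty.add(key)

    def flush(self) -> None:
        """Copy pending write-back data from the cache to storage."""
        for key in self.dirty:
            self.storage[key] = self.cache[key]
        self.dirty.clear()

    def read(self, key: str) -> str:
        if key not in self.cache:          # miss: load from storage, then cache
            self.cache[key] = self.storage[key]
        return self.cache[key]
```

With `write-around`, a key is absent from `cache` until the first `read`; with `write-back`, it is absent from `storage` until `flush` runs, which is the window in which a real system could lose data if the cache failed.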
The cache client (a CPU, web browser, or operating system) always checks the cache before trying to retrieve data that resides in the backing store. If an entry with a tag matching the desired data is found, the data in that entry is used instead. This is known as a cache hit. A web browser, for instance, may check its local cache on disk to see whether it has a local copy of the content of a web page at a particular URL. In this case, the URL is the tag and the content of the web page is the data. The percentage of cache accesses that result in cache hits is the hit rate or hit ratio of the cache.

When the cache is checked and no entry with the desired tag is found, the result is a cache miss. The data must then be fetched from the backing store, which is a more expensive access. Once retrieved, the data is usually copied into the cache so that it is ready for the next access. When the cache is full, an existing entry is evicted after a cache miss to make room for the newly retrieved data. The heuristic used to choose which entry to evict is called the replacement policy. The "least recently used" (LRU) replacement policy evicts the entry that was accessed least recently. Efficient caching algorithms weigh how often entries are hit against the size of the cached content and the latency and resource use of both the cache and the backing store.

Conclusion

In this article, we examined what caching is and why it has become increasingly important in computer science. At the same time, the risks associated with caching should not be underestimated. Implementing a well-designed caching system is not a simple task; it takes time and experience. That is why understanding the most important types of cache is essential to designing the right system.
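As a closing illustration, the least recently used (LRU) replacement policy described above can be sketched with Python's standard `collections.OrderedDict`. The `LRUCache` class and its `get` and `put` methods are assumptions made for this example; it is one simple way to implement the policy, not a definitive one.

```python
from collections import OrderedDict
from typing import Any, Hashable, Optional


class LRUCache:
    """Fixed-capacity cache that evicts the least recently accessed entry."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        # Entries are ordered from least recently used to most recently used.
        self._entries: "OrderedDict[Hashable, Any]" = OrderedDict()

    def get(self, key: Hashable) -> Optional[Any]:
        if key not in self._entries:
            return None                        # cache miss
        self._entries.move_to_end(key)         # mark as most recently used
        return self._entries[key]

    def put(self, key: Hashable, value: Any) -> None:
        if key in self._entries:
            self._entries.move_to_end(key)
        self._entries[key] = value
        if len(self._entries) > self._capacity:
            self._entries.popitem(last=False)  # evict the least recently used


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")       # "a" is now the most recently used entry
cache.put("c", 3)    # cache is full, so "b" is evicted
```

For caching the results of function calls, Python's standard library also provides `functools.lru_cache`, which applies the same policy as a decorator.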
