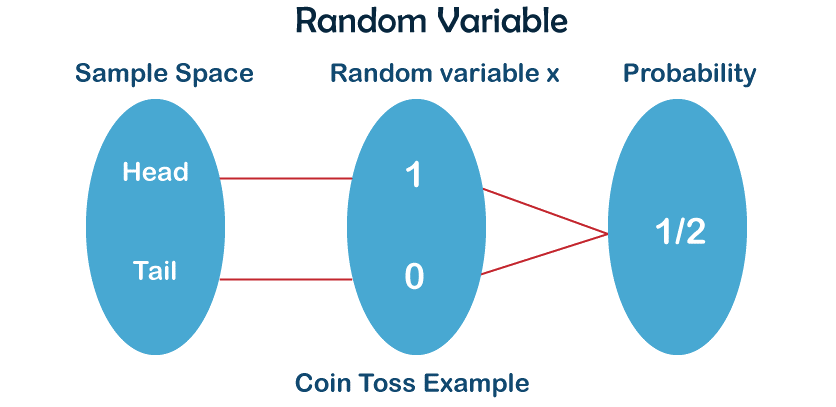

Random Variable Definition

Introduction to Random Variables

A random variable is a mathematical function that assigns a numerical value to every possible outcome of a random experiment. It is called a random variable because its value depends on the outcome of that experiment. Random variables are usually denoted by capital letters, such as X, Y, or Z.

For example, consider flipping a coin. If the coin lands heads, we assign the value 1 to the random variable X, and if it lands tails, we assign the value 0. X is therefore a random variable that takes one of two possible values, 0 or 1, depending on the outcome of the flip. We can describe the possible values of X with a probability distribution, which gives the probability of each value. For a fair coin, the distribution is P(X = 1) = 1/2 and P(X = 0) = 1/2.

In general, a probability distribution for a random variable specifies the probability of each possible value of the variable.
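As a minimal sketch of the coin-flip example above, the following Python snippet treats the random variable X as an ordinary function from outcomes to numbers and estimates its distribution by simulation. The names `SAMPLE_SPACE`, `X`, and `estimate_distribution`, the fair-coin assumption, and the fixed seed are illustrative choices rather than part of the original example.

```python
import random
from collections import Counter

# Sample space of a single coin flip (assumed fair for this sketch).
SAMPLE_SPACE = ["heads", "tails"]


def X(outcome):
    """Random variable: map each outcome to a number (heads -> 1, tails -> 0)."""
    return 1 if outcome == "heads" else 0


def estimate_distribution(num_flips, seed=0):
    """Estimate P(X = 0) and P(X = 1) as relative frequencies of simulated flips."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    counts = Counter(X(rng.choice(SAMPLE_SPACE)) for _ in range(num_flips))
    return {value: counts[value] / num_flips for value in (0, 1)}


if __name__ == "__main__":
    print(estimate_distribution(100_000))
```

With a large number of flips, both estimated probabilities should land close to 1/2, and they always add up to 1 because every flip maps to exactly one value of X.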

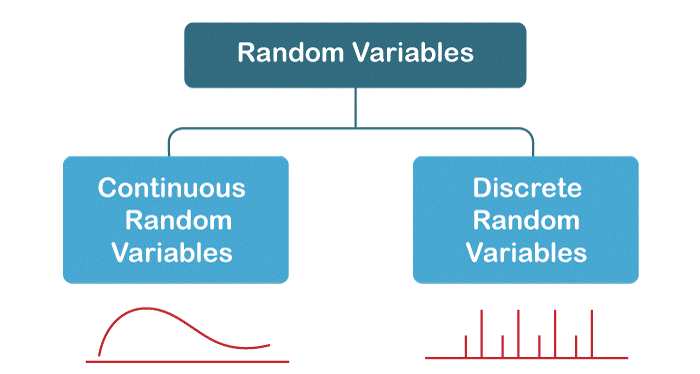

Definition of Random Variable

A random variable is a mathematical function that maps each outcome of a random experiment to a numerical value. More specifically, a random variable X is a function that maps each outcome in the sample space S to a real number. That is, X: S → R, where R denotes the set of real numbers. The set of values that X can take is called the range of X.

History

Random variables have a long history in mathematics and statistics, dating back to the 17th century. The concept of probability, which forms the foundation for random variables, was first studied by mathematicians such as Blaise Pascal and Pierre de Fermat. In the 18th and 19th centuries, mathematicians such as Laplace and Gauss further developed the theory of probability and worked with continuous probability distributions.

However, it was not until the 20th century that the concept of a random variable was formally defined and used in modern statistics. Set theory, developed by Georg Cantor in the late 19th century, supplied the language for describing a sample space, which is the set of all possible outcomes of a random experiment. Building on it, Andrey Kolmogorov's 1933 axiomatization of probability gave the random variable its modern formal definition as a function on the sample space. Around the turn of the 20th century, the statistician Karl Pearson developed families of continuous distributions described by probability density functions. Later, other mathematicians and statisticians such as Ronald Fisher, Jerzy Neyman, and Abraham Wald further developed the theory of random variables and their applications in statistics.

It is not settled who first introduced the concept of a random variable, but many historians and mathematicians credit Pafnuty Chebyshev, a Russian mathematician also known as the "founding father of Russian mathematics", as the first person "to think systematically in terms of random variables".

Today, random variables are a fundamental concept in probability theory and statistics and are used in a wide range of fields, including finance, engineering, physics, and biology. The study of random variables remains an active area of research in mathematics and statistics, with new applications and extensions of the theory still being developed.

Types of a Random Variable

There are two main types of random variables:

1. Discrete Random Variables: Discrete random variables take on a finite or countably infinite set of possible values. In other words, the range of a discrete random variable is a discrete set of numbers. For example, the number of heads obtained when flipping a coin five times is a discrete random variable that can take on the values 0, 1, 2, 3, 4, or 5. The probability distribution for a discrete random variable is called a probability mass function (PMF). The PMF gives the probability that the random variable takes on a particular value. For example, consider the random variable X that represents the number of heads obtained when flipping a fair coin three times. The PMF for X is: P(X = 0) = 1/8, P(X = 1) = 3/8, P(X = 2) = 3/8, P(X = 3) = 1/8.

   Note that the probabilities in the PMF must sum to 1, since the random variable must take on one of its possible values.

2. Continuous Random Variables: Continuous random variables can take on any value in a continuous range of possible values. In other words, the range of a continuous random variable is an uncountable set of numbers. For example, the height of a person can be modelled as a continuous random variable that can take on any positive real value. The probability distribution for a continuous random variable is described by a probability density function (PDF). Unlike the PMF, the PDF does not give the probability that the random variable takes on a particular value (for a continuous random variable, that probability is zero), but rather the probability density at each point in the range of possible values. The probability density can be thought of as the height of a curve at a particular point on the x-axis. The probability that the variable falls in an interval is the area under the curve over that interval, and the total area under the curve must equal 1.

Parts of Random Variables

Random variables can be classified in different ways, depending on the properties that we are interested in. Some common classifications of random variables are:
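Returning to the two types of random variable described above, the sketch below computes the PMF for the number of heads in three fair coin flips by enumerating the sample space, and then approximates areas under a PDF numerically. The function names, the choice of the exponential density f(x) = e^(-x) as the continuous example, and the midpoint rule used for the area are all assumptions made for illustration. None of them come from the text.

```python
from fractions import Fraction
from itertools import product
from math import exp


def pmf_heads(num_flips):
    """PMF of the number of heads in `num_flips` fair coin flips, by enumeration."""
    outcomes = list(product("HT", repeat=num_flips))  # all outcomes equally likely
    pmf = {k: Fraction(0) for k in range(num_flips + 1)}
    for outcome in outcomes:
        pmf[outcome.count("H")] += Fraction(1, len(outcomes))
    return pmf


def exponential_pdf(x):
    """Density f(x) = e^(-x) for x >= 0 (an illustrative choice of PDF)."""
    return exp(-x) if x >= 0 else 0.0


def area_under(pdf, a, b, steps=100_000):
    """Approximate the area under `pdf` between a and b with the midpoint rule."""
    width = (b - a) / steps
    return sum(pdf(a + (i + 0.5) * width) for i in range(steps)) * width


if __name__ == "__main__":
    pmf = pmf_heads(3)
    print({k: str(p) for k, p in pmf.items()})
    print("PMF total:", sum(pmf.values()))
    # For a continuous variable, probabilities come from areas, not point values.
    print("P(1 <= X <= 2):", area_under(exponential_pdf, 1.0, 2.0))
    print("Area on [0, 50]:", area_under(exponential_pdf, 0.0, 50.0))
```

The enumeration gives probabilities 1/8, 3/8, 3/8, and 1/8 for 0, 1, 2, and 3 heads, which sum to exactly 1. For the density, the area over [0, 50] comes out very close to 1, which is the continuous counterpart of that same requirement.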

Properties of Random Variable

Random variables have several important properties that help us understand and analyze their behaviour. Here are some of the most important properties of random variables:

Applications of Random Variables: An Overview

Random variables are mathematical constructs used to model the outcomes of random events. They play a fundamental role in probability theory and statistics and have a wide range of applications across different fields. This section gives an overview of some of the key applications of random variables.

Overall, random variables have numerous applications across different fields, from probability theory and statistics to economics, engineering, and physics. Understanding the properties and behaviour of random variables is essential for modelling and analyzing uncertain events and systems.
