Computer Monitor - Definition

A computer monitor is an output device that displays information as text or images. A discrete monitor includes a visual display, support circuitry, a power supply, a housing, and an external user control panel. Most displays used CRTs until the mid-2000s; as of the 2010s, LCDs with LED backlights have largely supplanted LCDs with CCFL backlights. Monitors connect to computers through various connectors and signaling standards, including DisplayPort, HDMI, USB-C, DVI, and VGA.

Initially, television sets were used for video and computer displays for data. Since the 1980s, computers (and their displays) have been used for both data processing and video playback, and TVs have gained some computer capabilities. In the 2000s, the standard aspect ratio of both televisions and computer displays shifted from 4:3 to 16:9. Most modern televisions and computer displays can be used interchangeably. However, most computer monitors lack built-in speakers, TV tuners, and remote controls, so external devices such as a DTA box may be needed to use one as a TV.

Inventor of the Computer Monitor

In 1897, Karl Ferdinand Braun created the cathode-ray tube, the technology behind the first computer monitors. Braun was a German physicist, born on June 6, 1850. He received the Nobel Prize in Physics and made substantial contributions to technology, notably radio and television.

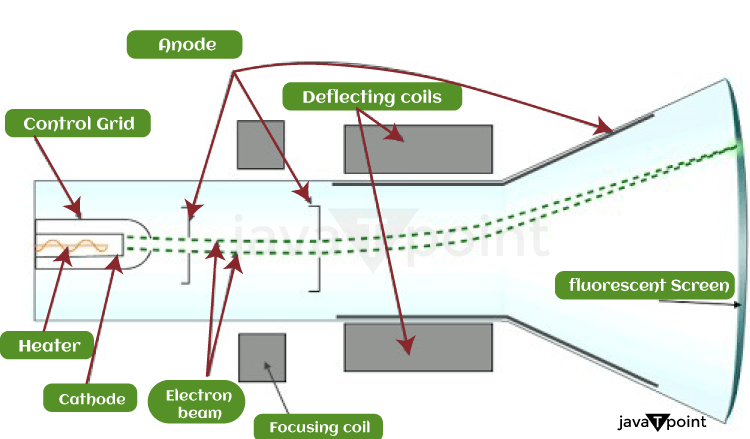

After its inventor, the CRT is sometimes called the "Braun tube." Braun's tube aimed a cathode-ray (electron) beam at a fluorescent screen and was first used as an oscilloscope. A cathode-ray tube is a vacuum tube with phosphors coating one end. When electrons strike the phosphors, they produce light. Early CRT displays drew crude vector graphics rather than full images. They were used to show the graphics and text needed to handle information.

History Of Computer Monitor

In 1951, UNIVAC computer operators had no display to show them what was happening inside the computer. Instead, they relied on the flashing lights of the control panel to tell them what the machine was doing. Today, the complexity of computing tasks requires displays through which humans interact with their computers. However, compared to today's large, colorful flat panels, the early commercial monitors were highly rudimentary. These monitors began to appear around 1960.

I. Early Paper-Based Computing

Computers use monitors as output devices to display information. In the early days of computing, people communicated with computers on paper, so monitors were less critical. Users wrote instructions on cards with punch card machines, and the computer then read those cards. The computer processed the instructions and punched its results onto more cards or paper tape for humans to decode.

II. Early Screens

The earliest monitor panels were rows of blinking indicator lights. These lights turned on and off in response to inputs or while memory locations were being accessed. Input and output were also performed with Hollerith punched cards. Programs were written on typewriter-like devices that encoded the instructions as holes punched in the paper cards. Long reels of paper tape sometimes replaced punched cards.

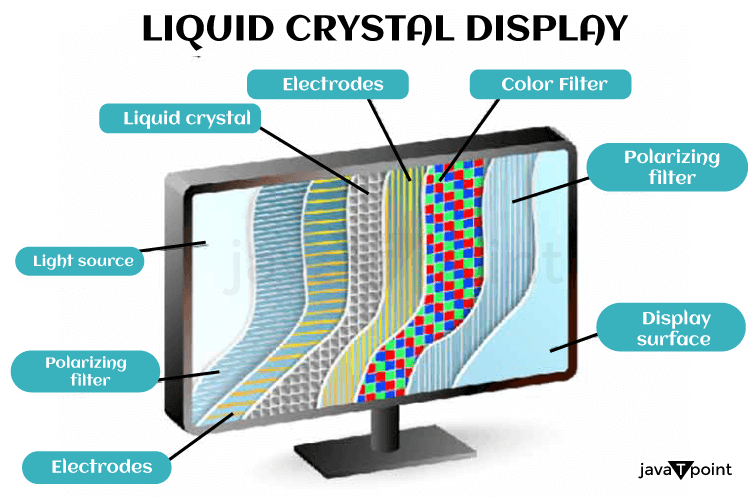

Only trained specialists could read these punch cards, which were inserted into computers that could read and execute the program. Similarly, operators fed paper tape through a machine. Eventually, they used electric typewriters to print the computer's output on rolls of paper so that it could be read. ENIAC was one of these early electronic computers.

III. Introduction of Plasma Screens

Plasma displays were developed in the 1960s. This display technology trapped an ionized gas between two sheets of glass. Applying a voltage across the sheets at a particular spot made the gas glow there. The PLATO computer terminal is reputed to be one of the first systems to use a plasma display. IBM and GRiD later applied the idea to small, light screens in portable computers. The technology later drew renewed attention in TVs, but it never became very popular for PCs.

IV. CRT's Arrival

Early computers did not have displays; instead, some used cathode-ray tubes as memory. Designers were familiar with CRTs, so they also used them to display the data stored in CRT-based memory. The first graphical displays, such as those of the SAGE system and the PDP-1, were built from CRTs adapted from oscilloscope and radar technology. Even so, they were not yet widely used.

V. The Introduction of LCD Flat-Screen Monitors

The liquid crystal display (LCD) is another type of computer display, and it first gained popularity in early calculators and wristwatches. Portable computers adapted LCDs for computer displays and made full use of their flat form. LCDs were lightweight and energy-efficient. However, they were monochrome, had little contrast, and had demanding lighting requirements.

Early LCDs needed direct lighting to be readable. With the advent of laptop computers, LCDs advanced, gaining better contrast, wider viewing angles, and more accurate color. LCDs took up less desk space, produced less heat, and used less electricity. The introduction of color LCDs by ViewSonic, IBM, and Apple made CRT displays harder to sell.

VI. Modern Monitors

Widescreen LCDs are highly regarded in the PC sector. Their affordability has also made them a booming consumer industry. Manufacturers have added 3D support, which relies on very high refresh rates and special glasses. As digital TV sets have become more common, LCD flat panels have replaced older display technologies in both computer monitors and TVs. New technologies have also given flat panels more consumer-friendly features. One example is the glossy screen, which replaces the anti-glare matte coating with a glossy one to improve color saturation and clarity.

Curved flat panels reduce geometric distortion when viewed up close. With polarizers and special 3D glasses, some displays create the illusion of depth. Recent displays have added numerous other features as well:

- anti-glare and anti-reflection screens
- directional screens for narrow viewing angles
- integrated professional accessories, such as built-in screen calibration tools and signal transmitters
- tablet screens
- local dimming backlights
- compensation for uneven backlight brightness or color

Types of Computer Monitors

Computer displays have evolved along with technology. The six main types of computer monitors are described here for anyone who is unsure what they are or would like to learn more.

1. CRT Monitor

The CRT is one of the earliest display types. An electron beam strikes millions of tiny red, green, and blue phosphor dots on the screen. As the beam passes over the dots, they glow and form a visible image. How this works inside a CRT is shown in the figure below.

In electronics, anode and cathode are the names of a device's electrodes. In a vacuum tube such as a CRT, the anode is the positive electrode and the cathode is the negative one. In a cathode ray tube, a heated filament serves as the cathode, and a vacuum surrounds it inside a glass tube. The "ray" is a stream of electrons that an electron gun emits from the heated cathode into the vacuum. Electrons carry a negative charge, so the positive anode pulls them toward it. The inside of the screen is coated with phosphor, a substance that glows when struck by an electron beam.

2. LCD Monitor

The LCD monitor is one of the display types that became common as laptops spread. Liquid crystal display (LCD) monitors appear mostly in flat-panel displays and laptops, and they use LCD technology to produce sharp pictures.

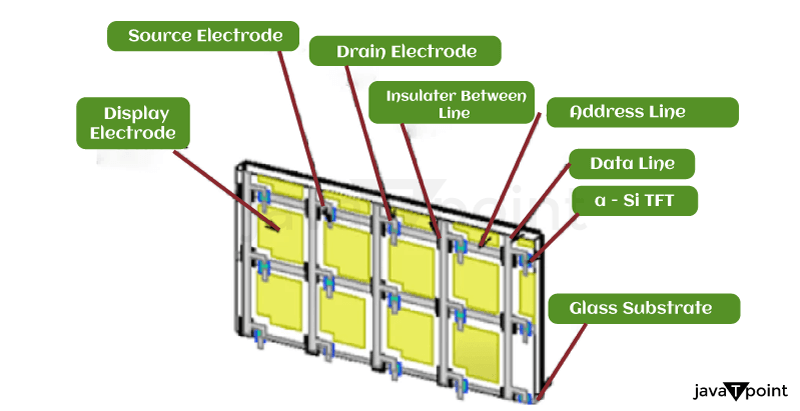

This technology has replaced the conventional cathode ray tube (CRT) monitors. CRTs were the earlier norm and were once considered to have better image quality than early LCD models. Thanks to continued improvements, LCDs have since overtaken CRTs in color and picture quality and in support for high resolutions. LCD monitors can also be made for much less money than CRT monitors.

3. TFT Monitor

TFT monitors are LCDs that use thin-film transistor technology. In both TVs and computer screens, LCD monitors (also known as flat panel displays) are replacing outdated cathode ray tubes (CRTs). Almost all LCD monitors today use TFT technology. The advantage of thin-film technology is that each display pixel has its own small transistor. Each transistor is tiny, so little charge is needed to control it. This lets the picture be redrawn many times per second, so the screen refreshes quickly.

Before TFT, passive-matrix LCDs could not display fast-moving pictures well. For instance, if the mouse pointer moved from point X to point Y on the screen, it would vanish in the space between the two points. A TFT monitor can track the pointer, so its display is suitable for video, gaming, and all other types of multimedia.

A 17-inch TFT monitor has about 1.3 million pixels. Each pixel's red, green, and blue subpixels have their own transistor, so the monitor contains roughly three times as many transistors as pixels. With so many transistors, there is a significant chance that one or more will malfunction, so "dead pixels" are common. A dead pixel is one whose transistor has failed, leaving it unable to display its part of the picture. Faulty pixels appear as tiny dots, for example a red, white, or blue dot on an all-black background. Most manufacturers won't replace a monitor that has fewer than 11 dead pixels. Most screens have no dead pixels. Even on screens that do, the dead pixels usually stand out only in a prominent spot.

4. LED Monitor

An LED display (light-emitting diode display) uses a panel of LEDs as its light source. Many small and large electronic devices use LED displays as screens and as a means of interaction between the user and the system. These devices include mobile phones, TVs, tablets, computer displays, and laptop screens. The LED display is one of the most widely used commercial screen displays. Its main benefits are efficiency and low energy consumption, which are crucial for portable, rechargeable devices like mobile phones and tablets. An LED display is made up of several LED panels, and each panel contains many LEDs.

Compared to other light sources, LEDs have several benefits. They use less electricity and produce brighter, more intense light. LED displays should not be confused with vacuum fluorescent displays, such as those used in car stereos and videocassette recorders.

5. Touchscreen Monitor

The touchscreen monitor is the type of display that became popular with smartphones. Its display panel doubles as an input device. Users interact with the computer by touching images or text on the screen, which detects the touch.

There are three varieties of touchscreen technology:

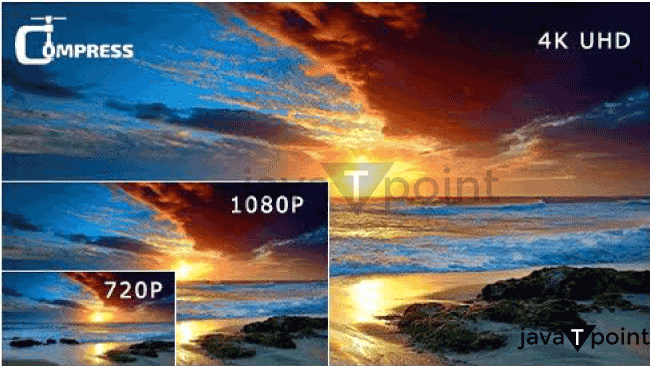

6. OLED Monitor

The OLED monitor is next on the list. OLED stands for Organic Light-Emitting Diode, a technology in which the light-emitting material is made of organic molecules. OLEDs are said to be used in some of the best display panels in the world. OLED displays are made by sandwiching several thin organic layers between two conductors. When an electrical current is applied, the layers emit bright light. This simple design has several advantages over other display technologies. In an LCD, the light comes from a backlight unit. OLEDs, by contrast, are emissive: each pixel is controlled independently and generates its own light. Bright colors, fast motion, and, most importantly, a high contrast ratio give OLED displays excellent picture quality. Most notably, they produce "true" blacks, which LCDs can't produce because of their backlighting. The simple OLED structure also makes flexible and transparent screens relatively easy to produce.

Features Of Computer Monitor

Several features should be considered before choosing a computer monitor.

1. Resolution

Resolution is a crucial property of any monitor. It is the number of pixels across the screen's width and height. A pixel, short for "picture element," is a tiny point of light that makes up part of the image. For instance, a 2,560 by 1,440 screen has 3,686,400 pixels.
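The total pixel count is simply width multiplied by height, so the arithmetic above is easy to reproduce. The short Python sketch below does this for a few common resolutions. The resolution table and the `total_pixels` helper are illustrative assumptions, not taken from any standard.

```python
# Illustrative table of common resolutions (width x height); an assumption, not a standard list.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K UHD": (3840, 2160),
}

def total_pixels(width: int, height: int) -> int:
    """Total pixels = pixels per row x number of rows."""
    return width * height

for name, (w, h) in RESOLUTIONS.items():
    print(f"{name}: {w} x {h} = {total_pixels(w, h):,} pixels")
```

Running it prints 2,073,600 pixels for 1080p, 3,686,400 for 1440p (matching the example above), and 8,294,400 for 4K UHD.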

For common resolutions, manufacturers may quote only one dimension: 4K refers to the width, while 1080p and 1440p refer to the height. High definition (HD) refers to any resolution of 1,280 × 720 or higher. A higher display resolution also makes the image clearer, since individual pixels become harder to see with the naked eye. Higher resolutions also offer benefits beyond extra detail in video games and movies.

2. Color

When two monitors sit side by side, it can be easy to tell which one has brighter colors, deeper blacks, or a more lifelike color palette. Monitor color is measured in several ways, however, so it can be hard to picture the result from the specifications alone. Contrast ratio, brightness, black level, color gamut, and other factors all come into play. This section therefore concentrates on just one of them, color depth. Color depth measures a monitor's ability to display subtly different colors without banding or inaccurate rendering. It indicates how much information (measured in bits) is used to create a single pixel's color.
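The number of displayable colors grows exponentially with color depth. With b bits per channel, each channel has 2^b intensity levels, and three channels give (2^b)^3 colors. Below is a minimal Python sketch, assuming the usual three RGB channels. The function name `colors_for_depth` is hypothetical.

```python
def colors_for_depth(bits_per_channel: int, channels: int = 3) -> int:
    """Distinct colors when each of `channels` channels has 2**bits_per_channel levels."""
    levels = 2 ** bits_per_channel  # e.g. 256 intensity levels for 8 bits
    return levels ** channels       # every combination of channel levels is a color

print(f"{colors_for_depth(8):,}")   # 8 bits per channel
print(f"{colors_for_depth(10):,}")  # 10 bits per channel
```

For 8 bits per channel this gives 16,777,216 colors, and for 10 bits per channel it gives 1,073,741,824.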

Each pixel on the screen is made up of three color channels, red, green, and blue, each lit at a different intensity. Together they can produce (usually) millions of different hues. An 8-bit color display uses eight bits for each color channel. It can therefore show a total of 2^8 × 2^8 × 2^8 = 256 × 256 × 256 = 16,777,216 colors.

3. Refresh Rate

The refresh rate is how often the image on the entire screen is updated. At higher refresh rates, onscreen motion looks smoother because each object's position is updated more often. This can make a screen feel more responsive when scrolling through a website or launching an app on a phone. It can also help competitive gamers track moving enemies in first-person shooters.
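One way to see why higher refresh rates look smoother is to convert the rate into the time each frame stays on screen. A display refreshing at f Hz shows a new image every 1000 / f milliseconds. Below is a small Python sketch; the helper `frame_time_ms` is hypothetical.

```python
def frame_time_ms(refresh_hz: float) -> float:
    """Milliseconds between screen refreshes at the given refresh rate."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return 1000.0 / refresh_hz

for hz in (60, 120, 144, 240):
    print(f"{hz} Hz -> {frame_time_ms(hz):.2f} ms per frame")
```

The output shows 16.67 ms per frame at 60 Hz, 8.33 ms at 120 Hz, 6.94 ms at 144 Hz, and 4.17 ms at 240 Hz. The time saved per frame shrinks as the rate climbs. Going from 60 to 120 Hz saves about 8.3 ms, but going from 120 to 240 Hz saves only about 4.2 ms.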

At higher refresh rates, sharp camera movements look smoother, motion blur is reduced, and moving objects are easier to follow with your eyes. The benefits of refresh rates above 120Hz are hotly debated online. Response time is the time, in milliseconds, that a pixel takes to change color. Faster response times mean fewer visual artifacts, such as blurred motion or "trails" behind moving images.

4. Mounting

Gaming monitor stands often offer three adjustments: tilt, height, and rotation. These let you position the monitor ergonomically and adapt it to different environments. The VESA mounting holes on the back of a monitor determine whether it works with other mounts, such as wall mounts or movable monitor arms. VESA (the Video Electronics Standards Association, an industry group of manufacturers) developed this standard. It defines the screws needed to attach the monitor and the distance in millimetres between the mounting holes.

5. Aspect Ratio

A monitor's aspect ratio is the ratio of its width to its height. The boxy displays of the 1990s were commonly 4:3, or "standard," rather than a perfectly square 1:1. They have since been replaced by widescreen (16:9) and some ultrawide aspect ratios (21:9, 32:9, 32:10). Many modern video games support widescreen and ultrawide aspect ratios.

Conclusion

A computer monitor is a piece of hardware that displays the images and visual information an attached computer produces through its video card. Some of the most widely used monitor brands are Hanns-G, Acer, Dell, Sceptre, LG Electronics, Samsung, HP, and AOC. Their monitors can be bought directly from these manufacturers or from stores such as Amazon and Newegg. A monitor's performance usually depends on many factors rather than on just one, such as overall screen size. 
These factors include:

- aspect ratio (the ratio of width to height)
- battery life
- refresh rate
- contrast ratio (the ratio between the brightest and darkest colors the screen can show)
- response time (the time a pixel takes to switch from active to inactive and back to active)
- display resolution
- and others
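An aspect ratio can be derived from a resolution by dividing both dimensions by their greatest common divisor. Below is a minimal Python sketch using the standard library's `math.gcd`. The `aspect_ratio` helper name is hypothetical.

```python
from math import gcd

def aspect_ratio(width: int, height: int) -> str:
    """Reduce a resolution to its simplest width:height ratio."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    d = gcd(width, height)  # largest number dividing both dimensions
    return f"{width // d}:{height // d}"

print(aspect_ratio(1920, 1080))  # widescreen
print(aspect_ratio(1024, 768))   # 1990s "standard"
print(aspect_ratio(3440, 1440))  # ultrawide
```

These calls print 16:9, 4:3, and 43:18 respectively. Marketing labels are approximations: 3,440 × 1,440 reduces exactly to 43:18, yet it is sold as "21:9."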

A monitor is typically connected through a VGA, DVI, or HDMI port; Thunderbolt, Thunderbolt 2, and DisplayPort are other options. Before buying a new monitor for your computer, make sure both devices support the same type of connection. For instance, if the computer has only a VGA output, don't buy a monitor that has only an HDMI input. Most devices offer several connections to accommodate different equipment. Even so, it is still important to check that the video card and monitor are compatible.
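The compatibility check described above amounts to finding the connection types that both devices share. Below is a minimal Python sketch. The port sets and the `shared_connections` helper are hypothetical examples rather than any real device's specifications.

```python
def shared_connections(computer_ports: set[str], monitor_inputs: set[str]) -> set[str]:
    """Connection types available on both the computer and the monitor."""
    return computer_ports & monitor_inputs  # set intersection

# Hypothetical example: a VGA-only computer and an HDMI/DisplayPort monitor.
computer = {"VGA"}
monitor = {"HDMI", "DisplayPort"}

common = shared_connections(computer, monitor)
if common:
    print("Compatible via:", ", ".join(sorted(common)))
else:
    print("No shared connection type; check before buying.")
```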
