What is Parallel Computing?

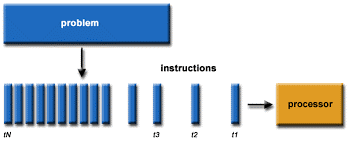

Parallel computing refers to the process of using several processors to execute an application or computation simultaneously. Generally, it is a kind of computing architecture in which large problems are broken into independent, smaller, usually similar parts that can be processed at the same time. The parts are typically processed by multiple CPUs that communicate via shared memory, and the results are combined upon completion. Because the large problem is divided among more than one processor, parallel computing helps in performing large computations. By increasing the computation power available to a system, it also speeds up application processing and task resolution.

Most supercomputers operate on parallel computing principles, and parallel processing is commonly used in operational scenarios that need massive processing power or computation. Typically, this infrastructure is housed in a server rack in which many processors are installed. The application server divides computational requests into small chunks, and the chunks are then processed simultaneously on each server.

The earliest computer software was written for serial computation, executing only a single instruction at a time, whereas parallel computing uses several processors to execute an application or computation at the same time. There are many reasons to use parallel computing, such as saving time and money, providing concurrency, and solving larger problems. Furthermore, splitting a large task into smaller parts can make it easier to manage. A real-life example is a ticket counter with two queues: if two cashiers serve two people simultaneously, time is saved and each queue stays shorter.

Types of Parallel Computing

Open-source and proprietary parallel computing vendors generally offer three types of parallel computing, which are discussed below:

Applications of Parallel Computing

There are various applications of parallel computing, which are as follows:

Advantages of Parallel Computing

The advantages of parallel computing are discussed below:

Disadvantages of Parallel Computing

There are many limitations of parallel computing, which are as follows:

Fundamentals of Parallel Computer Architecture

Parallel computer architecture is classified on the basis of the level at which the hardware supports parallelism. There are different classes of parallel computer architectures, which are as follows:

Multi-core computing

A multi-core processor is a computer processor integrated circuit containing two or more distinct processing cores, which can execute program instructions simultaneously. The cores may implement architectures such as VLIW, superscalar, multithreading, or vector, and they are integrated on a single integrated circuit die or onto multiple dies in a single chip package. Multi-core architectures are classified as homogeneous, consisting only of identical cores, or heterogeneous, consisting of cores that are not identical.

Symmetric multiprocessing

Symmetric multiprocessing (SMP) is a multiprocessor computer architecture in which two or more identical, independent processors are managed by a single operating system that treats all processors equally. Each processor can work on any task no matter where the data for that task is located in memory, and the processors may be connected using on-chip mesh networks. Each processor also has its own private cache memory.

Distributed computing

The components of a distributed system are located on different networked computers, which coordinate their actions by communicating through HTTP, RPC-like connectors, and message queues. Distributed systems are characterized by the concurrency of components and the independent failure of components. Distributed programs are typically organized as client-server, three-tier, n-tier, or peer-to-peer architectures. The terms parallel computing and distributed computing are sometimes used interchangeably, because the two overlap considerably.
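The coordination described above, where components exchange messages instead of sharing memory, can be sketched in Python. This is a minimal single-machine sketch under stated assumptions: threads stand in for networked computers, and the standard library's `queue.Queue` stands in for a real message queue or RPC connector. The names `worker`, `distributed_sum_of_squares`, and `STOP` are hypothetical, chosen only for illustration. A coordinator splits the input into chunks, sends each chunk as a message, and combines the partial results that come back.

```python
import queue
import threading

STOP = None  # hypothetical sentinel message telling a worker to shut down


def worker(tasks: queue.Queue, results: queue.Queue) -> None:
    """Receive (chunk_id, numbers) messages and reply with (chunk_id, partial_sum)."""
    while True:
        message = tasks.get()
        if message is STOP:
            break
        chunk_id, numbers = message
        results.put((chunk_id, sum(n * n for n in numbers)))


def distributed_sum_of_squares(data: list, n_workers: int = 4, chunk_size: int = 100) -> int:
    """Coordinator: split the work into messages, collect replies, combine them."""
    tasks: queue.Queue = queue.Queue()
    results: queue.Queue = queue.Queue()
    workers = [
        threading.Thread(target=worker, args=(tasks, results))
        for _ in range(n_workers)
    ]
    for w in workers:
        w.start()

    # Send one message per chunk of the input.
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
    for chunk_id, chunk in enumerate(chunks):
        tasks.put((chunk_id, chunk))
    # The queue is FIFO, so every worker sees all task messages before its STOP.
    for _ in workers:
        tasks.put(STOP)

    # Collect exactly one reply per chunk; replies may arrive in any order.
    partials = dict(results.get() for _ in chunks)
    for w in workers:
        w.join()
    return sum(partials.values())


print(distributed_sum_of_squares(list(range(1000))))
```

Because each reply carries its chunk id, the coordinator does not depend on the order in which workers finish. A real distributed system would also have to handle the independent failure of components (for example, a worker that never replies), which this sketch does not attempt.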

Massively parallel computing

In massively parallel computing, many computers are used simultaneously to execute a set of instructions in parallel. Grid computing is another approach, in which numerous distributed computer systems work simultaneously and communicate over the Internet to solve a specific problem.

Why Parallel Computing?

There are various reasons why we need parallel computing, which are discussed below:

Future of Parallel Computing

The shift from serial to parallel computing has completely changed the computing landscape. Tech giants like Intel have already started to include multi-core processors in their systems, which is a great step towards parallel computing. Parallel computation is expected to revolutionize the way computers work, and it plays an important role in connecting the world more closely than before. Moreover, the parallel approach becomes ever more necessary with multi-processor computers, faster networks, and distributed systems.

Difference Between Serial Computation and Parallel Computing

Serial computing, also known as sequential computing, refers to the use of a single processor to execute a program: the program is divided into a sequence of instructions, and each instruction is processed one by one. Traditionally, software has been programmed sequentially, which offers a simpler approach, but the processor's speed significantly limits how fast each series of instructions can be executed. Also, uni-processor machines use sequential data structures, whereas parallel computing environments use concurrent data structures.

Measuring performance is far less important and less complex in sequential programming than in parallel computing, where benchmarking includes identifying bottlenecks in the system. In parallel computing, benchmarks can be obtained with the help of benchmarking and performance regression testing frameworks, which employ measurement methodologies such as multiple repetitions and statistical treatment of the results. The ability to avoid such bottlenecks by moving data through the memory hierarchy is mainly evident in parallel computing. Parallel computing comes at a greater cost and may be more complex; however, it can handle larger problems and solve them faster.
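The contrast between serial and parallel execution, and the idea of benchmarking with multiple repetitions and statistical treatment, can be illustrated with a small Python sketch. It assumes a toy workload (summing squares), and the helper names `sum_of_squares`, `serial_sum`, `parallel_sum`, and `benchmark` are hypothetical; only the standard library's `concurrent.futures`, `timeit`, and `statistics` modules are used. `parallel_sum` follows the split, process, combine pattern, and `benchmark` repeats each measurement and reports the median and standard deviation instead of a single timing.

```python
import statistics
import timeit
from concurrent.futures import Executor, ThreadPoolExecutor


def sum_of_squares(numbers: list) -> int:
    """The unit of work: sum the squares of one chunk of numbers."""
    return sum(n * n for n in numbers)


def serial_sum(data: list) -> int:
    """Serial version: a single worker processes every element in sequence."""
    return sum_of_squares(data)


def parallel_sum(
    data: list,
    n_workers: int = 4,
    executor_cls: type = ThreadPoolExecutor,
) -> int:
    """Parallel version: split the data, process the chunks concurrently, combine."""
    # Ceiling division so every element lands in some chunk; at least 1 per chunk.
    size = max(1, (len(data) + n_workers - 1) // n_workers)
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    pool: Executor
    with executor_cls(max_workers=n_workers) as pool:
        # Combine step: add up the partial sums returned by the workers.
        return sum(pool.map(sum_of_squares, chunks))


def benchmark(fn, *args, repeats: int = 5, number: int = 3) -> dict:
    """Time fn(*args) repeatedly and summarize the per-call timings."""
    totals = timeit.repeat(lambda: fn(*args), repeat=repeats, number=number)
    per_call = [t / number for t in totals]
    return {
        "runs": len(per_call),
        "median_s": statistics.median(per_call),
        "stdev_s": statistics.stdev(per_call) if len(per_call) > 1 else 0.0,
    }


if __name__ == "__main__":
    data = list(range(100_000))
    assert serial_sum(data) == parallel_sum(data)
    print("serial:  ", benchmark(serial_sum, data))
    print("parallel:", benchmark(parallel_sum, data))
```

In CPython, threads do not execute Python bytecode on several cores at once, so the default `ThreadPoolExecutor` shows the structure of the computation rather than a speedup. Passing `executor_cls=ProcessPoolExecutor` (from the same `concurrent.futures` module, called under an `if __name__ == "__main__":` guard) spreads the chunks across processes; the extra cost of starting processes and copying data is one concrete form of the greater cost mentioned above.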

History of Parallel Computing

Interest in parallel computing dates back to the late 1950s, with advancements coming in the form of supercomputers throughout the '60s and '70s. In April 1958, Stanley Gill (Ferranti) discussed parallel programming and the need for branching and waiting. Also in 1958, IBM researchers Daniel Slotnick and John Cocke discussed the use of parallelism in numerical calculations for the first time. In 1962, Burroughs Corporation introduced the D825, a four-processor computer. In 1967, Amdahl and Slotnick published a debate about the feasibility of parallel processing at the American Federation of Information Processing Societies (AFIPS) Conference. In 1969, Honeywell introduced its first Multics system, a symmetric multiprocessor system able to run up to eight processors in parallel.

In the 1970s, C.mmp, a multi-processor project at Carnegie Mellon University, was among the first multiprocessors with more than a few processors. Later, a supercomputer for scientific applications built from 64 Intel 8086/8087 processors was launched, starting a new type of parallel computing. In 1984, the Synapse N+1 became the first bus-connected multiprocessor with snooping caches. In 1964, Slotnick had proposed building a large-scale parallel computer for the Lawrence Livermore National Laboratory. The ILLIAC IV, funded by the US Air Force, was the earliest SIMD parallel-computing effort.
