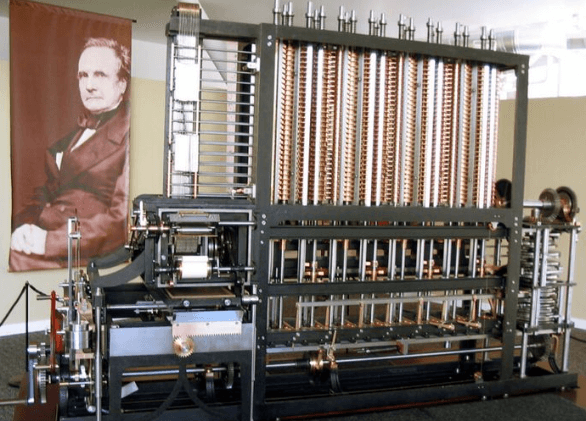

Who invented the computer?

Computers have developed into complex electronic devices capable of performing sophisticated functions at very high speeds. They have played a vital role in shaping modern civilization, affecting many aspects of everyday life, including work, communication, access to information, and lifestyle. Computer systems have advanced dramatically over time, from simple calculators to cutting-edge supercomputers and portable laptops, greatly influencing industry, research, education, entertainment, and many other disciplines. Computers are enormously important today. They let us handle massive volumes of data, solve complicated problems, and automate routine chores. They have improved the efficiency and accuracy of industries such as healthcare, banking, manufacturing, and transportation. They have revolutionized communication by allowing people all around the world to communicate quickly. Computers have also made it easier to build virtual worlds, opening up new possibilities in gaming, virtual reality, and augmented reality. Naming a single inventor of the computer is difficult because of the collaborative nature of its development. The computer's evolution involved contributions from many individuals and technological advances spanning many decades. It is the product of ideas, inventions, and innovations from many brilliant minds.

History and Evolution of Computers

One could argue that the abacus, or its descendant the slide rule, invented by William Oughtred in 1622, was the first computer. But the first machine resembling today's computers was the Analytical Engine, which British mathematician Charles Babbage conceived and designed between 1833 and 1871. Before Babbage came along, a "computer" was a person, someone who sat all day performing calculations by hand.
Such human computers added and subtracted numbers and entered the results into tables. These tables were then copied into books, which other people used to solve problems such as calculating taxes or aiming artillery shells accurately. In fact, it was one such table-making project that first motivated Babbage. In 1790, the French government launched a project to convert from the old system of measurement to the new metric system. For 10 years, scores of human computers made the necessary conversions and completed the tables. The tables were never published, however, and sat collecting dust in the Académie des Sciences in Paris. In 1819, while visiting the City of Light, Babbage viewed the unpublished manuscript, page after page of tables. He wondered whether such tables could be produced faster, with less manpower and fewer mistakes, and he was struck by the many marvels of the Industrial Revolution: if inventors could develop the steam locomotive and the cotton gin, why not a machine to perform calculations? When he returned to England, Babbage decided to build such a machine. His first design, which he named the Difference Engine, worked on the principle of finite differences: it could carry out complex mathematical calculations by repeated addition, without using multiplication or division. He began receiving funding from the British government in 1823 and spent about a decade perfecting his idea, but the funding ran out, and he produced only a small working portion of his table-making machine, completed in 1832.

Charles Babbage and the Analytical Engine

Babbage kept working without getting discouraged. Rather than simplifying his Difference Engine design to make it easier to build, he turned his attention to a grander idea: the Analytical Engine. The Analytical Engine was a new kind of mechanical computer that could perform more complex calculations, including multiplication and division. Its basic parts closely resemble the components of computers sold today. It included two hallmarks of any modern machine: a memory and a central processing unit (CPU). Babbage called the CPU the "mill" and the memory the "store." It also had a reader for feeding in instructions and a device for recording the machine's output on paper, which Babbage called the printer, a predecessor of today's inkjet and laser printers.

Babbage's new invention existed almost entirely on paper. He produced nearly 5,000 pages of detailed notes and sketches about his computers, and although he never built the Analytical Engine, he had a clear vision of how the machine would look and work. Borrowing the technology of the Jacquard loom, a weaving machine developed in 1804-05 that could create a variety of cloth patterns automatically, the Analytical Engine would take its data on punched cards. Its store could hold up to 1,000 numbers of 50 digits each. Punched cards would also carry the instructions, which the machine could execute in sequential order. Unfortunately, Babbage's ambitious design was beyond the technology of his day. It was not until 1991 that his detailed ideas were finally turned into a functioning computer, when the Science Museum in London built Babbage's Difference Engine to his exact specifications. The machine is about 11 feet (more than 3 meters) long and 7 feet (more than 2 meters) tall, weighs about 5 tons, and contains 8,000 parts. A copy of the machine was built and shipped to the Computer History Museum in Mountain View, California, where it remained on display until December 2010.

The impact of Babbage's ideas extended beyond his own time. His concepts influenced later generations of inventors and engineers, inspiring further advances in computer technology. Babbage's work inspired Ada Lovelace, who recognized the potential of the Analytical Engine beyond mere calculation and is widely regarded as the world's first computer programmer. Moreover, Babbage's contributions laid the groundwork for modern computing principles and concepts. His designs anticipated key elements of later computer architecture, such as the separation of processing (the mill) from memory (the store). The mechanical computation and automation concepts that Babbage pioneered set the stage for the later evolution of electronic and digital computing systems.
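Babbage's Difference Engine tabulated values using the method of finite differences. For a polynomial of degree n, the nth difference is constant, so once the first value and its leading differences are in place, every later value follows from repeated addition alone. The Python sketch below is a minimal illustration of that principle, not a model of Babbage's mechanism; the function names `initial_differences` and `tabulate` and the example polynomial are illustrative assumptions.

```python
from typing import List, Sequence


def initial_differences(values: Sequence[int]) -> List[int]:
    """Leading entries of the difference table: f(0), Δf(0), Δ²f(0), ...

    Needs degree + 1 consecutive values. This set-up step uses subtraction;
    on Babbage's engine the starting columns were set by the operator.
    """
    result: List[int] = []
    row = list(values)
    while row:
        result.append(row[0])
        row = [b - a for a, b in zip(row, row[1:])]
    return result


def tabulate(differences: Sequence[int], count: int) -> List[int]:
    """Produce `count` successive polynomial values using only addition."""
    cols = list(differences)
    table: List[int] = []
    for _ in range(count):
        table.append(cols[0])
        # Add each column into the one on its left. Going left to right means
        # cols[i + 1] still holds its old value when it is added to cols[i].
        for i in range(len(cols) - 1):
            cols[i] += cols[i + 1]
    return table


# Example polynomial (an arbitrary choice): p(x) = x^2 + x + 41, degree 2.
start = initial_differences([41, 43, 47])   # p(0), p(1), p(2)
print(start)                 # [41, 2, 2]
print(tabulate(start, 6))    # [41, 43, 47, 53, 61, 71]
```

Because the highest-order difference of a degree-n polynomial is constant, the last column is never updated in the loop, which is why n + 1 columns of adders are enough to tabulate a degree-n polynomial.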

Babbage's Influence and the Future of Computing

Charles Babbage's innovative thinking and groundbreaking designs had a tremendous impact on later inventors and computer pioneers. His work is often linked to famous figures such as Alan Turing and John von Neumann, and it left a lasting mark on the course of computer science. Although the Analytical Engine was only partially realized during Babbage's lifetime, it established a framework for modern computers: its design anticipated key components of contemporary computer architecture. It included a central processing unit, the "mill," and a memory unit, the "store," which closely parallel their modern equivalents. Babbage's idea of a machine capable of executing complicated computations, such as multiplication and division, foretold the capabilities of today's computers.

Alan Turing was one of the notable figures connected to Babbage's legacy. In his 1950 paper "Computing Machinery and Intelligence," Turing discussed the Analytical Engine and recognized it as a programmable, universal machine; his own concept of Turing Machines gave a precise theoretical account of what such machines can compute. Turing's wartime codebreaking work and his later proposal of the Turing Test moved computing forward and laid the groundwork for contemporary computer science.

John von Neumann, another major figure in computer science, is also associated with Babbage's legacy. Von Neumann's influential work on computer design, known as the von Neumann architecture, stores program instructions and data in the same memory unit. This stored-program approach went beyond Babbage's design, which kept its instructions on punched cards separate from the store, and it became the foundation for most present-day computer systems.

The continuing advancement of computer technology demonstrates the lasting importance of Babbage's vision. His concepts and insights laid the foundation for the digital revolution and later advances in computer science. Babbage's emphasis on automation and mechanical computation anticipated today's automation-driven society, in which computers are integral to many industries and aspects of daily life.

Ada Lovelace and the Analytical Engine

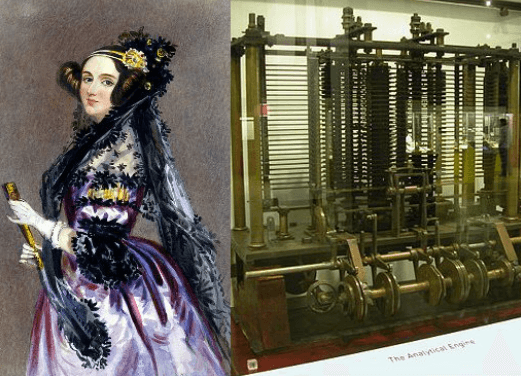

Ada Lovelace, born Augusta Ada Byron, was an English mathematician and writer best remembered as the world's first computer programmer. Her contributions to computing were inextricably linked to her partnership with Charles Babbage, notably on his groundbreaking design, the Analytical Engine. Lovelace first met Babbage in the 1830s, when she was introduced to him by her tutor, the mathematician Mary Somerville. Recognizing her outstanding mathematical skill, Babbage recruited Lovelace to work on his Analytical Engine, a mechanical computing device well ahead of its time. Lovelace's contributions to the Analytical Engine went beyond programming; she had a unique insight into its potential uses. She translated an 1842 article by Italian engineer Luigi Menabrea describing the Analytical Engine and, in 1843, published it with her own extensive notes, which expanded on the machine's capabilities. Her work on the Analytical Engine was characterized by her emphasis on the machine's potential to go beyond mere calculation. She anticipated that the Engine could manipulate symbols and produce results other than numbers, foreseeing modern programs that encompass data processing and logical operations. Although the Analytical Engine was never built, owing to funding and technological limitations, Lovelace's visionary insights were far-reaching. Her notes revealed a thorough understanding of the machine's capabilities and of the notion of computing, establishing her as a pioneer in the field. Her work laid the groundwork for the later development of computer programming. Even though it was largely overlooked during her lifetime, her ideas influenced later generations of computer scientists and programmers. Lovelace's contributions to the Analytical Engine and her visionary ideas about computing continue to shape the discipline of computer science today.

Turing Machines and the Birth of Computer Science
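To make the idea of a Turing Machine concrete, here is a minimal Python sketch of a single-tape machine under simplifying assumptions: the transition function is a dictionary mapping (current state, symbol read) to (symbol to write, head move, next state), the tape is unbounded in both directions with `_` as the blank symbol, and the machine stops when it reaches a state named `halt`. The `INCREMENT` rule table, which adds one to a binary number, is a hypothetical example chosen for illustration, not one of Turing's own machines.

```python
from typing import Dict, Tuple

# Transition function: (state, symbol read) -> (symbol to write, move, next state).
# move is -1 for one cell left, +1 for one cell right.
Rules = Dict[Tuple[str, str], Tuple[str, int, str]]

BLANK = "_"

# Hypothetical example machine: add 1 to a binary number written on the tape.
INCREMENT: Rules = {
    ("start", "0"): ("0", +1, "start"),      # scan right over the digits
    ("start", "1"): ("1", +1, "start"),
    ("start", BLANK): (BLANK, -1, "carry"),  # past the last digit: turn back
    ("carry", "1"): ("0", -1, "carry"),      # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", -1, "halt"),       # 0 + carry = 1, done
    ("carry", BLANK): ("1", -1, "halt"),     # ran off the left end: new leading 1
}


def run_turing_machine(rules: Rules, tape: str, state: str = "start",
                       halt: str = "halt", max_steps: int = 10_000) -> str:
    """Run the machine from the leftmost tape cell and return the final tape."""
    cells = dict(enumerate(tape))  # sparse tape; unvisited cells read as BLANK
    head = 0
    steps = 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("no halt within max_steps")
        symbol = cells.get(head, BLANK)
        if (state, symbol) not in rules:
            raise KeyError(f"no rule for state {state!r} reading {symbol!r}")
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += move
        steps += 1
    if not cells:
        return ""
    # Read back the visited region of the tape, trimming blanks at both ends.
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, BLANK) for i in range(lo, hi + 1)).strip(BLANK)


print(run_turing_machine(INCREMENT, "1011"))  # 1100
print(run_turing_machine(INCREMENT, "111"))   # 1000
```

The step limit is a practical safeguard added for the sketch: a real Turing Machine may run forever, and in general there is no way to decide in advance whether it will halt.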

Alan Turing, a brilliant mathematician, made fundamental contributions to computer science in the 1930s by developing the notion of Turing Machines. These theoretical machines established a basis for modern computers and shaped the field of computing. A Turing Machine is a hypothetical device designed to model any computation that can be carried out by a step-by-step mechanical procedure. It consists of a tape divided into cells, each capable of storing a symbol, and a read-write head that reads the symbol in the current cell, writes a new symbol, and moves one cell to the left or right. The machine also has a set of rules, called the transition function, which determines its next action based on its current state and the symbol being read.

Turing's theoretical work profoundly influenced the development of computer science. His concept of Turing Machines showed that computation could be carried out mechanically, challenging the prevailing notion that human intuition was necessary for complex calculations. This paved the way for electronic computers, as researchers realized that the mechanical processes described by Turing Machines could be implemented with electrical circuits. Turing also played a critical role during World War II, helping to crack the German Enigma code, which was thought to be unbreakable; his codebreaking techniques ultimately affected the outcome of the war. In recognition of these achievements, Turing is widely considered one of the fathers of computer science. His forward-thinking ideas and theoretical frameworks revolutionized the discipline and continue to drive the breakthroughs and possibilities of modern computing.

The Electronic Era: From ENIAC to Personal Computers

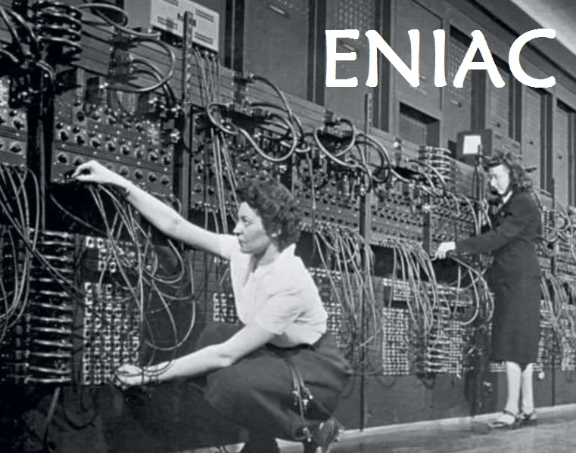

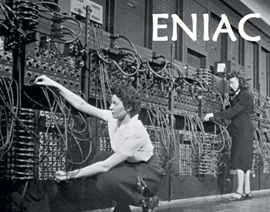

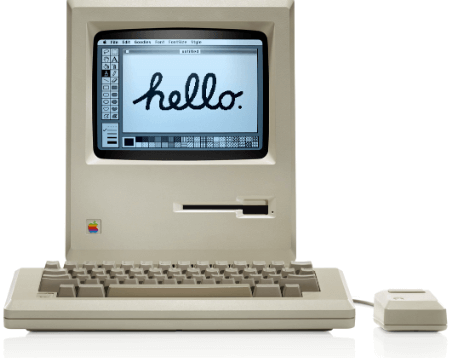

The evolution from massive electronic computers like the ENIAC to personal computers was a crucial turning point in the history of electronic computing. Several major advances, such as the invention of transistors and integrated circuits, were critical to this growth. The invention of the transistor by Bell Laboratories scientists in 1947 proved to be a game changer. Transistors replaced bulky, power-hungry vacuum tubes with smaller, more efficient electronic components. They were more reliable, used less power, and made computers smaller, faster, and cheaper. Computers like the UNIVAC and IBM 704 dominated computing in the 1950s. However, their size, cost, and technical complexity put them out of reach of individual users; these massive, expensive machines were used mainly by government agencies, research groups, and businesses for complex calculations and data processing. The launch of the IBM Personal Computer (IBM PC) in 1981 firmly established the popularity of personal computers. The IBM PC introduced a standardized, open design that made it well suited to a wide range of applications and programs. This open design fostered a broad hardware and software ecosystem, resulting in the rapid growth of personal computing. Personal computers became more powerful, more affordable, and more user-friendly each year. Graphical user interfaces, such as those of Apple's Macintosh in 1984 and Microsoft Windows in 1985, improved the user experience even further. With these advances in personal computer systems, computing was transformed from a specialized field into an essential tool for individuals, organizations, and education.

The Evolution of Computing Machines

After Charles Babbage's time, computing machines continued to progress, with improved electromechanical and electronic computer systems laying the foundation for modern computers. The development of electromechanical devices in the late 1800s was a major step. In 1889, American inventor Herman Hollerith created the punched-card tabulating machine. It used punched cards to store and process data, enabling the automated processing of large amounts of information, and it was commonly used for census tabulation and data analysis. The spread of mechanical calculators in the early twentieth century accelerated development. William Burroughs and James Dalton were among the pioneers of commercial adding machines; Dalton's adding machine appeared in 1902. These calculators used gears, levers, and other mechanical components to perform arithmetic quickly and precisely.

The most fundamental development in computing came in the middle of the twentieth century with the invention of electronic computers. The development of the Electronic Numerical Integrator and Computer (ENIAC) during World War II was an important turning point. Completed in 1945, the ENIAC was the world's first general-purpose electronic digital computer. It used vacuum tubes to perform calculations and could be reprogrammed to solve various problems. The next major advance came with the invention of the transistor in 1947. Transistors replaced vacuum tubes, offering smaller size, greater reliability, and higher speed. Meanwhile, in 1951, the UNIVAC I, which still used vacuum tubes, became the first commercially successful electronic computer in the United States. Mainframe computers, huge and powerful machines used by companies and government organizations for data processing and scientific computation, emerged in the 1950s and 1960s. These computers typically relied on batch processing, in which jobs were submitted and executed one after another.

The arrival of microprocessors in the 1970s marked a dramatic shift in computing. A microprocessor integrates the central processing unit (CPU) onto a single chip, resulting in more compact and efficient computer systems. This breakthrough paved the way for personal computers (PCs), which spread widely in the 1980s. For the first time, individuals and small businesses could own computers of their own. Several significant advances followed in later years. Networking became more common, graphical user interfaces were developed, and the internet emerged, linking people worldwide. These developments shaped the digital world we inhabit today. Technology has continued to progress, bringing us mobile devices, cloud computing, artificial intelligence, and even quantum computing, all of which have pushed the boundaries of what computers can achieve.
